Posts tagged as work

Worknotes: Winter 2024

23 January 2025

I was working up to the wire in 2024; my main client project wrapped up on December 20th. It was an intense sprint finish to the year, and since then, after some genuine rest, I’ve mainly been getting my feet back under the desk and slowly trying to bring the shape of 2025 into focus.

What was I up to in the past six-ish months?

Google DeepMind - further prototyping / exploration

I returned to the AIUX team at DeepMind for another stint on the project we’d been working on (see: Summer 2024 Worknotes), taking us from September through to the end of the year. This was meaty, intense, and involving, but I’m pleased with where it got to.

This time, the focus was in two areas: replatforming the project onto some better foundations, and then using those new foundations to facilitate exploring some new concepts.

This went pretty well, and I got to spend some good time with Drizzle (now in a much better place than when I first used it a long while ago; rather delightful to work with) and pgvector. Highly enjoyable joining vector-based searches onto a relational model, all in the same database.

And I think that’s all I can say about that. Really good stuff.

Poem/1 - further firmware development

I continued to work occasionally on the firmware development of Poem/1 with Matt Webb. The firmware was in a good place, and my work focused on formatting and fettling the rendering code, and iterating on some of the core code we’d worked on earlier in the year.

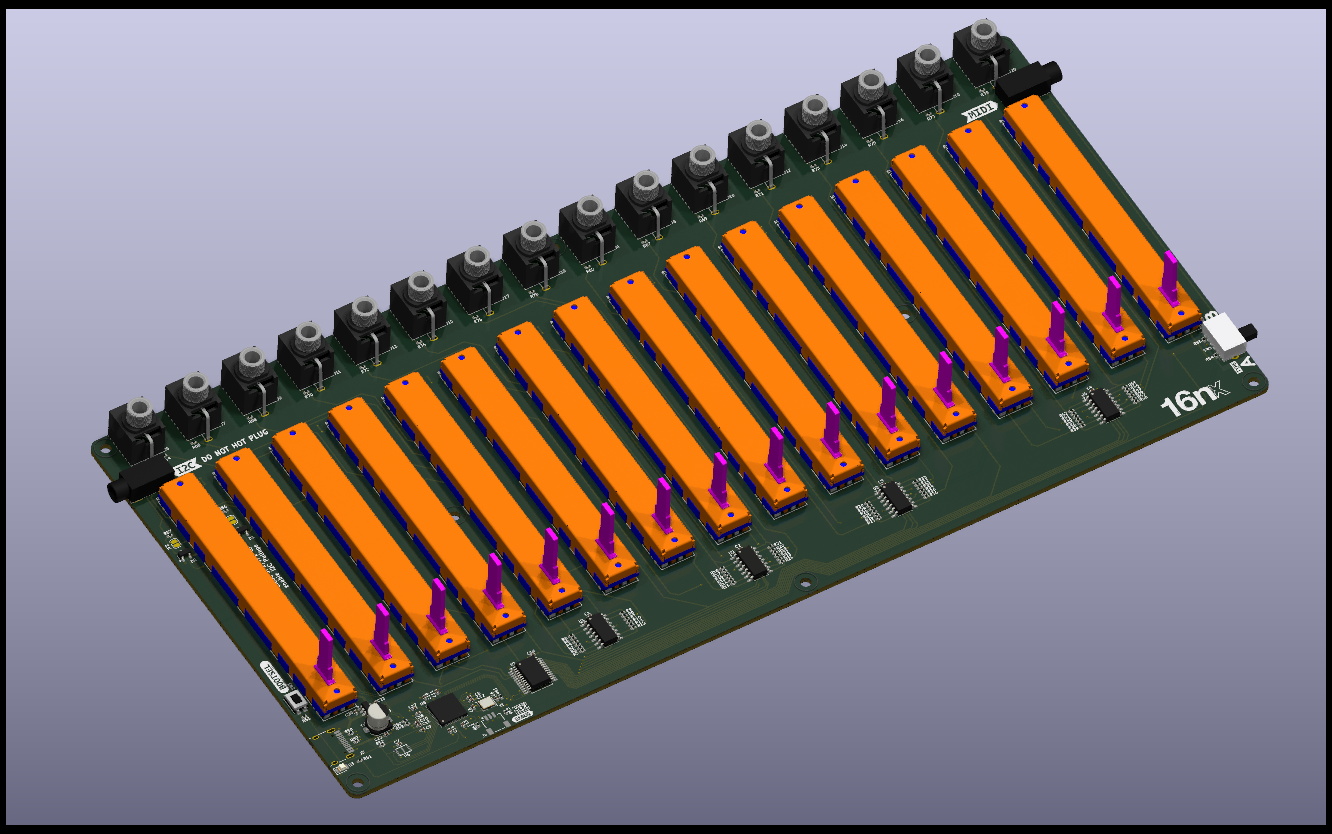

16nx

In September, I released a completely new version of the open-source MIDI controller I make. It has no new functionality. Instead, it solves the more pressing problem that you could no longer make a 16n, because the development board it was based on is no longer available. I ported everything to RP2040, and designed it around a single board with all componentry pre-assembled on it. I’ve got a case study of this coming next week, examining what I did and why, but for now, the updated 16nx site has more details on it. Another full-stack hardware project, with equal parts CAD, schematic design, layout, and C++.

Ongoing consulting

I kicked off some consulting work on a geospatial games project, currently acting as a technical advisor (particularly focusing on tooling around geodata), and this should continue into 2025. The way we’re working at the moment is with longer meetings/workshops based around rich briefs: the client assembles their brief / queries as a team, I prep some responses, present back to them, and that opens up future discussion. It’s a nice shape of work, focusing on expertise rather than delivery, and makes a good balance with some of the more hands-on tasks I have going on.

Coming up

2025 is, currently, quiet; slightly deliberately so, but I’m eyeing up what’s next. I’m back teaching for a morning a week at CCI from mid-February; I have further brief consultancy with the games project on the slate; there are a few other possibilities on the horizon. Plus, after a crunchy end-of-year on the delivery front, I’ve got a lot of admin to do, and ongoing personal research.

But that’s all “small stuff”.

Like clockwork, here is where I say “I’m always looking for what’s next”. You can email me if you have some ideas. Things I’m into: R&D, prototyping, zero-to-one, answering the question “what if”, interactions between hardware and software, making tools for others to use, applying mundane technology to interesting problems. Perhaps that’s a fit for your work. If so: get in touch.

Worknotes: end of 2023 wrap-up

8 January 2024

2023 was frustratingly fallow, despite all best efforts. Needless to say, not just for me - the technology market has seen lay-offs and funding cutbacks and everything has been squeezed. But after a quiet few months, the end of 2023 got very busy, and there have been a few different projects going on that I wanted to acknowledge. It looks like these will largely be drawing to a close in early 2024, so I’ll be putting feelers out around February, I reckon. In the meantime, several things are going on to close out the year, all at various stages:

Lunar Design Project

After working on the LED interaction test harness for Lunar, I kicked off another slightly larger project with them in the late autumn. It’s a little more of an exploratory design project - looking at ways of representing live data - and that’s going to roll into early 2024. More to say when we have something to show - but for now it’s a real sweet spot for me of code, data, design, and sound.

Web development project “C”

More work in progress here: a contract working on an existing product to deliver some features and integrations for early 2024. Returning to the Ruby landscape for a bit, with a great little team, and a nice solid codebase to build on. Lots of nitty-gritty around integrating with other platforms’ APIs. This is likely to wrap up in early 2024.

This and the Lunar project were primary focuses for November and December 2023.

Nothing Prototyping Project

Kicking off in December, and running into January 2024: a small prototyping project with the folks at Nothing.

AI Clock

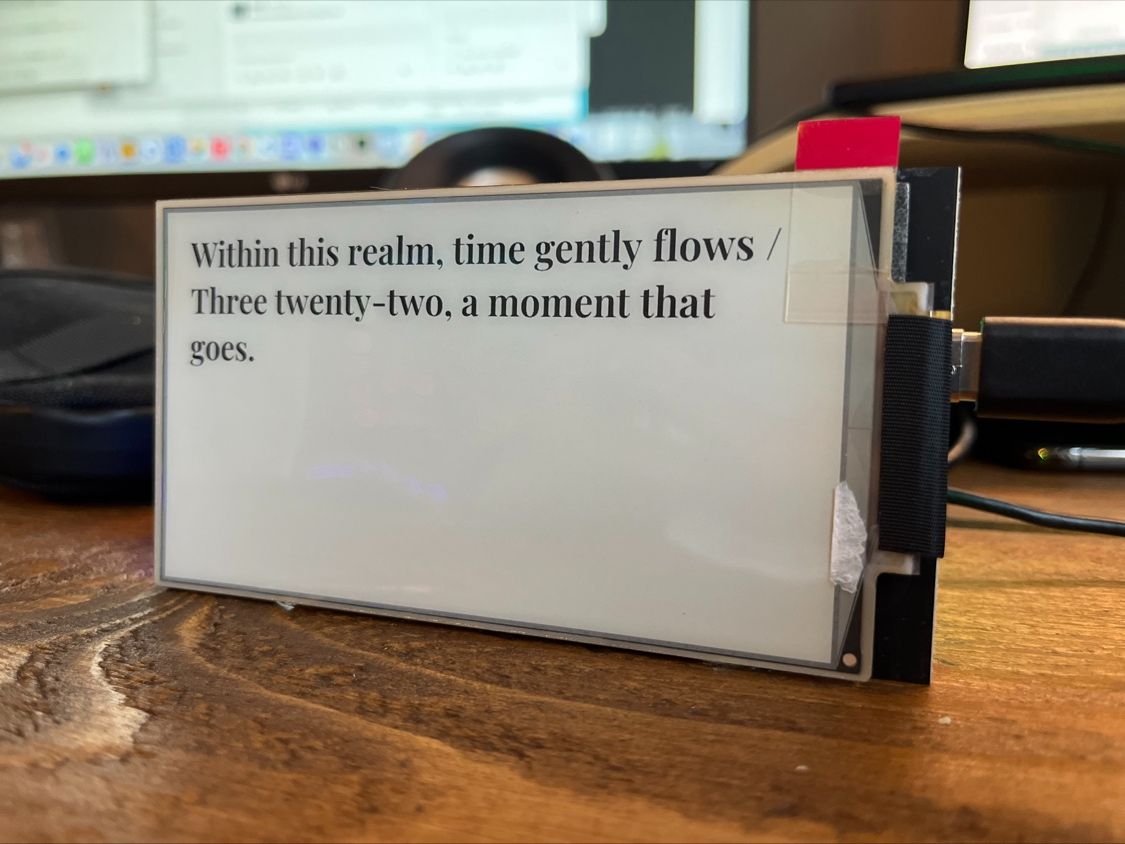

A short piece of work for Matt Webb to get the AI Clock firmware I worked on in the summer up and running on the production hardware platform. The nuances of individual e-ink devices and drivers made for the bulk of the work here. Matt shared the above image of the code running on his hardware at the end of the year; still after all these years of doing this sort of thing, it’s always satisfying to see somebody else pushing your code live successfully.

Four projects made the end of 2023 a real sprint to the finish; the winter break was very welcome. These projects should be coming in to land in the coming weeks, which means it’s time to start looking at what 2024 really looks like come February.

Recent and ongoing work: Community Connectivity

8 September 2023

I’ve begun a small piece of ongoing consultancy with Promising Trouble on their Community Connectivity project. It’s a good example of the strategy and consulting work I do in my practice, alongside more hands-on technology making.

Promising Trouble is working with Impact on Urban Health on a multi-year partnership to explore how access to the internet impacts health and wellbeing. I’ve been working in an advisory capacity on a pilot project that will test the impact of free - or extremely affordable - home internet access.

Our early work together has focused on narrowing down how to make that happen, from all the possibilities discovered early on in the project. That’s included a workshop and several discussions this summer, and we’ve now published a blogpost about some of that work.

We’ve made some valuable progress; as I write in the post,

A good workshop doesn’t just rearrange ideas you already have; it should also be able to confront and challenge the assumptions it’s built upon.

Our early ideas were rooted in early 21st-century usage of ‘broadband’, a cable in the ground to domestic property - and that same concept underpins current policy and legislation. But in 2023, there are other ways we perhaps should be thinking about this topic.

The post explores that change in perspective, as well as the discrepancy between the way “broadband” provision and mobile internet (increasingly significant as a primary source of access for many people) are billed and provided.

I hope that we’ve managed to communicate a little of how we’re shifting our perspectives around, whilst staying focused on the overall outcome.

My role is very much advice and consultation as a technologist - I’m not acting as a networking expert. I’m sitting between or alongside other technology experts, acting as a translator and trusted guide. I help synthesise what we’re discovering into material we can share (either internally or externally), and use that to make decisions. Processing, thinking, writing.

When Rachel Coldicutt, Executive Director at Promising Trouble, first wrote to me about the project, she said:

“I thought about who I’d talk to when I didn’t know what to do, and I thought of you.”

Once again, a project about moving from the unknown to the known.

The team is making good progress, on that journey from the unknown to the known, and I’ll be doing a few more days of work through the rest of the year with them; I hope to have more to share in the future. In the meantime: here’s the link to the post again.

New case study: Lunar Energy LED interactions

31 August 2023

I’ve shared a new case study of the work I did this summer with Lunar Energy (see previous worknotes).

As I explain at length over at the post, it’s a great example of the kind of work I relish: work that necessarily straddles design and engineering. It’s a project that goes up and down the stack, modern web front-ends talking to custom hardware, and all in the service of interaction design. Lunar were a lovely team; thanks to Matt Jones and his crew for being great to work with.

-

I recently released the 2.0.0 firmware for 16n, the open-source fader controller I maintain and support. This update, though substantial, is focused on one thing: improving the end-user experience around customisation. It allows users to customise the settings of their 16n using only a web browser. Not only that, but their settings will now persist between firmware updates.

I wanted to unpick the interaction going on here - why I built it and, in particular, how it works - because I find it highly interesting and more than a little strange: tight coupling between a computer browser and a hardware device.

To demonstrate, here’s a video where I explain the update for new users:

Background

16n is designed around a 32-bit microcontroller - Paul Stoffregen’s Teensy - which can be programmed via the popular Arduino IDE. Prior to version 2.0.0, all configuration took place inside a single file that the end-user could edit. To alter how their device behaved, they had to edit some settings inside config.h, then recompile the firmware and “flash” it onto the device.

This is a complex demand to make of a user. And whilst the 16n was always envisaged as a DIY device, many people attracted to it who might not have been able to make their own have, entirely understandably, bought one from other makers. As the project took off, “compile your own firmware” was a less attractive solution - not to mention one that was harder to support.

It had long seemed to me that the configuration of the device should be quite a straightforward task for the user; certainly not something that required recompiling the firmware to change. I’d been planning such a move for a successor device to 16n, and whilst that project was a bit stalled, the editor workflow was solid and fully working. I realised that I could backport the editor experience to the current device - and thus the foundation for 2.0.0 was laid.

MIDI in the browser

The browser communicates with 16n using MIDI, a relatively ancient serial protocol designed for interconnecting electronic musical instruments (on which more later). And it does this thanks to WebMIDI, a draft specification for a browser API for sending and receiving MIDI. Currently, it’s a bit patchily supported - but there’s good support inside Chrome, as well as Edge and Opera (so it’s not just a single-browser product). And it’s also viable inside Electron, making a cross-platform, standalone editor app possible.

Before I can explain what’s going on, it’s worth quickly reviewing what MIDI is and what it supports.

MIDI: a crash course

MIDI (Musical Instrument Digital Interface) describes several things:

- a protocol for a serial communication format

- an electronic spec for that serial communication

- a set of connectors (5-pin DIN) and how they’re wired to support that.

It is old. The first MIDI instruments appeared around 1982-1983, and their implementation still works today. It is somewhat simple, but really robust and reliable: it is used in thousands of studios, live shows and bedrooms around the world to make electronic instruments talk to one another. The component with the most longevity is the protocol itself, which is now often transmitted over a USB connection (as opposed to the MIDI-specific DIN connections of yore). For many younger musicians, “MIDI” has just come to describe note data (as opposed to audio data) in electronic music programs, or what looks like a USB connection; five-pin DIN cables are a distant memory.

The serial protocol consists of a set of messages that get sent between MIDI devices. There are relatively few messages. They fall into a few categories, the most obvious of which are:

- timing messages, to indicate the tempo or pulse of a piece of music (a bit like a metronome), and whether instruments should be started, stopped, or reset to the beginning.

- note data: when a note is ‘on’ or ‘off’, and if it’s on, what velocity it’s been played at (the spec is designed around keyboard instruments)

- other non-note controls that are also relevant - whether a sustain pedal is pushed, or a pitch wheel bent, or if one of 127 “continuous controllers” - essentially, knobs or sliders - has been set to a particular value.

16n itself transmits “continuous controllers” - CCs - from each of its sliders, for instance.
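To make those categories concrete, here is a minimal Python sketch of what note and controller messages look like as raw bytes. The status-byte values (0x80 Note Off, 0x90 Note On, 0xB0 Control Change) follow the MIDI 1.0 spec; the function names are hypothetical, for illustration only, and this is not 16n’s own code.

```python
def _check(channel: int, *data: int) -> None:
    """Reject channels outside 1-16 and data bytes outside 0-127."""
    if not 1 <= channel <= 16:
        raise ValueError("MIDI channel must be 1-16")
    for value in data:
        if not 0 <= value <= 127:
            raise ValueError("MIDI data bytes are 7-bit (0-127)")


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Note On: status byte 0x90 plus the channel, then note number and velocity."""
    _check(channel, note, velocity)
    # Channels are 1-16 for people, but 0-15 in the low nibble of the status byte.
    return bytes([0x90 | (channel - 1), note, velocity])


def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    """Note Off: status byte 0x80 plus the channel, then note number and release velocity."""
    _check(channel, note, velocity)
    return bytes([0x80 | (channel - 1), note, velocity])


def control_change(channel: int, controller: int, value: int) -> bytes:
    """Control Change (CC): status byte 0xB0 plus the channel, then controller number and value."""
    _check(channel, controller, value)
    return bytes([0xB0 | (channel - 1), controller, value])
```

So `control_change(1, 32, 127)` produces `bytes([0xB0, 0x20, 0x7F])`: a fader on channel 1, controller 32, pushed all the way up. Every data byte is 7-bit, which is why CC values run from 0 to 127.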

There’s also a separate category of message called System Exclusive, for messages whose meaning an instrument manufacturer defines in its own implementation at the device end. One of the most common uses for ‘SysEx’ data was transmitting and receiving the “patch data” of a synthesizer - all the settings that define a specific sound. SysEx could be used to back up sound programs, or transmit them to a device, and this meant musicians could keep many more sounds to hand than their instrument could store. SysEx was also used by early samplers as a slow way of transmitting sample data - you could send an audio file from a computer, slowly, down a MIDI cable. And it could also be used to enable computer-based “editors”, whereby a patch could be edited on a large screen, and then transmitted to the device as it was edited.

Each SysEx message begins with a start byte, then a few bytes for a manufacturer to identify themselves (so that other devices on the MIDI chain can ignore messages that aren’t meant for them), a byte to define a message number, and then a stream of data, before a final end byte. What that data means is up to the manufacturer - and it’s usually described somewhere in the back pages of the manual.
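That framing can be sketched in Python. Every SysEx message starts with 0xF0 and ends with 0xF7, and every byte in between must be 7-bit (below 0x80), so nothing inside can be mistaken for a status byte. As an assumption for illustration, this sketch uses 0x7D, the ID the MIDI spec reserves for non-commercial use, as the manufacturer ID; the message-number byte and the function names are hypothetical, not 16n’s actual values.

```python
from typing import Optional, Tuple

SYSEX_START = 0xF0
SYSEX_END = 0xF7

# Assumption: 0x7D is the "non-commercial" ID from the MIDI spec; a shipping
# product would normally use its own registered manufacturer ID.
MANUFACTURER_ID = bytes([0x7D])


def build_sysex(message_id: int, payload: bytes) -> bytes:
    """Frame a payload as F0 <manufacturer id> <message id> <data...> F7."""
    body = bytes([message_id]) + payload
    if any(b > 0x7F for b in body):
        raise ValueError("every byte between F0 and F7 must be 7-bit")
    return bytes([SYSEX_START]) + MANUFACTURER_ID + body + bytes([SYSEX_END])


def parse_sysex(message: bytes) -> Optional[Tuple[int, bytes]]:
    """Return (message_id, payload) if the message is addressed to us, else None."""
    if len(message) < 3 or message[0] != SYSEX_START or message[-1] != SYSEX_END:
        return None  # not a complete SysEx frame
    inner = message[1:-1]
    if not inner.startswith(MANUFACTURER_ID):
        return None  # another manufacturer's SysEx: ignore it
    rest = inner[len(MANUFACTURER_ID):]
    if not rest:
        return None  # no message number present
    return rest[0], bytes(rest[1:])
```

A device listening on the chain can call `parse_sysex` on everything it receives and simply drop anything that returns `None`, which is how one manufacturer’s messages pass harmlessly by another’s hardware.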

Like the DX7 editors of the past, the 16n editor uses MIDI SysEx to send data to and from the hardware.

How the 16n editor uses SysEx

With all the background laid out, it’s perhaps easiest just to describe the flow of data in the 16n editor’s code.

- When a user opens the editor in a web browser, the browser waits for a MIDI interface called 16n to connect. It hears about this via a callback from the WebMIDI API.

- When it finds one, it starts polling that connection with a message meaning give me your config!

- If 16n sees a message aimed at a 16n requesting a config, it takes its current configuration, and emits it as a stream of hex inside a SysEx message: here is my config.

- The editor app can then stop polling, and instantiate a Configuration object from that data, which in turn will spin up the reactive Svelte UI.

- Once a user has made some edits in the browser, they choose to transmit the config to the device: this again transmits over SysEx, saying to the device: here is a new config for you.

- The 16n receives the config, stores it in its EEPROM, and then sets itself to use that config.

- If a 16n interface disconnects, the WebMIDI API sends another callback, and the configuration interface dismantles itself.

Each of those messages (give me your config!, here is my config, here is a new config for you) has its own message ID, and that’s the limit of the SysEx vocabulary for 16n: transmitting current state, receiving a new one, and being prompted to send current state.
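The whole conversation can be simulated in a few lines. This is a minimal sketch, not the editor’s or the firmware’s code: the three message IDs are hypothetical, and the “MIDI connection” is just two objects calling each other’s handlers.

```python
from typing import Callable, Optional

# Hypothetical message IDs: the real 16n firmware defines its own values.
REQUEST_CONFIG = 0x1F  # editor -> device: "give me your config!"
CONFIG_DUMP = 0x0F     # device -> editor: "here is my config"
NEW_CONFIG = 0x0E      # editor -> device: "here is a new config for you"


class Device:
    """Stand-in for the hardware end of the conversation."""

    def __init__(self, config: bytes, send: Callable[[int, bytes], None]) -> None:
        self.config = config
        self.send = send  # delivers (message_id, payload) to the editor

    def on_sysex(self, message_id: int, payload: bytes) -> None:
        if message_id == REQUEST_CONFIG:
            self.send(CONFIG_DUMP, self.config)
        elif message_id == NEW_CONFIG:
            self.config = payload  # a real device would also write this to EEPROM
        # Any other ID is outside the vocabulary and is ignored.


class Editor:
    """Stand-in for the browser end: it polls until a config arrives."""

    def __init__(self) -> None:
        self.config: Optional[bytes] = None

    def on_sysex(self, message_id: int, payload: bytes) -> None:
        if message_id == CONFIG_DUMP:
            self.config = payload  # stop polling and build the UI from this


editor = Editor()
device = Device(config=bytes([1] * 16), send=editor.on_sysex)

# Poll: keep asking until the device answers (here it answers straight away).
polls = 0
while editor.config is None and polls < 10:
    device.on_sysex(REQUEST_CONFIG, b"")
    polls += 1

# Push an edited config back to the device.
device.on_sysex(NEW_CONFIG, bytes([2] * 16))
```

Polling, rather than a single request, covers the case where the device is still booting when the browser first notices it; the bounded loop is just a guard for the simulation.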

With all that in place, the changes to the firmware are relatively few. Firstly, at first boot, it now checks whether the EEPROM looks blank, and if it is, 16n stores a “default” configuration in EEPROM before reading it. Then there’s just some extra code to listen for SysEx data, process and store it on arrival, and transmit it when asked.
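Here is a sketch of that boot-time logic, with the EEPROM simulated as a bytearray. The assumptions are loud ones: that erased EEPROM reads back as 0xFF, that the config sits at offset 0 and is 32 bytes long, and that the defaults below (every fader on channel 1, CCs 32 to 47) are illustrative rather than 16n’s real ones.

```python
# Assumptions: erased EEPROM reads back as 0xFF, the config lives at
# offset 0, and these defaults are illustrative only.
CONFIG_SIZE = 32
DEFAULT_CONFIG = bytes([1] * 16) + bytes(range(32, 48))  # 16 channels, then 16 CCs


def looks_blank(eeprom: bytearray) -> bool:
    """True if every byte of the config area is still in its erased state."""
    return all(b == 0xFF for b in eeprom[:CONFIG_SIZE])


def load_config(eeprom: bytearray) -> bytes:
    """At boot: seed defaults into a blank EEPROM, then read the config back."""
    if looks_blank(eeprom):
        eeprom[:CONFIG_SIZE] = DEFAULT_CONFIG
    return bytes(eeprom[:CONFIG_SIZE])


def store_config(eeprom: bytearray, config: bytes) -> None:
    """On receiving a new config over SysEx: persist it for the next boot."""
    if len(config) != CONFIG_SIZE:
        raise ValueError("unexpected config size")
    eeprom[:CONFIG_SIZE] = config
```

Because a firmware update leaves the EEPROM contents alone, `load_config` only ever falls back to the defaults once; after that, whatever was last stored survives reflashing.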

What this means for users

Initially, this is a “breaking change”: at first install, a 16n will go back to a ‘default’ configuration. Except it’s then very quick to re-edit the config in a browser to what it should be, and transmit it. And from that point on, any configuration will persist between firmware upgrades. Also, users can store JSON backups of their configuration(s) on their computer, making it easy to swap between configs, or as a safeguard against user error.
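JSON backups mean converting between the raw bytes the device sends and something a person can read and edit. A minimal sketch, assuming (hypothetically) a payload of 16 channel bytes followed by 16 CC bytes; the real 16n payload layout may differ, and the field names are illustrative.

```python
import json

FADERS = 16  # assumption: one channel byte and one CC byte per fader


def config_to_json(payload: bytes) -> str:
    """Turn a raw config payload into a human-readable JSON backup."""
    channels = payload[:FADERS]
    ccs = payload[FADERS:2 * FADERS]
    faders = [{"channel": ch, "cc": cc} for ch, cc in zip(channels, ccs)]
    return json.dumps({"faders": faders}, indent=2)


def json_to_config(text: str) -> bytes:
    """Rebuild the raw payload from a JSON backup, ready to send back."""
    faders = json.loads(text)["faders"]
    if len(faders) != FADERS:
        raise ValueError(f"expected {FADERS} faders, got {len(faders)}")
    return bytes(f["channel"] for f in faders) + bytes(f["cc"] for f in faders)
```

Keeping the backup per-fader, rather than as two parallel arrays, makes a hand-edited file much harder to get out of step.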

The new firmware also makes it much easier to distribute the firmware as a binary, which is easier to install: run the loader program, drag the hex file on, and that’s that. No compilation. The source code is still available for anyone who wants it, but there’s no need to install the Arduino IDE to modify a 16n’s settings.

As well as the settings for what MIDI channel and CC each fader transmits, the editor lets users set configuration flags such as whether the LED should blink on data transmission, or how I2C should be configured. We’ve still got some bytes free to play with there, so future configuration options that should be user-settable can also be exposed like this.
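Boolean options like these pack neatly into a single byte as bit flags. A sketch under assumptions: the bit positions and flag names are hypothetical, and the I2C setting is reduced to a single on/off choice for illustration. Because any byte inside a SysEx message must stay below 0x80, one byte can carry at most seven such flags.

```python
# Hypothetical bit positions; bit 7 must stay clear for SysEx, so only bits 0-6 are usable.
FLAG_LED_ON_ACTIVITY = 0b0000_0001
FLAG_I2C_LEADER = 0b0000_0010


def pack_flags(led_on_activity: bool, i2c_leader: bool) -> int:
    """Combine boolean settings into one 7-bit flags byte."""
    flags = 0
    if led_on_activity:
        flags |= FLAG_LED_ON_ACTIVITY
    if i2c_leader:
        flags |= FLAG_I2C_LEADER
    return flags


def unpack_flags(flags: int) -> dict:
    """Read boolean settings back out of a flags byte."""
    return {
        "led_on_activity": bool(flags & FLAG_LED_ON_ACTIVITY),
        "i2c_leader": bool(flags & FLAG_I2C_LEADER),
    }
```

Adding a new option later means claiming an unused bit; older editors that don’t know about it simply leave it untouched.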

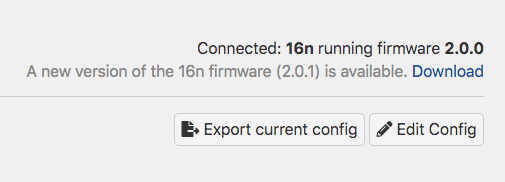

The 16n editor showing an available firmware update

Finally, because I store the firmware version inside the firmware itself, the device can communicate this to the editor, and thus the editor can alert the user when there’s a new firmware to download, which should help everybody stay up-to-date: particularly important with a device that has such diverse users and manufacturers.
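The update check boils down to comparing two version numbers. A minimal sketch, assuming (hypothetically) that the device reports its version as three bytes (major, minor, patch) and that the latest release is known as a string like "2.0.1"; the function names are illustrative.

```python
from typing import Tuple

Version = Tuple[int, int, int]


def parse_version(text: str) -> Version:
    """'2.0.0' -> (2, 0, 0); numeric parts, so 2.10.0 sorts after 2.9.3."""
    major, minor, patch = (int(part) for part in text.split("."))
    return (major, minor, patch)


def version_from_payload(payload: bytes) -> Version:
    """Hypothetical layout: the first three payload bytes are major, minor, patch."""
    return (payload[0], payload[1], payload[2])


def update_available(device_version: Version, latest_release: str) -> bool:
    """Tuples compare element by element, which matches version ordering."""
    return device_version < parse_version(latest_release)
```

Comparing numbers rather than strings matters here: as plain strings, "2.10.0" sorts before "2.9.3".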

None of this is a particularly new pattern. Novation, for instance, use this approach to transfer patch settings, samples, and even firmware updates to their recent synthesizers via their Components tool. It’s a very user-friendly way of approaching this task, though: it’s reliable, uses a tool that’s easy to hand, and because the browser can read configurations from the physical device, you can adjust your settings on any computer to hand, not just your own.

I also think that by making configuration an easier task, more people will be willing to play with or explore configuration options for their device.

The point of this post isn’t just to talk about the code and technology that makes this interaction possible, though; it’s also to look at what it feels like to use, the benefits for users, and interactions that might be possible. It’s an unusual interaction - perhaps jarring, or surprising - to configure an object by firing up a web browser and “speaking” directly to it. No wifi to set up, no hub application, no shared password. A cable between two objects, and then a tool - the browser - that usually talks to the wireless world, not the wired. WebUSB enables similarly weird, tangible interactions to the one I’ve built here, but with a more flexible API.

I think this is an unusual, interesting and empowering interaction, and certainly something I’ll consider for any future connected devices: making configuration as simple and welcoming as possible, using tools a user already understands.

VOIPcards, or, On Solutionising

25 March 2020

A bit over a week ago, I made a small tool - or toy, depending on your perspective, or the time of day - called VOIPcards. I demonstrated it on my public Twitter account:

I made some flashcards for you to hold up on videochat: https://t.co/tSPIEWqGWu

You can install to your phone's homescreen, and it should work offline.

Ideal for when you want to comment, but stay quiet - or perhaps tell someone else to pipe down for a bit. pic.twitter.com/6525w9wbNY

— Tom Armitage (@tom_armitage) March 17, 2020

It was made after my friend Alice showed pictures of her backwards post-it notes she’d hold up to her videoconference. I thought about making a tool for having on-demand backwards flashcards for video calls. A small toy to make, and thus, some time to practice some modern development practices, make a PWA and put myself to making something during Interesting Times.

Since then, a lot of people have liked it, or shared it, or been generally enthusiastic. Several have submitted patches and, most notably, translations, to it. And I’ve added some new features: white on black text, choice of skin-tone for emojis, and settings that persist between sessions.

I’m not sure it’s any good, though.

I don’t think it’s bad, though, and if it’s making a difference to your remote practice, that’s great. But I don’t think it’s the right tool for what it sets out to do.

And here’s the thing: it wasn’t meant to be. In some ways, the point of VOIPcards is as much a provocation as it is a thing for you to use. It says: here are things people sometimes need to say. Here are things people sometimes need to do, to support colleagues on a call. Here are things people need to do because it’s fun.

This is why I think of it as between a tool and a toy: it’s fun to use for a bit, it’s a provocation as to the kind of things we need alongside streaming video, and if you put it down when you’re bored (and your behaviour may have changed) that is fine.

The single most important card in the deck is a tie between “You have been talking a long time” and “Someone else would like to speak”. These are useful and important statements to make in face-to-face meetings, but they’re doubly important when there are twelve of you on a Zoom call. Sometimes, the person with better video quality noticing that someone wants to speak, and amplifying that demand, is good.

If what you come away from VOIPcards with is not a tool to use, but a better way of thinking about your communication processes, that’s probably more important than using a fun app.

But: equally, if you do find it useful, this isn’t a slight. That’s great! I’m glad it works for you.

I think the reason it’s popular is that people respond to the idea of it. The idea of the product has immediate appeal - perhaps more so than the reality of it. And that appeal is so immediate, so instant, that it makes me distrust it. Good ideas don’t just land instantly: they stand up to scrutiny. I’m really not sure VOIPcards does. At the same time, there’s value in the idea because of what it makes people think, how it makes them subsequently behave. And I think some of that value really does come down to it being real. A product you can try, fiddle with, demonstrate, lands stronger than a back-of-a-napkin idea - even if it turns out to be not much more than the idea.

Another obvious smell for me is that I don’t use the product. I enjoyed making it, and I was definitely thinking about other people’s needs - however imaginary - when making it. But it’s not for me, which makes it hard to make sensible decisions about it.

(What do I do instead? Largely, hand gestures and big facial expressions: putting a hand up to speak, holding a palm up to apologise for speaking over someone, lots of thumbs-ups. It puts me in mind of the way Daniel Abraham and Ty Franck describe the way the “Belters” - first-generation space dwellers - communicate in their Expanse novels. Belters talk with lots of broad hand-and-body gestures, rather than facial ones, because the culture developed communication techniques that worked whilst wearing a spacesuit. No-one can see an eyeroll through a visor, but everyone can see theatrical shrugs and sweeping hand gestures. I liked that. It feels like we’re all Belters on voice chat. Subtlety goes out the window and instead, a big hand giving a thumbs-up into a camera is a nice way to indicate assent without cutting into somebody’s audio.)

When I’m being most negative about VOIPcards, it is because they feel like solutionising - inventing a solution for a hypothetical problem. In this case, though, the problem is definitely something everybody has felt at some point. But this solution is perhaps too immediate, and came too much from the “implementation” end of the brain to be the robust, appropriate answer to said problem.

There’s a lot of solutionising around right now, and I’m largely wary of it all. The right skills at the moment are not always leaping to solutions, working out what you can offer others, guessing at what might happen, what you might expect, and how you can respond to that. I think that the right skills to have - and the right tone to strike right now - are to be responsive, and resilient. Dealing with the unexpected, the unknown unknowns. Not solving the problems you can easily imagine, but getting ready to solve the ones you can’t.

Still: there is also value in making things to make other people think, rather than do. The win isn’t necessarily the product, but the behaviour it inspires. If what people take away from the cards is some time spent thinking more carefully about their communications, rather than yet another tool to use: that’s a win for me.

(You can try VOIPcards here. It works best on a mobile phone, and you can install it to your homescreen as an app.)

What I do

9 March 2020

I am a technologist and designer.

I make things with technology, and I understand and think about technology by making things with it.

A lot of my work starts with uncertainty: an unknown field, or a new challenge, and the question: what is possible? What is desirable? From there, the work to build a product leads towards certainty: functioning code, a product to be used. I have worked on a lot of shipped, production code, but my best work is not only manufacture and delivery; it also encompasses research, exploration, technical discovery, and explanation.

Research could involve investigating prior art, or competitors, or using technical documentation to understand what the edges of tools or APIs are - beginning to map out the possible.

Exploration involves sketching and prototyping in low- and high-fidelity methods to narrow down the possible. That might also include specific technical discovery - understanding what is really possible by making things, compared to just reading the documentation; a form of thinking through making.

Delivery involves producing production code on both client and server-side (to use web-like terminology), as well as co-ordinating or leading a development team to do so, through estimation and organisation.

And finally, explanation is synthesis and sense-making: not only doing the work, but explaining the work. That could be ongoing documentation or reporting, contextualising the work in a wider landscape, or demonstrating and documenting the project when complete.

How does this work happen?

I work best - and frequently - within small teams of practice. That doesn’t necessarily mean small organisations, though - I’ve worked for quite large organisations too. What’s important is that the functional unit is small, self-contained, and multidisciplinary. I have built or led small teams of practice to achieve an outcome.

I’ve delivered work with a variety of processes. My favourite processes often resemble design practice more than, say, formal engineering: they are lightly-held, built as much around trust as around formal rules. Such processes are constraints, and they serve to help a team work together, but they are also flexible and adaptable.

A colleague recently described some of the team’s work on a recent project as “a lot of high-quality decisions made quickly”, and I take pride in that description; I hope to bring that sensibility to all my work.

You can find out more about past projects. If the above sounds of value to you, do get in touch with me; I am available for hire.

New Work: An Introduction to Coding and Design

29 January 2020

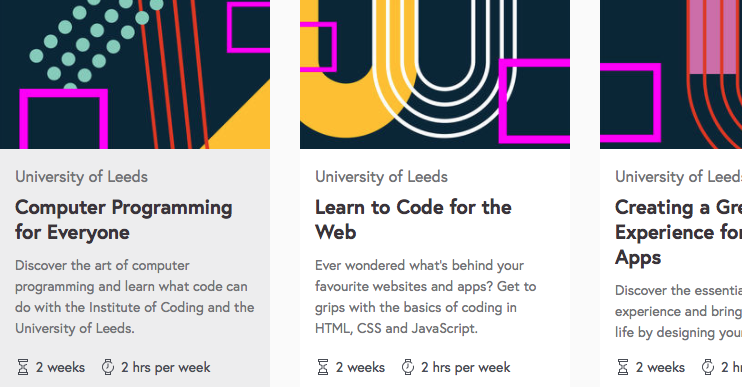

In the second half of 2019, I worked on a project I called Longridge. This project was to write three online courses in a series called An Introduction to Coding And Design, for a programme of courses from the Institute of Coding launching in 2020. I worked with both FutureLearn - the MOOC platform they’re hosted on - and the University of Leeds to write and deliver the courses.

Those courses are now live at FutureLearn, as of the 27th January 2020!

The courses are designed as two-week introductions to topics around programming and design for beginners interested in getting into technology, perhaps as a career.

I’ve written up the project in much more detail here; you can read my summary of the work here. I cover some of the reasoning behind the syllabus, the choice of topics, and the delivery. And, most importantly, I thank the collaborators who worked with me throughout the process, and collaborated on the courses.

Latest work case studies, summer 2019 edition

11 July 2019

The case studies on this website were getting a little stale. No more! I’ve just published lots of new case studies of individual projects over the past three years.

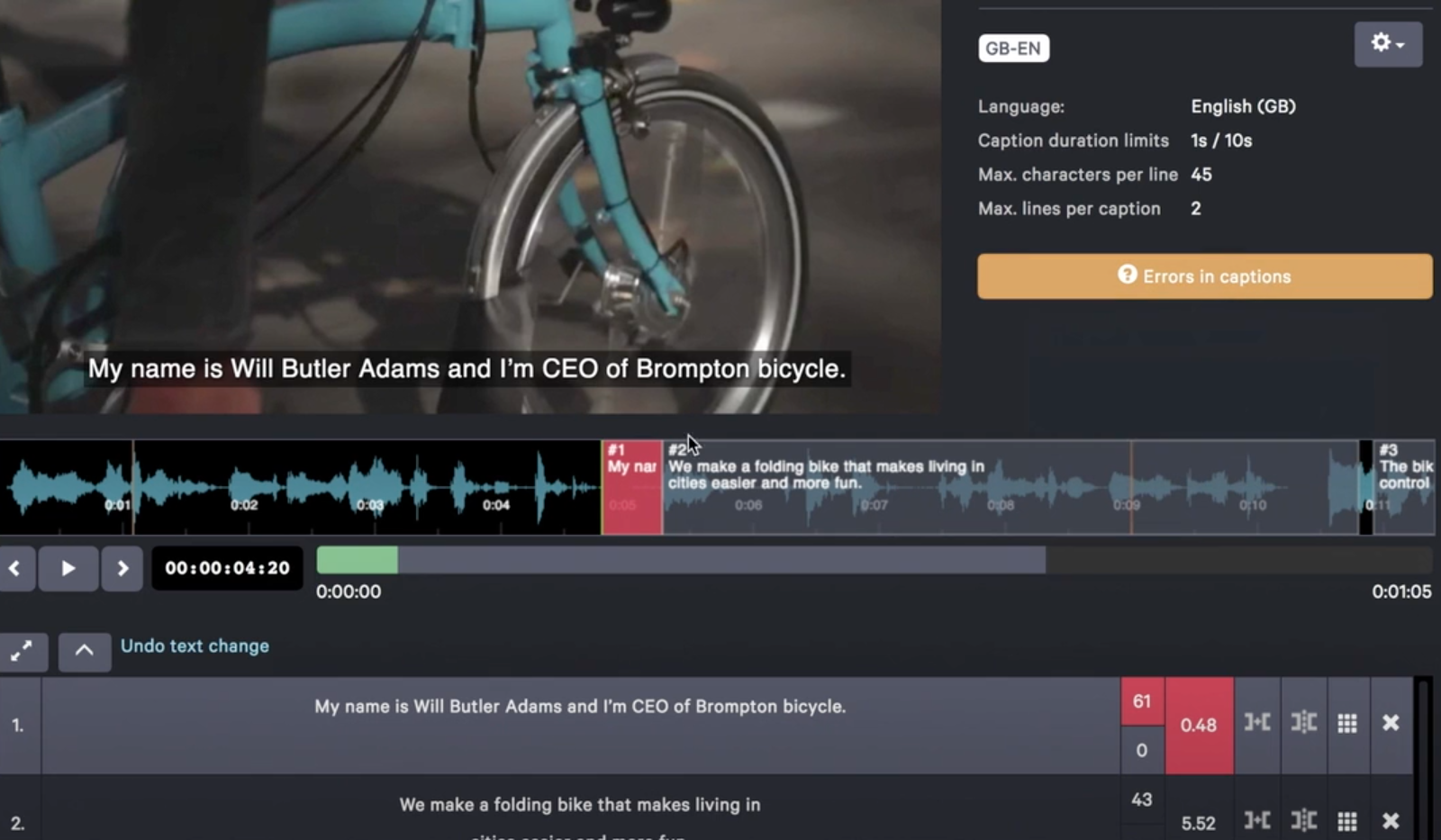

The big headline that I’m most keen to talk about is a long, detailed writeup of my work on CaptionHub - a project I worked on for 3.5 years, known in this feed as Selworthy. CaptionHub is an online tool for collaboratively captioning and subtitling video. I served as technical lead and pathfinder, taking the initial idea - the “what if?” - to a prototype and beyond into a shipping product, whilst the team grew and the product acquired clients. The write-up is detailed not just because of the length of time I worked on the project, but because of the way the product changed as it developed and grew in scale. It’s a project that shows the breadth of my capabilities well, and the finished product is something I’m very proud of.

But there’s lots more in there too. Highlights include: an open source tool for musicians; teaching on the Hyper Island MA; building a digital musicbox; creating a Twitter bot for an installation at the Wellcome Collection.

The write-ups all include extended thought on process, and, of course, link back to the relevant weeknotes that I wrote during the process.

You can find out more at the updated projects list.

I’m currently looking for new projects to work on: technical leadership, early stage exploration, communication of ideas, are all areas I’m keen to continue in. Topic areas I’m particularly interested in include building tools for creatives and professionals, the bridge between the physical and digital, and audio and video. I’ve written more about my capabilities here.

“Designing Circuit Boards” masterclass in London

2 August 2017

I’m running a course called Designing Circuit Boards in central London in October.

Maybe you’ve looked at a tangle of jumper wires on a breadboard and wondered how to take your electronics project beyond that point. Perhaps you’ve got an installation made out of lots of Arduinos, shields, and breakout boards and you’d like to make it more reliable and easier to reproduce. Or perhaps you’ve got a prototype on your desk that you’d like to take through the first stages of manufacturing: this masterclass will give you the tools to embark on that process.

Over four evening sessions (about 90-120 minutes each), we’ll take a project on a breadboard and learn how to design and fabricate a two-layer printed circuit board for it. This is a pragmatic course: it doesn’t presume any knowledge of CAD software, or any formal electronics training. We’ll be learning techniques and approaches, not just how to drive a piece of software.

The course is what I’d call intermediate-level. Some very basic experience of electronics – perhaps some tinkering with microcontroller projects (eg Arduino) on breadboards – is about the level of experience you need to enter. Maybe you’ve made complete projects or installations out of such technology. But I’m assuming that most people will have no experience of circuit board design.

We’ll be using Autodesk EAGLE as our primary tool, because it’s cross-platform, well-supported by the maker community, and free for our purposes.

You can find out more and sign up at the Somerset House website.

And if you’ve got any questions, you can email me.

TA In Berlin November 3rd-8th

2 November 2016

I’m going to be in Berlin between the 3rd and 8th November. I’m attending Ableton’s Loop – purely as an attendee, to put my brain in a new context. I’d been wanting to go for a few years but circumstances conspired against me, and it felt especially relevant given this year’s work on instrument-building. I’m mainly at the conference, but I’ve also got some time for both sightseeing and saying hello.

So if you’re at Loop, or interested in saying hello regardless, drop me a line; there might be time to meet up. I’ve a bit of time on Thursday, Monday and Tuesday.

New Work: Empathy Deck

19 September 2016

Empathy Deck is an art project by Erica Scourti, commissioned by the Wellcome Collection for their Bedlam: The Asylum and Beyond exhibition. It’s also the project I’ve been referring to as Holmfell. I worked on building the software behind the Empathy Deck with Erica over the summer.

To quote the official about page:

Empathy Deck is a live Twitter bot that responds to your tweets with unique digital cards, combining image and text.

Inspired by the language of divination card systems like tarot, the bot uses five years’ worth of the artist’s personal diaries intercut with texts from a range of therapeutic and self-help literatures. The texts are accompanied by symbols drawn from the artist’s photo archive, in an echo of the contemporary pictographic language of emoticons.

Somewhere between an overly enthusiastic new friend who responds to every tweet with a ‘me-too!’ anecdote of their own and an ever-ready advice dispenser, the bot attempts an empathic response based on similar experiences. It raises questions about the automation of intangible human qualities like empathy, friendship and care, in a world in which online interactions are increasingly replacing mental health and care services.

I’m very pleased with how the bot turned out; seeing people’s responses to it is really buoying – they’re engaging with it, both what it says but also its somewhat unusual nature. It looks like no other bot I’ve seen, which I’m pleased with – it’s recognisable across a room. It’s also probably the most sophisticated bot I’ve written; I don’t want to focus too much on how it works at the moment, but suffice to say, it mainly focuses on generating prose through recombination rather than through statistics. Oh, and sometimes it makes rhyming couplets.

There’ll no doubt be a project page forthcoming, but for now, I wanted to announce it more formally. Working with Erica was a great pleasure – and likewise the Wellcome, who were unreservedly enthusiastic about this strange, almost-living software we made.

Systems Literacy at The Whitechapel Gallery

25 January 2016

This coming Saturday – the 31st January, 2016 – I’ll be in conversation at the Whitechapel Gallery with James Bridle and Georgina Voss about Systems Literacy. It’s a topic I’ve spoken on a few times, through the lens of design, games, and play, and I’m looking forward to our conversation:

Artist James Bridle brings together speakers across disciplines to discuss the theme of systems literacy, the emerging literacy of the 21st Century: namely the understanding that we inhabit a complex, dynamic world of constantly-shifting relationships, made explicit but not always explained by our technologies.

In the context of the exhibition Electronic Superhighway 2016-1966, which features Bridle’s work, the conversation explores how the ability to see, understand and navigate these systems and the related technology is key to artistic, social and political work in an electronic world.

Joining the MV Works 2015-16 cohort

4 December 2015

I’m pleased to announce Richard Birkin and I have been selected as part of the mv.works 2015-2016 cohort. We’ve received funding to work until April 2016 on a new iteration of Twinklr – our physical/digital music box.

We’re going to spend the time iterating on the hardware, software, and enclosure, adding functionality and hopefully making the build more repeatable. Along the way, we’ll be documenting our progress, and exploring the opportunities the object – the instrument – affords.

We’re really excited – this news comes just as we wrap up the first phase of exploratory work. It’s a hugely exciting opportunity – the space to design an instrument for performance and composition, to build an object to create with, and to develop this idea that’s been percolating slowly. Of course, we’ll share all our progress along the way. It’s going to be good.

Future Speak – or ‘so I made a radio programme’

15 April 2015

I’ve been going on a bit about a project called Periton, which seems to have involved train travel, interviews, and recording things. That’s because Periton is a radio programme: a thirty-minute documentary called Future Speak, about just what all the fuss about learning to code is, and what the value of programming is to society. Is it just about making more Java developers? Or is it about more than that?

Look closely and you’ll see that computer code is written all over our offices, our homes and now in our classrooms too.

The recent Lords’ Digital Skills report says the UK’s digital potential is at a make or break point, with a skills gap to be plugged and a generation gap to be bridged.

As technologist Tom Armitage argues, there’s also a leap of the imagination to be made, to conceive of the wider benefits of reading, writing, and even thinking in code.

Future Speak was first broadcast on Tuesday 14th April; it’s repeated at 9pm on Monday 20th April, and is available now at the BBC website. (It’s also Documentary of the week, so should shortly be available for download in podcast form).

Documentaries are hard work, and they’re a team effort: massive thanks to all at Sparklab, particularly Kirsty McQuire who produced the programme. (What ‘producer’ means on a documentary like this is, if you don’t know: doing all the location recording, background interviews, booking studios, editing, pulling the script together, spending a long while discussing things with me and talking me out of bad ideas, and generally being very patient with a first-time presenter.) Thanks also to David Cook who originally suggested the idea many months ago.

The V&A Spelunker

6 January 2015

At the end of 2014, I spent a short while working with George Oates of Good, Form & Spectacle on what we’ve called the V&A Spelunker.

We sat down to explore a dataset of the Victoria & Albert Museum’s entire collection. The very first stages of that exploration were just getting the data into a malleable form – first into our own database, and then onto web pages as what got called Big Dumb Lists.

From there, though, we started to twist the collection around and explore it from all angles – letting you pivot the collection around objects from a single place, or made of a single material.

That means you can look at all the Jumpers in the collection, or everything from Staffordshire (mainly ceramics, as you might expect), or everything by Sir John Soane.

And of course, it’s all very, very clickable; we’ve spent lots of time just exploring and excitedly sending each other links to the latest esoteric or interesting thing we’ve found.

George has written more on the V&A’s Digital Media blog. She describes what came to happen as we explored:

In some ways, the spelunker isn’t particularly about the objects in the collection — although they’re lovely and interesting — it now seems much more about the shape of the catalogue itself. You eventually end up looking at individual things, but, the experience is mainly about tumbling across connections and fossicking about in dark corners.

Exploring that idea of the shape of the catalogue further, we built a visual exploration of the dataset, to see if particular stories about the shape of the catalogue might leap out when we stacked a few things up together – namely, setting when objects were acquired against when they are from, and how complete their data is. You quickly begin to see objects acquired at the same time, or from the same collection.

This is very much a sketch that we’ve made public – it is not optimised in so many ways. But it’s a useful piece of thinking and as George says, is already teasing out more questions than answers – and that absolutely felt worth sharing.

Do read George’s post. I’m going to be writing a bit more on the Good, Form & Spectacle Work Diary about the process of building the Spelunker later this week. It’s the sort of material exploration I really enjoy, and it’s interesting to see the avenues for further ideas opening up every time you tilt the data around a little.