CaptionHub

Building the world's most advanced subtitling platform.

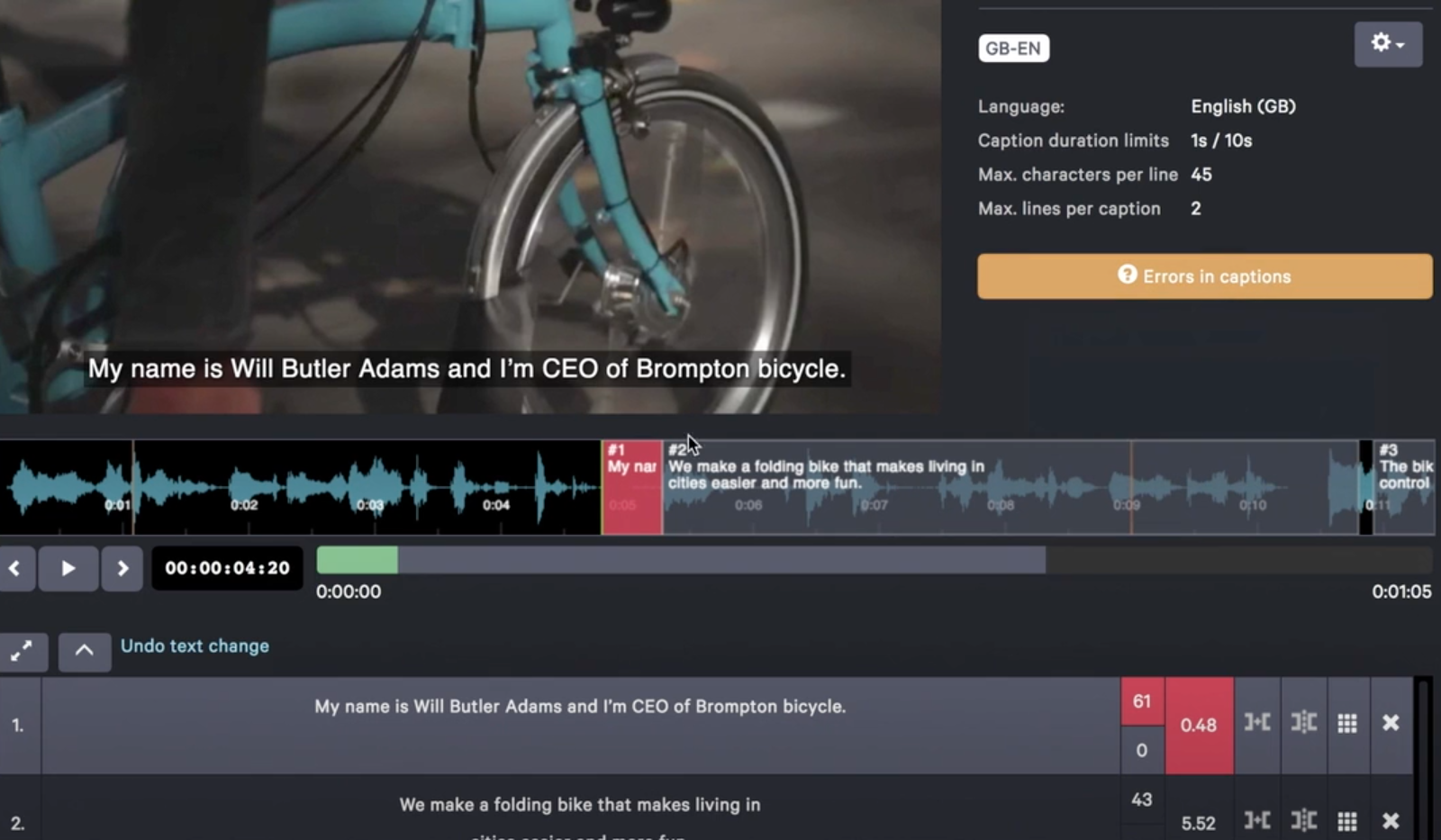

CaptionHub is an online platform for subtitling video. It allows teams to generate and edit captions inside a web browser, previewing them in a real-time editor. They can edit captions and adjust their timing using a slippy timeline, similar to those in desktop video editors. When they’re happy with a preview, users can emit caption data in industry-standard file formats, or render out video with the captions burned-in. It also allows translators to easily translate original captions, editing subtitle timings themselves to reflect language-specific changes. Because it all runs in the browser, it can be accessed from anywhere in the world, letting teams with in-territory staff collaborate around the clock - and it has clear workflow tools to enable that collaboration. CaptionHub can even generate your original captions in one of 28 languages using speech recognition, producing captions that break at natural points in your video, such as on scene transitions or sentence breaks.

I was technology lead on CaptionHub from its inception until the end of 2018; it made frequent appearances in my Weeknotes as a project called ‘Selworthy’. I began as the sole developer and designer on the prototype application, and stayed with it as it grew into a successful commercial product, leading the development team that formed around it and building up my client's in-house technology capacity.

The project began in 2015, with a very specific problem that needed solving.

The Problem

Neon are a London-based VFX and motion graphics studio. Some of their business-to-business work included producing foreign-language versions of client content, with foreign-language subtitles. The process they used at the time - common to many studios - was taking translated content from a translation agency, letting a video editor apply the subtitles to video content, and then taking feedback from the translation agency and client on the final video, until it was signed off.

One of their clients came to them with a new request: would they be able to subtitle an internal corporate video into eleven languages, with a 24-hour turnaround time, every week? Early tests with a roomful of translators suggested that it might be possible… but also that with the tools they had, it was a high-effort solution. Their suspicions were aroused: surely there was a better way of performing this task?

Would it be possible to build some kind of browser-based tool that would let translators work quicker, remotely, and empower them to make decisions around text and timing that they probably ought to be making anyway? Frequently, errors that a video editor won’t notice - spelling errors in a language they don’t speak (especially one with a non-Latin script such as Arabic, Hebrew, or Japanese), or captions that, once translated into a new language, exceed the house style for characters-per-line - are exactly the sort of thing a translator is equipped to catch.

Neon approached me with this idea, and I concurred: the state of the HTML5 web browser in 2015 was such that we had a good chance of being able to do this inside Chrome or Safari, using off-the-shelf tools and libraries. They initially hired me to build a proof-of-concept.

The Prototype

Our initial prototype focused on the specific needs of the initial target client. For the prototype, we only needed to accept the video and timed text formats that they specified, and we only had to produce the file formats they required, so we built the system around those assumptions. Where possible, I leaned on cloud services to streamline the prototype development process, deploying code to Heroku, and using cloud-based image processors and video encoders. That left me free to focus on the meat of the prototype.

During this prototyping phase, I built the very first pass at our ‘rich editor’: the system of a timeline and a table to let users edit captions, adjust their timing on a timeline, and see a realtime preview, the timeline staying in sync with video playback.

This was built out of a Backbone-driven set of views, all rendering the same caption data. The table of captions was a Backbone view… and so was the canvas element that made our timeline. requestAnimationFrame triggered re-renders, meaning our canvas was a view rendering at around 60fps. (Why Backbone? Well, it was 2015, and at that point, it seemed a mature and stable framework for a data-bound product).
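To make the idea of several views over one set of caption data concrete, here is a minimal sketch in Python rather than Backbone's JavaScript. Every class and method name is hypothetical: a collection notifies subscribers when a caption changes, a table view re-renders on that notification, and a timeline view is redrawn on every tick (standing in for a requestAnimationFrame callback), mapping caption times to pixels around a centred playhead.

```python
# A minimal, hypothetical sketch of several views bound to one caption model,
# written in Python as a stand-in for the Backbone views described above.
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Caption:
    start: float  # seconds
    end: float    # seconds
    text: str


class CaptionCollection:
    """Holds the shared caption data and notifies subscribers of changes."""

    def __init__(self, captions: List[Caption]):
        self.captions = captions
        self._listeners: List[Callable[[], None]] = []

    def on_change(self, callback: Callable[[], None]) -> None:
        self._listeners.append(callback)

    def update(self, index: int, **fields) -> None:
        for name, value in fields.items():
            setattr(self.captions[index], name, value)
        for callback in self._listeners:
            callback()


class TableView:
    """Re-renders its rows only when the collection reports a change."""

    def __init__(self, collection: CaptionCollection):
        self.collection = collection
        self.rows: List[str] = []
        collection.on_change(self.render)
        self.render()

    def render(self) -> None:
        self.rows = [f"{c.start:.2f}-{c.end:.2f} {c.text}"
                     for c in self.collection.captions]


class TimelineView:
    """Redrawn every animation frame; tick() stands in for a requestAnimationFrame callback."""

    def __init__(self, collection: CaptionCollection,
                 px_per_second: float = 100.0, width_px: int = 800):
        self.collection = collection
        self.px_per_second = px_per_second
        self.width_px = width_px
        self.boxes: List[Tuple[int, int]] = []

    def tick(self, playhead: float) -> None:
        # Keep the playhead centred, so the visible window slides with playback.
        left_edge = playhead - (self.width_px / 2) / self.px_per_second
        self.boxes = [
            (round((c.start - left_edge) * self.px_per_second),
             round((c.end - left_edge) * self.px_per_second))
            for c in self.collection.captions
        ]
```

Redrawing the timeline on every frame, rather than only on change events, is what keeps it in step with playback: the playhead moves even when no caption does.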

I also built our first pass at workflow tools for this process. In doing so, we began to develop our understanding of the problem domain, and to grow our own domain language around the project (much of which proved to be surprisingly robust, and survives internally to this day).

The prototype worked end-to-end, making translation of videos straightforward, and emitting files in standard formats that were easily imported into After Effects or Final Cut.

1.0: Turning it into a Product

We had definitely proved our system worked, and the client was enthusiastic, but there was more to be done to make a real product - and appetite within Neon to deliver this. I stayed on to continue working on the project, and we started on the long list of tasks to take our prototype to a 1.0, including:

- replacing our reliance on cloud services so the software could operate on corporate hosting, inside the firewall. Crucially, that meant replacing the cloud video encoders with our own video encoding service. I built an internal webservice to handle queueing and processing these tasks in the background, wrapping ffmpeg and integrating seamlessly with our existing primary application. This service encoded video for the realtime preview, generated thumbnails, and similar, notifying the end-user when their encodes were complete.

- adding a visible audio waveform on the timeline, generated by our encoder tool. This made cutting captions to video much easier - it was instantly possible to see speech starting or stopping, and mark the ins and outs of captions to match.

- adding to our feature set, from new tweaks and functionality in the editor, to better validation of captions against client specifications (such as the number of characters per line, or the minimum time a caption stays on screen)

- greatly increasing the number of data and video types we could import and export

- supporting ways of adding original captions beyond ‘already having a timed text file’; sometimes, users might need to start from a blank slate, or a plain text file.
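The waveform described above implies a step that turns decoded audio into something small enough to draw on a timeline. A common approach, sketched here in Python and not necessarily what the encoder tool did, is to split the samples into buckets and keep each bucket's minimum and maximum. It assumes the audio has already been decoded to mono 16-bit little-endian PCM, for instance with `ffmpeg -i input.mp4 -vn -ac 1 -ar 8000 -f s16le pipe:1`; the function name and bucket size are illustrative.

```python
# Hypothetical sketch: reduce raw PCM audio to (min, max) peaks for drawing.
import struct
from typing import List, Tuple


def waveform_peaks(pcm: bytes, samples_per_bucket: int) -> List[Tuple[int, int]]:
    """Return one (min, max) pair per bucket of samples_per_bucket samples.

    pcm is assumed to be mono, signed 16-bit, little-endian audio.
    """
    count = len(pcm) // 2  # two bytes per sample; a trailing odd byte is ignored
    samples = struct.unpack_from(f"<{count}h", pcm)
    peaks = []
    for start in range(0, count, samples_per_bucket):
        bucket = samples[start:start + samples_per_bucket]
        peaks.append((min(bucket), max(bucket)))
    return peaks
```

Choosing `samples_per_bucket` as the sample rate divided by the frame rate (8000 / 25 = 320) would give one peak pair per video frame, which lines the waveform up with frame-based caption edits.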
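Validation against a client specification can be as simple as a list of rules checked per caption. The Python sketch below is illustrative only: the spec fields (`max_chars_per_line`, `max_lines`, `min_duration_frames`) and their defaults are hypothetical examples of the kinds of rules mentioned above, with durations measured in frames.

```python
# Hypothetical sketch: check captions against a client's house style.
from dataclasses import dataclass
from typing import List


@dataclass
class CaptionSpec:
    max_chars_per_line: int = 42
    max_lines: int = 2
    min_duration_frames: int = 25


@dataclass
class Caption:
    in_frame: int
    out_frame: int
    text: str  # lines separated by "\n"


def validate(caption: Caption, spec: CaptionSpec) -> List[str]:
    """Return human-readable problems; an empty list means the caption passes."""
    problems = []
    lines = caption.text.split("\n")
    if len(lines) > spec.max_lines:
        problems.append(f"{len(lines)} lines (max {spec.max_lines})")
    for number, line in enumerate(lines, start=1):
        if len(line) > spec.max_chars_per_line:
            problems.append(
                f"line {number} has {len(line)} characters (max {spec.max_chars_per_line})")
    duration = caption.out_frame - caption.in_frame
    if duration < spec.min_duration_frames:
        problems.append(f"on screen for {duration} frames (min {spec.min_duration_frames})")
    return problems
```

Python's `len()` counts Unicode code points, which may not match how a given client counts characters in scripts that use combining marks, so a production rule may need its own counting function.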

Beyond 1.0: growing functionality, becoming CaptionHub

Our 1.0 was a success: we licensed it to the client, hosted on their premises, and the product started being used in production. Now Neon were keen to develop this product into a software-as-a-service offering, primarily hosted in the cloud, for a much wider range of clients.

This was the point where our previously un-named product became CaptionHub proper, both in terms of the maturity of the product, and in terms of a new company being developed to support it.

Whilst aiding translators was a key feature for us, for many potential users, supporting single-language captions was enough (and enough, on its own, to sell them on the utility of the product). We improved the experience of single-language use, refining the UI and UX for users working in single languages.

Around the core captioning features, we also added new flexibility to our workflow features, making it easier for teams to integrate CaptionHub with their own processes.

I also developed two key features that became integral to CaptionHub.

Firstly, I rewrote the entire internals of the captioning system to guarantee that the captions we produced were frame-accurate. That’s a challenge, given that the HTML5 video element has no notion of frames: it only deals in timestamps, at roughly millisecond precision. But frame accuracy matters, because captions often break on scene transitions - and if a caption hangs around a frame too long, something is visibly wrong, even if the viewer can’t say what it is. By ensuring that the smallest unit of time CaptionHub’s internals ever dealt with was a frame, and by making sure the UI enforced that logic, we could guarantee that CaptionHub would produce frame-accurate captions - a hugely important feature for many video producers.
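One way to enforce "the frame is the smallest unit of time" is to convert every timestamp coming out of the video element into a whole frame number straight away, and only convert back when seeking. The Python sketch below assumes frame rates are stored as exact fractions (so 29.97 fps is 30000/1001, avoiding floating-point drift); the function names are hypothetical, and this illustrates the principle rather than CaptionHub's implementation.

```python
# Hypothetical sketch: frames as the only internal unit of time.
import math
from fractions import Fraction

FPS_25 = Fraction(25)
FPS_29_97 = Fraction(30000, 1001)  # NTSC rate, held exactly


def ms_to_frame(ms: int, fps: Fraction) -> int:
    """Return the index (from 0) of the frame on screen at time ms."""
    return math.floor(ms * fps / 1000)


def frame_to_ms(frame: int, fps: Fraction) -> int:
    """Return a millisecond timestamp guaranteed to fall inside the given frame.

    A frame's exact start is rarely a whole millisecond, so round up: the
    result is under 1 ms late, which stays within the frame below 1000 fps.
    """
    return math.ceil(frame * 1000 / fps)


def snap_ms(ms: int, fps: Fraction) -> int:
    """Quantise a dragged or typed time to the start of its frame."""
    return frame_to_ms(ms_to_frame(ms, fps), fps)
```

Storing only frame numbers internally, and treating milliseconds purely as a transport format to and from the video element, means rounding happens in exactly one place.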

The second feature I worked on, which visibly transformed the experience of using the tool, was speech-to-text transcription of video.

One of the slowest parts of the process is creating the ‘original’ set of captions. Typing them out takes long enough, but even slower is timing them: making sure they all begin and end at the right timestamps in the video. We partnered with a white-label speech-to-text provider whose software was producing remarkable results. Using their API, we could get accurate transcriptions of speech in a matter of minutes. (At this point we could only transcribe English, albeit in a number of accents; by 2019, transcription would support 28 languages.)

We then wrote our own logic to produce captions from those transcriptions: making sure that captions broke on sentence breaks, didn’t leave punctuation hanging, and would ‘snap’ to scene changes if they looked to be near a camera transition. I also added the scene detection data to the editor timeline, highlighting edit points in orange. Caption in- and out-points would automatically snap to an edit point once they came within a couple of frames of it.
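The segmentation and snapping rules described above could look something like the following Python sketch. It is an illustration under stated assumptions, not CaptionHub's logic: the speech-to-text result is assumed to be a list of words with in- and out-frames, a caption is capped at a hypothetical `max_chars`, a caption breaks after sentence-ending punctuation (so punctuation stays with its sentence), and any caption boundary within `snap_frames` of a detected scene cut moves onto the cut.

```python
# Hypothetical sketch: word-timed transcription -> captions -> snapped to cuts.
from dataclasses import dataclass
from typing import List


@dataclass
class Word:
    text: str
    in_frame: int
    out_frame: int


@dataclass
class Caption:
    text: str
    in_frame: int
    out_frame: int


SENTENCE_END = (".", "?", "!")


def words_to_captions(words: List[Word], max_chars: int = 42) -> List[Caption]:
    captions: List[Caption] = []
    current: List[Word] = []

    def flush() -> None:
        if current:
            text = " ".join(w.text for w in current)
            captions.append(Caption(text, current[0].in_frame, current[-1].out_frame))
            current.clear()

    for word in words:
        candidate = " ".join(w.text for w in current + [word])
        if current and len(candidate) > max_chars:
            flush()  # this word would overflow the caption, so start a new one
        current.append(word)
        if word.text.endswith(SENTENCE_END):
            flush()  # sentence finished: break here rather than mid-sentence
    flush()
    return captions


def snap_to_cuts(captions: List[Caption], cuts: List[int],
                 snap_frames: int = 2) -> List[Caption]:
    """Move any in- or out-point within snap_frames of a scene cut onto that cut."""
    def snap(frame: int) -> int:
        nearest = min(cuts, key=lambda cut: abs(cut - frame), default=None)
        if nearest is not None and abs(nearest - frame) <= snap_frames:
            return nearest
        return frame

    return [Caption(c.text, snap(c.in_frame), snap(c.out_frame)) for c in captions]
```

This greedy approach can still leave a short orphan caption at the end of a sentence, and snapping can shorten a caption; a fuller version would rebalance short trailing captions and re-check minimum durations after snapping.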

The end result was that users could upload a video, and have it automatically captioned in a few minutes - already to a high quality. Most notably, because the timing of the captions needed little alteration, correcting this transcription was a matter of some swift text edits, rather than having to drag all the caption boxes around. This massively improved the end-user experience. Turnaround on caption production got much faster - especially for single-language captions. CaptionHub continues to add support for more languages and features around speech-to-text. For instance, it also supports aligning known text to video: if you have a script, and a video of someone speaking that script, we can produce captions that are 100% accurate and perfectly timed to their speech.
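Aligning known text to video is normally done with forced alignment against the audio itself. As a much cruder illustration of the idea, the Python sketch below assumes the recogniser has already returned word-level timings, uses the standard library's `difflib` to pair script words with matching transcript words, and lets unmatched script words (ones the recogniser missed or misheard) fill the gap between their matched neighbours. The function names and data shapes are hypothetical.

```python
# Hypothetical, naive alignment of a known script onto recogniser timings.
import difflib
from typing import List, Optional, Tuple

Timed = Tuple[str, int, int]  # (word, in_frame, out_frame)


def normalise(word: str) -> str:
    """Compare words case-insensitively and without surrounding punctuation."""
    return word.lower().strip(".,!?;:\"'")


def align_script(script: str, transcript: List[Timed]) -> List[Timed]:
    script_words = script.split()
    matcher = difflib.SequenceMatcher(
        a=[normalise(w) for w in script_words],
        b=[normalise(w) for w, _, _ in transcript],
        autojunk=False,
    )
    # Copy timings across wherever a run of script words matches the transcript.
    timings: List[Optional[Tuple[int, int]]] = [None] * len(script_words)
    for block in matcher.get_matching_blocks():
        for offset in range(block.size):
            _, in_frame, out_frame = transcript[block.b + offset]
            timings[block.a + offset] = (in_frame, out_frame)

    # Unmatched words span the gap between the nearest matched neighbours.
    result: List[Timed] = []
    for i, word in enumerate(script_words):
        if timings[i] is not None:
            result.append((word, timings[i][0], timings[i][1]))
            continue
        prev_out = next((timings[j][1] for j in range(i - 1, -1, -1)
                         if timings[j] is not None), 0)
        next_in = next((timings[j][0] for j in range(i + 1, len(timings))
                        if timings[j] is not None), prev_out)
        result.append((word, prev_out, next_in))
    return result
```

The output keeps the script's exact wording and punctuation while borrowing the recogniser's timing, which is the property the paragraph above describes; real forced alignment would time the unmatched words from the audio rather than interpolating.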

And as ever, we continued to improve on smaller features and workflow tools, as well as handling the needs of supporting multiple clients at once.

We continued to add to our import/export formats as clients approached us with needs. In particular, I developed code for handling formats popular in the broadcast world despite their venerable age, such as SCC and STL. That required investing time reading documentation, poking in the hex-editor mines, and using libraries such as bindata to produce suitable output. But the work paid off, enabling integration with broadcast tool workflows - vital for some of our clients.
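SCC gives a flavour of the byte-level detail involved. An SCC file is text: each line is a SMPTE timecode, a tab, then CEA-608 byte pairs written as four hex digits, where each byte carries seven data bits plus an odd-parity bit in the top position. The Python sketch below uses plain bit arithmetic rather than a library like bindata, and all function names are hypothetical. It covers only that encoding layer and assumes plain ASCII letters, digits and spaces: a few ASCII positions map to accented characters in the 608 basic set, so a real encoder needs a lookup table, and positioning codes and timing rules are ignored entirely.

```python
# Hypothetical sketch of the byte-pair encoding used in SCC files.
from typing import List

RCL = (0x14, 0x20)  # Resume Caption Loading, channel 1
EOC = (0x14, 0x2F)  # End Of Caption, channel 1
EDM = (0x14, 0x2C)  # Erase Displayed Memory, channel 1


def with_odd_parity(byte: int) -> int:
    """Keep the low seven bits and set bit 7 if needed to make the count of 1 bits odd."""
    data = byte & 0x7F
    if bin(data).count("1") % 2 == 0:
        data |= 0x80
    return data


def scc_word(first: int, second: int) -> str:
    """Format a byte pair as it appears in an SCC file, e.g. '9420'."""
    return f"{with_odd_parity(first):02x}{with_odd_parity(second):02x}"


def scc_text_words(text: str) -> List[str]:
    """Pack characters two per word, padding an odd final byte with 0x00."""
    codes = []
    for ch in text:
        if not 0x20 <= ord(ch) < 0x7F:
            raise ValueError(f"not a basic 608 character: {ch!r}")
        codes.append(ord(ch))
    if len(codes) % 2:
        codes.append(0x00)  # becomes 80 once parity is applied
    return [scc_word(codes[i], codes[i + 1]) for i in range(0, len(codes), 2)]


def scc_line(timecode: str, words: List[str]) -> str:
    """One SCC line: timecode, a tab, then space-separated words."""
    return f"{timecode}\t{' '.join(words)}"
```

Control codes are conventionally sent twice in a row for redundancy, which is why so many SCC lines begin with the familiar `9420 9420`.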

We improved our infrastructure: we brought in a dedicated sysadmin, and built up automated deployment tooling that made maintaining all our environments - including local development environments - straightforward, reliable, and easy to alter.

We also grew the development team, and my new colleagues were instrumental in adding all manner of functionality - such as handling invoicing and payment online, building our own API-based services and integrating with increasing numbers of third-party tools.

I had gone from sole developer and pathfinder to tech lead: helping shape the team, guiding the CEO and working with them on developing the product, and trying to share the deep domain knowledge I had built up with the highly talented team we’d assembled.

Increasingly, I moved further away from the coalface of implementation. Now that CaptionHub knew their product and users better, there was much clearer insight into the UI users required, and so the team ended up redesigning and rebuilding the front-end, incrementally replacing code with a component-based architecture in Vue. The last piece to be updated was the rich video editor itself, and I was hugely pleased to see other developers take my Backbone code and bring it up to date within a reactive framework. This effort paid off: the code is more performant and clearer for the development team to work with, and it delivers a much better user experience to clients.

CaptionHub Today

CaptionHub continues to grow its userbase, and makes a strong impression with all the clients and agencies who use it. The team has grown and matured, and the product still grows in several directions at once - from new integrations and partnerships to new core functionality, such as captioning live video automatically.

I’m proud of all my work on it - but I’m equally proud of the amount of my code which has now been rewritten or mothballed. There is a particular delight in seeing a product grow to the point that you have slowly been superseded. And, in parallel with my code being replaced, refactored, and improved, I have stepped back from working on the product. I’m still in touch with the team, though, and consult from time to time on product and technology strategy, as well as the nuances of broadcast data formats.

I’m also proud of the impact it has. I enjoy making tools for other people to do their work with. It was hugely rewarding to hear linguists and captioning teams tell me it’s the best tool they’ve ever used, or that they’re twice as productive as they used to be.

It was exciting to share in the journey of building a software team and a product. The VFX industry is highly reliant on cutting-edge technology, and full of technology savvy - it’s an industry where many companies end up building their own tools and products. CaptionHub took the Neon team on their own journey to building technology products, and they were a delight to work with; I enjoyed my close relationship with Tom Bridges, their CEO, who became a great product owner and CEO of CaptionHub.

My work on CaptionHub reflects the development of my personal practice. It is rooted in thinking-through-making: using the act of prototyping as a way of understanding a domain and the right thing to build, not just for myself as a developer, but also for a client.

For a long while, I was the developer and designer of the whole system, working across the stack from browser to infrastructure. As the product grew, we began to grow the team to spread the load, and a lot of my work became shaping features out of requirements, and leading on architecture. By the end, my work was spread out vertically across the product: at one end, I was implementing code to legacy broadcast specifications; at the other, advising on technology strategy; in the middle was a deeply collaborative relationship with the team, all of whom were hugely capable. I’m somewhat loath to describe what I did as leadership; at the time, it felt very much like listening, sharing, and frequently accepting the insight of others, and the team worked well as trusting equals.

I’m delighted to see CaptionHub in the world, thriving, and being put to such good use by so many teams.