Posts tagged as weeknotes

Summer 2023: what's on the slate?

1 August 2023What’s been going on in the studio this summer?

UAL Creative Computing Institute

I finished another term of teaching at CCI: as usual, teaching the first year BSc Creative Computing students about Sound and Image Processing - an introduction to implementing audio and graphics in code. That means pixel arrays, dithering, audio buffers, unit generators, building up to particle systems and flocking - all with a focus on the creative application of these topics. It was great to see where the students had got to in their final portfolios - some lovely and surprising work in there, as always.

A reminder: why do I teach alongside consulting and development work? I have no employees, and so this is my way of sharing back my knowledge and developing new talent - as a practitioner-teacher, and as someone who can share expertise from within industry back to students looking to break into it.

I value “education” as an ideal, and so spending about half a day a week, around client work, for a few months, to educate and share knowledge feels like a reasonable use of my time. It’s also good to practice the teaching/educating muscle: there’s always new stuff to learn, especially in a classroom environment, that feeds into other workshop and collaboration spaces.

AI Clock P2

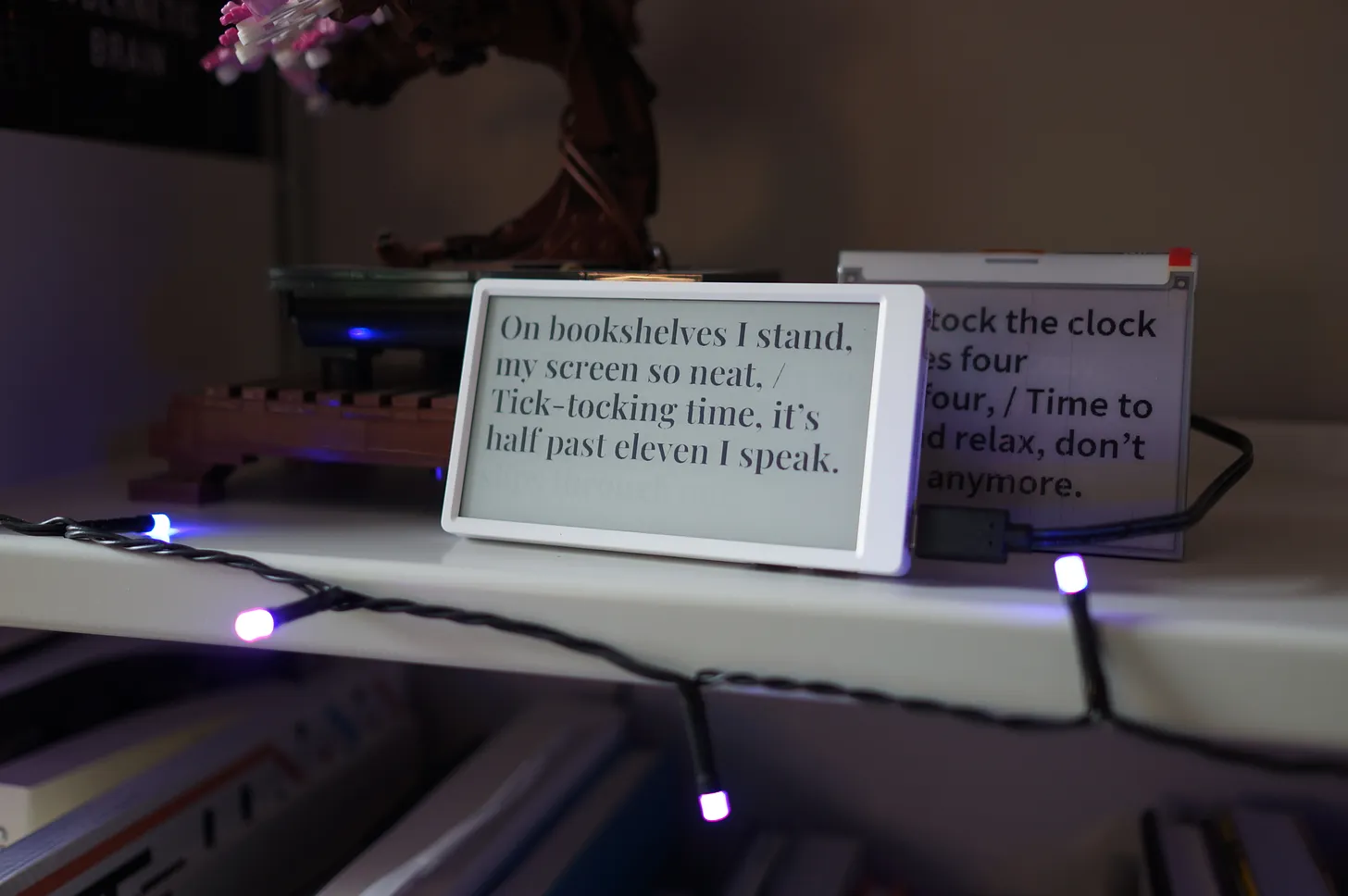

Matt's photo of P2 on his shelf I spent a short while working with Matt Webb on “P2” - prototype 2 - of his AI Clock. He’s written more about this prototype over at the newsletter for the project - including his route to getting the product into the world.

This prototype involved evaluating a few different e-ink screen modules according to Matt’s goals for the project, and creating a first pass of his interaction design in embedded code. I built out the critical paths he’d designed for connecting and configuring the clock, as well as integrating it with his new API service. The prototype established feasibility of the design and, during its development, informed the second version of the overall architecture.

Since I’ve handed over the code, he’s continued to build on top of it. I find this part of handover satisfying: nothing is worth than handing over code and it sitting, going stale, or unmaintained. By contrast, when someone can take what you’ve done and spin it up easily, start working with it, and extending it - that feels like part of a contracting/consulting job well done: not just doing the initial task, but making a foundation for others to build on. Some of that work is code, some of it’s documentation, and some of it’s collaboration, and it’s something I work towards on all my client engagements.

Lunar Energy

I’ve been working on a small project with Lunar Energy. The design team were looking to prototype a specific hardware interaction on the actual hardware involved. I’ve been making hardware and software to enable the designers to work with the real materials in a rapid fashion - and in a way that they can easily share with technical and product colleagues.

It’s been a great example of engineering in the service of design, and of the kind of collaborative toolmaking work I both enjoy and am particularly effective at. I’ve been jumping back and forth between browser and hardware, Javascript and embedded C++, and I think we’ve got to a really good place. I can’t wait to share a case study.

Promising Trouble

Finally, I’ve started a small consultancy gig with Promising Trouble on one of their programmes, spread over a few days this summer, and then a few more towards the winter. My job here is being a technological sounding board and trusted advisor, to help join the team join some dots on a project exploring a technological prototype to address societal issues. A nice consultancy project to sit alongside the nitty-gritty of the Lunar work.

and…?

And what else? I have capacity from early August onwards, so if you’d like to talk about opportunities for collaboration or work, now is a great time to get in touch. What shape of opportunities? Right now, my sweet spots are:

- invention and problem-solving: answering the questions what if? or could we even?; many of my most successful and impactful projects have begun by exploring the possible, and then building the probable.

- “engineering for design” - being a software developer in the service of design, or vice versa; the technical detail of platforms, APIs, or hardware is part of what we design for now, and recent work for companies like Google AIUX and Lunar has involved straddling both worlds, letting one inform the other, working in tight cross-functional teams to build and explore that space, and ultimately communicating all of this to stakeholders.

- projects that blend the digital and physical. I’ve been working on increasing numbers of projects that straddle hardware, firmware, and desktop or browser software, and the interactions that connect all of them.

- technology strategy; that might be writing/thinking/collaborating, or building/exploring, or first one, then the other; I’ve recently done this with a few early-stage founders and companies.

- focused technical projects, whether greenfield or a companion to existing work - that might be adding or integrating a significant feature, or building a standalone tool. I tend to work on the Web, in Ruby, Typescript and Javascript (as well as markup/CSS, obviously). I like relational data models, HTTP, and the interesting edges of browsers.

- and in all of the above: thinking through making, informing the work through prototypes and final product builds.

You can email me here if any of that sounds relevant. And now, back to the desk and workbench!

Spring 2022 Worknotes

20 April 2022What happened was: I meant to write about the end of 2021 before a big project in 2022 kicked off. And instead, 2022 came around, the project kicked off hard and whoosh it’s now the Spring.

I have been quiet here, but busy at work. And so, here we are.

What’s been going on?

Wrekin wrapped up in the autumn, as expected. I completed my final pieces of documentation, and handed them over. Recently, I’ve been doing a small amount of handover and advisory work with their new lead developer as they push through the next phase of the project. I’m excited to see where they’ll take it.

The small media project I mentioned last time came to fruition. Codenamed Hergest, it was a proof-of-concept to explore if a product idea was achievable - and feasible - within a web browser. Rather than prototype the whole product, I focused on the single most important part of the experience - which was also the single most complex part of the product, and the make-or-break for if the product was feasible. We got to a point where it looked like it would be possible, and I could frame what it’d need to take forward - to “paint the rest of the owl“, as I described it to the client.

Hergest covered a fun technology stack: an app-like UI in the browser, using a reactive web framework; on-demand audio rendering using Google Cloud Run; loading media from disk without sending it across the network thanks to the File API; and working with Tone.js for audio functionality in the browser. It’s remarkable what you can do in the browser these days, especially in contexts where such rich behaviour is appropriate. In a few weeks of prototyping we got to an end-to-end test that could be evaluated with users, to understand demand and needs without spending any more than necessary.

Lowfell has slipped a little, which is on me: there was some issues with UPS shipping, and then pre-existing commitments managed to steal a lot of my energy. I’m hoping to resolve this in the coming weeks, but I did at least get a second prototype shipped and a 1.0 firmware built and signed off.

There was more teaching. I returned to be industry lead for the Hyper Island part-time MA, and spent some good days with an international crew delivering some lectures and coaching them on their briefs - this time, a particularly interesting brief delivered by Springer Nature. The students really got stuck in to the world of scientific publishing; a shame we were still delivering remotely, but not much else could be done there. I also am teaching at CCI again, delivering Sound and Image Processing for first year Creative Computing undergrads for one morning a week, through to the summer.

Finally, in Q1 I kicked off a three month project working with Normally. A team of three of us worked on a challenging and intense public sector brief. Gathering data, performing interviews, sketching, and prototyping; we spent 12 busy weeks exploring and thinking, and I was personally really pleased where the final end-to-end prototype we shipped - as well as the accompanying thought and strategy - ended up. It was great working with Ivo and Sara, as well as spending time in the wider Normally studio, and perhaps our paths will cross again. It was a great start to the year, but pretty intense, and so I didn’t have much time outside it for reflection.

What’s up next?

Two existing projects need wrapping: firstly, finishing up term at CCI and marking some student portfolios, and also wrapping up Lowfell.

Secondly, I’m going to spend the summer working with the same crew inside Google Research that I worked on Easington with, on a interesting internal web project. Not a lot to be said there, but it’ll keep me busy until the autumn, and I’m looking forward to working with that gang again.

And so, as ever: onwards.

Spring 2022 Worknotes

20 April 2022What happened was: I meant to write about the end of 2021 before a big project in 2022 kicked off. And instead, 2022 came around, the project kicked off hard and whoosh it’s now the Spring.

I have been quiet here, but busy at work. And so, here we are.

What’s been going on?

Wrekin wrapped up in the autumn, as expected. I completed my final pieces of documentation, and handed them over. Recently, I’ve been doing a small amount of handover and advisory work with their new lead developer as they push through the next phase of the project. I’m excited to see where they’ll take it.

The small media project I mentioned last time came to fruition. Codenamed Hergest, it was a proof-of-concept to explore if a product idea was achievable - and feasible - within a web browser. Rather than prototype the whole product, I focused on the single most important part of the experience - which was also the single most complex part of the product, and the make-or-break for if the product was feasible. We got to a point where it looked like it would be possible, and I could frame what it’d need to take forward - to “paint the rest of the owl“, as I described it to the client.

Hergest covered a fun technology stack: an app-like UI in the browser, using a reactive web framework; on-demand audio rendering using Google Cloud Run; loading media from disk without sending it across the network thanks to the File API; and working with Tone.js for audio functionality in the browser. It’s remarkable what you can do in the browser these days, especially in contexts where such rich behaviour is appropriate. In a few weeks of prototyping we got to an end-to-end test that could be evaluated with users, to understand demand and needs without spending any more than necessary.

Lowfell has slipped a little, which is on me: there was some issues with UPS shipping, and then pre-existing commitments managed to steal a lot of my energy. I’m hoping to resolve this in the coming weeks, but I did at least get a second prototype shipped and a 1.0 firmware built and signed off.

There was more teaching. I returned to be industry lead for the Hyper Island part-time MA, and spent some good days with an international crew delivering some lectures and coaching them on their briefs - this time, a particularly interesting brief delivered by Springer Nature. The students really got stuck in to the world of scientific publishing; a shame we were still delivering remotely, but not much else could be done there. I also am teaching at CCI again, delivering Sound and Image Processing for first year Creative Computing undergrads for one morning a week, through to the summer.

Finally, in Q1 I kicked off a three month project working with Normally. A team of three of us worked on a challenging and intense public sector brief. Gathering data, performing interviews, sketching, and prototyping; we spent 12 busy weeks exploring and thinking, and I was personally really pleased where the final end-to-end prototype we shipped - as well as the accompanying thought and strategy - ended up. It was great working with Ivo and Sara, as well as spending time in the wider Normally studio, and perhaps our paths will cross again. It was a great start to the year, but pretty intense, and so I didn’t have much time outside it for reflection.

What’s up next?

Two existing projects need wrapping: firstly, finishing up term at CCI and marking some student portfolios, and also wrapping up Lowfell.

Secondly, I’m going to spend the summer working with the same crew inside Google Research that I worked on Easington with, on a interesting internal web project. Not a lot to be said there, but it’ll keep me busy until the autumn, and I’m looking forward to working with that gang again.

And so, as ever: onwards.

Worknotes, Autumn 2021 edition

8 October 2021It’s autumn. What’s been going on since I last wrote?

Teaching at UAL-CCI

I wrapped up a term of teaching a single module at UAL’s Creative Computing Institute.

Sound and Image Processing is a module about using code to generate and manipulate images, video, and sound. The explanation I use in the first week is: we’re not learning how to operate Photoshop, we’re learning how to write Photoshop.

The course worked its way up the ladder of abstraction, starting with the representation of a single coloured pixel, through to how images are just arrays of pixels (and video, similarly, just a sequence of those arrays). We covered manipulating those arrays simply - with greyscale filtering - and then more procedural methods such as greyscale dithering and convolution filters. We could then apply this approach to sound, too, starting by building a sound wave, sample-by-sample, and then looking at higher-level manipulations of that sound wave to make instruments and effects. Finally, having already looked at raster graphics, we looked at maniuplating vector-graphics in real time to produce animation, particle systems, and behaviour, culminating in implenenting Craig Reynolds’ Boids and creature-like simulation.

This was quite demanding: it was my first time teaching this material, which meant that before I could teach it to anybody else, I had to teach it to myself in enough depth to be confident tackling questions beyond the core material, and to be able to explain and clarify those basics. Perhaps that’s a lack of confidence showing - a kind of perfectionism as a way of hedging against coming up blank - but as the term went on, I relaxed into it, and found a good balance between preparation, delivery, and the collaborative process of teaching and learning.

And, of course, it was almost all taught entirely remotely. The students did admirably given that awkward restriction - some of them may only have been coding for a single term prior - and they delivered a great range of work in their portfolios. I’ve said yes to teaching the course again next year; whilst I greatly enjoy my commercial work, teaching aligns strongly with my values, and it’s a rewarding way to spend a small amount of time each week.

Ilkley

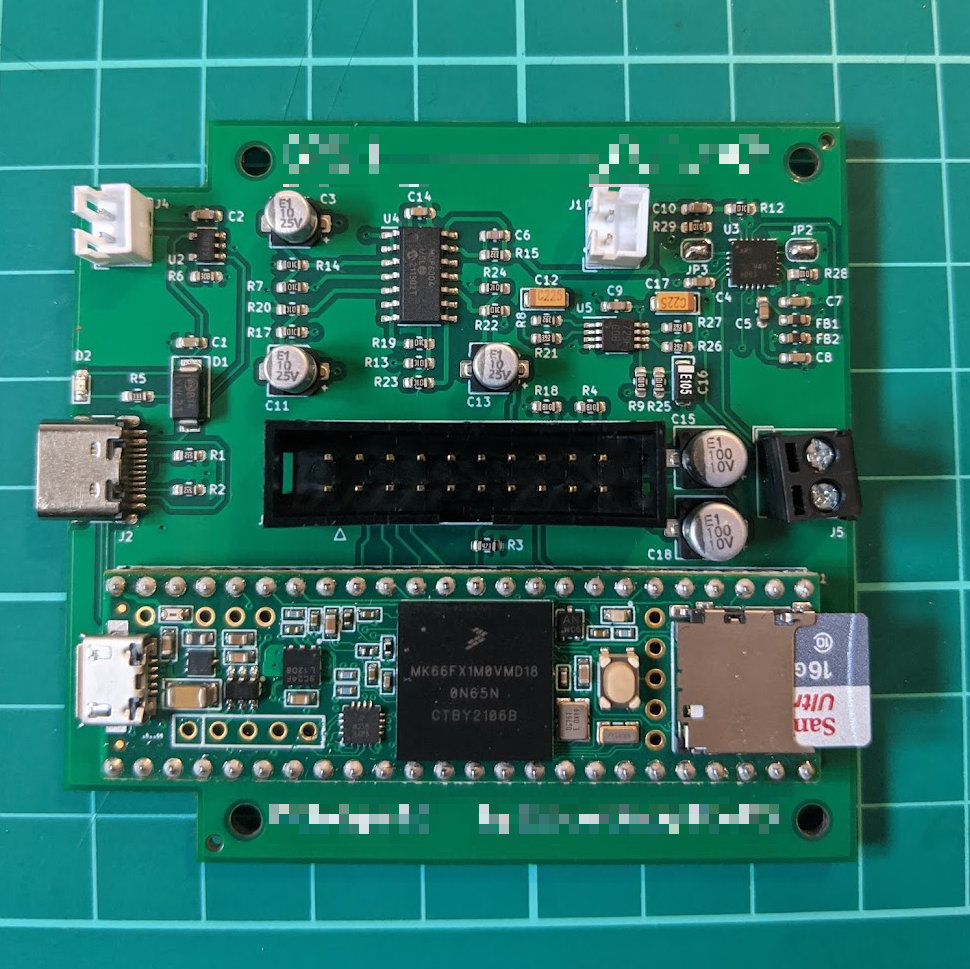

The Ilkley revision B 'brain' board, with a Teensy 3.6 attached. Ilkley has continued to run on for the spring and summer. Last I wrote, I’d just received the first Ilkley prototype board. Six months later, there have now been several more prototypes - three versions of the ‘brain’ board, and two of the upper control board. The second brain revision is the one we’re sticking with for now - the third was a misfire, and really, a reach beyond the needs of the project. By contrast, the control board was fine first time; the second version of was primarily about fitting the components in an enclosure better.

The firmware has been more stable, largely being fine out of the gate. The ongoing work was primarily about finesse, rather than wholesale replacement, so I’m pleased with that.

As I write, I’ve just packaged up five prototypes to send to Matt in New York, which wraps up the end of this phase of my work on the project. The device works end-to-end, and is ready to be played with by test users. I look forward to hearing about his results.

Wrekin

In the summer I began a new piece of work that we’ll call Wrekin, working with a very early-stage startup on building a functioning “experience prototype” to validate their product idea with real users.

This was very much a prototype in the mould described in Props and Prototypes. Some of the prototype had to be 100% working, as we wanted to evaluate certain ideas in a real implementation, to see if the technology was up to scratch, and if users liked it. One other key idea was, though core to the product, largely proven: a ‘known known’. Wwe could get away - for now - with a “stunt double” version of the idea. And then a few more pieces of the prototype were, whilst functioning, only for the experience test; they’d be thrown away in due course.

Over the course of a couple of 2-3 week phases, I built out a feasibility study, that then turned into a deployed prototype, and shipped that to the client for them to test. It’s been a fun project - some interesting boundaries of what you can do with realtime video and WebRTC - and I’ve continued to work with the client on planning future technology strategy, and, if all comes to plan, turning the experience prototype into an end-to-end one that demonstrates all pieces of the puzzle.

It’s also been a project where work on features and design concepts has directly informed future strategy. Not just thinking about what’s possible, or what is desirable, but also understanding the value of build-versus-buy for certain functionality, and for starting to explore the “what-ifs” - the unknown unknowns - that emerged as we work.

I’m continuing to advise the Wrekin team, and may be working with them a little more in the coming months.

Lowfell

Finally, Lowfell. this is another hardware project: a commission for a custom MIDI controller for an LA-based media composer, who works in film, games, and TV.

The controller itself has few features. A few people I’ve shown it to were a little underwhelmed! But the brief for the project was never about complexity.

What client wanted, really, was an axe - a “daily driver”. Something sturdy, beautiful, and not drowning in features: that just did what they need it. They were going to be looking at this thing every day, for eight or more hours a day. They didn’t need RGB lights or tons of features they weren’t going to use; just the things they did want, in an elegant and suitably-sized unit. It’s the same reason we spend a lot on a chair, or a monitor, or an expensive keyboard (or on good tools, or good shoes): you use them a lot, and it’s worth investing.

Also, they didn’t want just one: they wanted one for each setup in their studio space, making it easy to move between workstations and to keep going.

The project began slowly. We did lots of conversation just exploring the idea, and I sent some paper mockups as PDFs over email to give the client a feel for what the size of the unit would be. Once we were happy with the unit, I built a first version, with fully working electronics, and a prototype enclosure that used PCB substrate - coated fiberglass - for the panels, with the sides of the case produced on my 3D printer.

This was enough for us to evaluate functionality and features. I made sure it was trivial to update the firmware on the unit, so I could send over fixes as necessary. (In this case, because we’re using the UF2 bootloader, updating the firmware just requires holding a button on as the controller is connected; this mounts a disk on your computer desktop, to which a new firmware file can be dragged).

Whilst the client evaluated the project, I started work on final casings: using higher-quality printed nylon for the sides, and moving to bead-blasted anodized aluminium for the upper and lower panels. The result is weighty, minimal, and beautiful, and I’m looking forward to sharing more when the project is complete.

Wrekin was my main focus this summer. Ilkley and Lowfell wrapped around it quite well: the rhythm of hardware is bursty, with prototypes and design work alternating with the weeks of waiting for fabrication to come back to me with the things I can’t make myself.

What there’s not been a lot of, of course, is writing. It has fallen out of my process a little. Not deliberately; perhaps just as a byproduct of The Times, coupled with several products that were either challenging to share news on in progress, or in the case of ones with NDAs, impossible.

But: a decent six months. Up next is, I hope, shipping a final version of Lowfell, some more software development for Wrekin, and a potential small new web-based media project on the horizon. I’m on the lookout for future projects, though, and always enjoy catching up or meeting new people, so if you think you’ve got a project suited to the skills or processes I write about here, do drop me a line.

Worknotes for March 2021: 'What is this prototype for?'

23 March 2021Last week I sent all the files necessary to build the first draft at my Ilkley prototype to China. That means the plotting files to make the circuit boards, the list of all the components on them, the positions of all the components. The factory’s going to make the circuit boards and attach most of the components for me.

This is good, because many of the components are tiny.

The Ilkley prototype is on two boards: a ‘brain’ board that contains the microcontroller and almost all the electronics, and a separate ‘control’ board that is just some IO and inputs - knobs, buttons. I am focusing on the brain right now: its “revision A” board is the right size and shape to go in our housing; the current prototype of the control board is just designed to sit on my desk.

About six hours after I sent it all off, I got an email: I’d designed around the wrong sized part. (I’d picked a 3mmx3mm QFN part instead of a 4mm square part, because that’s what had been auto-selected by the component library). This meant they couldn’t place the part on the board: it wouldn’t fit.

“Should we ignore the part and go ahead with assembly?“

At this point blood rushes to my head. That part is one of the reasons I’m not building it myself: it’s not really possibly to attach with a soldering iron, and I don’t have a better tool available at home. So maybe I should quickly redesign around the right part? I hammered out a new design in Kicad.

But now I’d have to send new files, new placements over, and probably start the order again. This was going to add delays, might not even be accepted by the fabricator, and so on.

Deep breath.

At this point, I took a step back, and had a cup of tea.

Over said tea, I made myself answer the question: what was this prototype for?

Was it only to test the functionality of that single chip that couldn’t be placed? The answer, of course, was no. There were lots of things it evaluated, and lots of things that could still be evaluated:

- many other sub-circuits - notably, a battery charger, a second amplifier, a mixer

- the integration with the ‘control board’ and the feasibility of my ribbon-cable prototype

- how several parts ended up being fitted, which the fabricator has not used for me before

- the fit/finish inside our final enclosure

- not to mention whether I’d made any other mistakes on the board.

So far on Ilkley, we have been lucky: every single first revision of our hardware’s worked. This doesn’t mean we’re brilliant at everything; it means nothing more than that we’re in credit with the gods of hardware. Something will go wrong at some point - that’s what

revision Bis for. All that had happened was I’d hit my first big snag.Prototypes aren’t about answering every question, but they’re rarely also about answering one. I usually teach people to scope them by being able to answer the question what is being prototyped here? - the goal being to understand what’s in scope and what is not. Temporarily, in the panic of a 4am email from China, I forgot to answer that question myself. It was good to be reminded how many variables were at play in that Revision A, if only to acknowledge how many things I have going on with that board.

I wrote back to the factory; ignore the part and proceed. We’d still learn a lot from the prototype, and revision B would contain, at a minimum, a new footprint for that 4mm QFN chip. I saved the hasty changes I’d made to the circuit board after the email in a new branch called

revision_b- which I’d return to working on once the final boards arrived.Worknotes - February 2021

24 February 2021It’s the middle of February, 2021. What’s going on since I last wrote, and what’s coming up next?

Wrapping up at CaptionHub

My stint at CaptionHub got extended a little, and I finally wrapped in the middle of February, last week. Everything went as well as I could have hoped on the overhaul of some fundamental parts of the codebase that I was working on.

I’m pleased with the decisions we made. It was good to review all the work with the team and agree that, yes, those decisions we took our time over and drew so many diagrams of were sensible ones, and it had been worth investing the time at the point in the process. We added more complexity in one location, but managed to remove it from several others, and I enjoyed the points where code became easier to re-use simply because we had standardised an interface.

A pleasure to be back writing Ruby, too; not just for its familiarity, but for just how it is constantly such an expressive language to work in and to write. And, of course, a pleasure to work with such a sharp and thoughtful development team.

Working with Extraordinary Facility

I started working with Matt at Extraordinary Facility around the end of January. Let’s call this project Ilkley for now. It’s years since I worked with Matt at BERG, and I was excited for the opportunity to work with him again. I enjoy his eye, taste, and process as a designer so much. You should definitely check out his recently published Seeing CO2 prototype for an example of his approach.

Ilkley is a physical product prototype. I’m taking a prototype that existed between hardware and computer software, and shrinking it into a dedicated box: porting all the code to run on a microcontroller, building the user interface, adding necessary extra hardware to make it entirely standalone… and then designing custom circuit boards to fit into an enclosure. That means: firmware, a bit of EE, some CAD, and then iterating software to get it feeling right. Leaping back and forth between the digital and physical, and code, electronics, and atoms; from pens and paper to alt-tabbing between code editors, KiCad, and Fusion 360. It’s been highly enjoyable so far.

I’ve appreciated the way Matt is laser-focused on the outcomes he’s looking for. The existing prototypes were the design work; this phase is entirely about bringing that design to life. Implementation, feasibility, and building a roadmap for the future. Until he can get more people to use it, it’s not worth us spending time altering anything else. We still go off on diversions and discussions, of course, as we chat things over, but they’ll get gently parked before they jump their place in the queue. It’s great to still have those discussions, though!

Our first tranche of work investigated whether what Matt hoped for was possible. The answer was an emphatic yes, and by the end of it, we’d gone from a large breadboard and tangle of jumper wires on my desk to a small, custom “bench prototype” PCB. Nothing that could fit into a box yet, but something I could reliably send Matt (in New York) to evaluate and test. (Which in itself helped me prototype the answers to “_how do we best ship prototypes across the Atlantic_”).

I also could then start prototyping other submodule circuits on breadboards, experimenting with them whilst not worrying about the main electronics, which were ‘frozen’ thanks to having a fixed design.

One top tip from this process: something that helped enormously was continuously maintaining an up-to-date schematic of the breadboard prototype as I worked on it. As I built the tangly prototype on my workbench, I also drew it up in KiCad, altering it whenever wiring changed, or resistor values were altered. The tangle got more complex as time went on, but this didn’t matter, because there was always a map of it available in the ECAD tool. When we were happy with the prototype I’d been demoing on video calls, it was easy to start the work to turn it into a PCB because I already had the schematic. I didn’t need to start deciphering the knot of wires; I could just put them to one side and move straight onto board layout.

I did exactly the same for the small submodules as I built them; those schematics would then be ready to use again for the first ‘full’ prototype.

Progress has been really good, and I’m going to start a second phase of work soon, to take the various sub modules and bench-sized prototype, and start turning it into an entirely self-contained object. That’s going to run over the next couple of months, I’d imagine, with some gaps to get things fabricated and pull things together.

Teaching at UAL CCI

Having wrapped at CaptionHub, and with at least one client project moving on, I’m also going to be teaching an undergraduate course at UAL’s Creative Computing Institute - the Sound and Image Processing module for students in the first year of their BSc. One afternoon a week, for the next three months.

Why teach? I’m not an academic, and don’t plan to become a full time researcher or lecturer. But I’m dovetailing a small amount of teaching around my client work over the past few years - Hyper Island, the IOC, and now this.

A few answers spring to mind.

Firstly, it’s a bit about the shape of the “industry” I work within. I’m a consultant and freelancer. How do I develop talent, or share my knowledge with others? I can’t do it with the other employees of my company of one. Sharing my knowledge with students and learners through teaching engagements is one way I can. Many of my peers who are located more within the Design community integrate teaching into part of their practice well, and whilst I often use the word ‘design’ to describe a lot of my work, ‘technology’ is also an important part of my practice - and ways of integrating teaching into technical practice is something I perhaps don’t see as much of. So I’m going to see how it goes.

Secondly, I find it a great way to cast a lens at my own practice. Nothing forces you to re-evaluate your own work, approaches, or knowledge, than having to explain or discuss ideas with others. Is it selfish to say that? I don’t think so; more just to reinforce that you can’t help but learn things yourself by going through the process of teaching.

And perhaps most importantly - I learn from all the students I teach. This is in part a reflected version of the previous point - yes, I learn by looking at my own understanding and reflecting on it… but I also learn from listening and sharing with others. Students and learners at all levels bring new perspectives, expertise, experience, and ways of understanding to the table, and I (along with the rest of the group) get to share in that with them.

All of which I value. So, an opportunity arose, to think about images and sound from first principles, and ways of exploring and explaining that. I said yes.

Which all feels like a good slate for now: prototyping design products; teaching about the landscape of code; a little slack between all that for reflection and personal development and work, which (at the end of a year that was already busy before being A Bit Much on top of it all) is much needed.

Onwards.

Worknotes - November 2020

20 November 2020I taught at Hyper Island this year for my fourth year now, as Industry Leader for the Digital Technologies module of their part-time Digital Management MA.

At the end of the course, I give a paper where I talk about personal process and practice. It’s a bit left-of-centre: not so much Best Practice, as Things I Have Found Useful.

I always tweak and rework the course a little each year. This year, I came to this paper, and found myself stuck. In it, I talked about the value of personal documentation:

READMEs in every project and code directory, so you can always pick up a thread after months away; weeknotes as a ritual and way of evaluating work. I talked about the value of colocation - why I prefer studios to offices, why the right people together in the right space is such a winner for work, and how precious a Good Room can be. And at the end, I self-indulgently talk about the value of reading fictionAt the beginning of September, I sat looking at the deck, and realised:

I am doing none of that right now.

Weeknotes, as you can tell, have fallen by the wayside. I’m Working From Home If Possible (and it is possible), not going up to my studio space. I’m working on projects on a succession of videoconferencing tools, all with their own quirks, and spending longer in my study than I’d like. I’m not reading as much fiction as I’d like.

I felt like I’d be a hypocrite to say these things to students.

In the end, I said it all anyway, dropping in a quick “recordscratch” sound effect to call myself on my own shortcomings, and to talk about the difficulty of espousing studio culture in an airborne pandemic.

It went down well, but it also turned out to be not as foolish an idea as I thought. By acknowledging the fragility of ideals in the face of reality, we could explore why I was recommending those practices in the first place. By challenging myself as to why I wasn’t following my own advice, I could explore what the point of that advice might have been.

The fact that I wasn’t writing at the moment didn’t mean that writing wasn’t a thing to recommend. It possibly meant that weekly notes were not quite serving a purpose now. After all, if I was busy, and getting the work done, and if the thing that fell by the wayside was talking about that, I might have to live with it, and work out how to change it.

For now, weekly notes might disappear for a bit. I’ll work out what replaces them in due course.

Meanwhile, let’s see where things are right now, in the middle of November 2020, and what’s happened in the past four months.

Easington

I delivered Easington in early September. I’d written about the project here previously; most notably, in this post on props and prototypes.

But then writing about it dried up. This was partly because having worked out what the job was, I mainly had to work on delivering that. Having done bunch of Thinking About Stuff, much of the work was Making Stuff and Explaining Stuff (sometimes both at once). There often wasn’t much more I could easily say here other than “yep, getting on with things“.

If you’ve read these notes for a while, you’ll know I’ve got all manner of ways of saying “yep, getting on with things“, but 2020 appeared to exhaust them.

It was also challenging because Easington was under an NDA, and the specifics of the project meant there was almost nothing I could say without revealing things my NDA forbids me from revealing. And given that, I was largely quite quiet.

What I can say:

Easington was a fourteen-week R&D project for Google AIUX, who work at exactly that intersection: the user experience of future AI-driven products. I worked closely with design colleagues inside AIUX, especially in London, but also spoke to researchers and topic experts from internal teams around the world. It was challenging and rewarding in equal measure. I’m proud of what we delivered.

It was also a project that almost directly mapped to what I laid out in March, when I wrote about what I do, before Everything Changed, and it was hugely rewarding to confirm that yes, that’s an excellent summation of my sweetspot.

Vacation

I went on vacation in the UK for two weeks after Easington ended. This was long-planned, and not an emergency “COVID holiday”. But I was very fortunate to be able to take that time off, and to do so in a safe and sensible way.

Teaching at Hyper Island

As mentioned above, I taught at Hyper again. Normally, this takes place in December and January of a year. However, as we were going to be teaching remotely this year, they decided to move my module to be the first the students took, not the second, and we delivered this in September and early October.

I reworked a lot of the material, and will write more about the specific nature of that reworking in the future, because I learned a lot about delivering material online.

In broad terms, we made everything shorter, denser, and with more breaks - lectures became more like “episodes”, nothing over 25m without a break. We also ran shorter days - 11-5pm, rather than 9-6pm, as we were all on Zoom for most of the day. In that reworking and condensation, I think I got to some of the best versions of those talks; condensation, and the clarity videochat requires, really helped to focus things.

I also coached the teams on their client project, and this time around it was exciting to see them embark on their first project, with no idea of where they could go, or what they could get done, in such a short space of time. Everybody’s pitches came out great, and it was a delight to meet so many new people. I say something similar each year, but I say it anyway, because it’s true.

I was also grateful to colleagues and peers we could invite in as guest speakers - to everyone who came, thank you.

A change of venue

As mentioned, I’m not really using my studio space at all at the minute: there’s just no reason to be commuting. By September, I realised that this was going to be a longer-term change. I’m lucky enough to have a private workspace at home, and with some rearrangement, it’d be suitable as a place to work from. So, with some sadness, I handed in my notice at Makerversity. I’ve been at MV for almost exactly five years now, and was greatly enjoying the new setup in Vault 7. But right now, I cannot justify the expenditure, given how little I need to be there. And so I’m going to say goodbye, and work from the home office for a bit. Not how I ever imagined I’d leave MV, and the excellent Somerset House community, but there you go.

A return to CaptionHub

Finally, in October, I returned to a three-month contract with CaptionHub. They had some extra work coming up on their slate and an extra pair of hands that knew the project would be welcome to help deliver it. Given the uncertain nature of 2020 - and, to be honest, 2021 - it felt like a no-brainer to take a three-month contract with them.

In one sense, this project is ‘just’ a software development gig. But it’s a particular kind of engineering I quite like: a careful, almost standalone set of features that need as much planning as they do implementation. I’ve been focusing on swapping out some of the underlying premises in the code, in order to support future features, which has led to a lot of diagrams and thinking before embarking on a careful switch from one shape of the world to another. I have described this as indianajonesing, in reference to the opening of Raiders of the Lost Ark (and I should note, this is not my metaphor - I just cannot remember where I got it from). In our case, I’m swapping one set of code for another, and making sure nobody notices the changeover, and no-one gets squished by a giant boulder.

It’s going well, although it takes a lot of my brain and concentration (which is not quite in the supply it was in 2019). That often means there’s not space for much else at week’s end (I’m taking Fridays as days for personal work). But good work, with a lovely, thoughtful team, is highly appreciated right now.

Appreciation is the right note to end on. I’ve been very lucky to be able to take on all these projects this year, in a time when work hasn’t necessarily been easy for everyone, and I’m always careful to acknowledge that when talking about my own practice.

Right now, I wanted to acknowledge where everything was, and share what I could about the last few months. I imagine worknotes continuing in perhaps a less frequent format for the coming weeks and months, whilst I get back in the habit, and maybe find new ways to share my practice.

What I concluded, talking to the students, was not the frequency, but the act. Some writing beats no writing.

And hence: some writing.

Weeks 391-393: Props and Prototypes

20 July 2020I wrapped up phase two of Easington. We completed the beta of our interactive game-like thing that we built to explain the output of that phase. The prototype evolved a lot during the phase. Probably the best thing that happened as it progressed was that things I’d initially described in text were extracted to state. That is to say, we made it more gamelike: rather than describing other possible outcomes of an action, why not find a way of letting the user alter the state of the world, and then see what happens when they repeat the action?

It sounds obvious when I write it down, but when I’m head down in the code, it is sometimes hard to have that high-level picture. This is one of the values of weeknotes: acknowledging what I missed, and writing it down so I don’t forget. Making the prototype more interactive - adding new interactions, and making it respond more richly to them, turned out to be the right answer every time. Something to remember for the future.

This phase of the project has had me thinking a lot about propmaking, and its relationship to protoyping.

Props in films aren’t just one thing. Think about a prop like, say, a lightsaber from Star Wars. A single prop lightsaber will likely exist in several forms, including:

- a “hero” prop, that’s seen in close dramatic scenes. Made of realistic materials (metal, plastic, wood), full size, detailed. Something an actor can act with, and respond to. Something that will look good on camera.

- perhaps: further hero props in different states: with the blade extended and retracted, for instance, or “damaged” and “undamaged”.

- perhaps a separate functional hero prop for specific purposes. Imagine a close-up scene where we see the hero dismantling their lightsaber and repairing it: that “dismantalable” prop might be entirely different to the hero prop seen most of the time. (It might even be a partial prop - just the parts you can see in shot, and extra things to make it work also attached)

- stunt props. These look almost identical to hero props, but are usually made entirely of foam rubber, and cast from the hero prop. These are used, as the name suggests, for stunts, where the object might be bashed around, or come into contact with an actor at velocity. They also end up being used for scenes where there’s any rough handling of a prop that might damage the prop itself - being thrown, or dropped, for instance. And frequently rubber props will used for background action, made en masse to give to extras, or used when the hero prop isn’t strictly necessary - when the lightsaber is hanging from the hero’s belt in a scene where it’s not really used, for instance.

- once upon a time, props might have existed as scale models - a miniature lightsaber on a stop-motion puppet, for instance. Scale models tend to be more common for large objects, like vehicles or buildings.

- the modern equivalent of a scale model prop is a CG version of it, to be used in computer-generated visual effects. The prop has a digital double, perhaps made from a 3D scan of the object.

And for some of those props, on a large film shoot, there will be duplicates and spares.

I’ve been thinking about the way one prop exists in multiple forms, and the different roles they all serve, because of all the different kinds of prototype I’ve built so far on Easington.

So far, we’ve made real working code and tools (out of Python/Javascript and Docker/Cloud Run, as well as modern front-end web stuff); an interactive, explorable prototype pocket world; browser-based prototype UIs; clickable prototypes in Figma; and sound and video prototypes made out of samples, synthesis, and video in tools like Ableton Live and Hitfilm.

And all of those prototypes are very, very different.

- Our working code makes a useful point about the current state of things, and lets other people explore the idea. But it’s not in any way production ready, and it falls apart a little if you don’t use it the way it’s intended. That’s our close-up, working-for-one-task prop.

- Our clickable mockups are like stunt props: they look highly convincing, fulfill a hugely important role, are highly robust, but the second you touch one you’ll discover it’s fake.

- Our video and audio demos are a lot like CG: entirely convincing, we can do whatever we want with them, but 100% fake - and not even interactive.

- Our interactive gamelike is a bit like a scale model: it has not just look/feel but logic as well, albeit in a highly constrained and limited way. It makes sense in its own little pocket universe.

Like props, none of these are exactly ‘real’, and none of them work outside the world of the project. But they all serve useful purposes, and like all the different prop lightsabers, they all work together to tell our story. Our scale models, clickable mockups and VFX are made convincing by the fact we’ve also shown genuine, working code, but in a rougher form. And they wouldn’t have the same impact if we didn’t have that “close-up” prototype.

The mockups and scale models help us invent and imagine, and help people using them imagine how the fragile working code might feel in the world, and in the near future.

The other thing I find useful about enumerating the different types of prototype we’re making is it helps me understand what their individual value is - and when they might be considered “done”. Because they’re all fulfilling different roles, they have different completion criteria. Some need to look very good; some need to function correctly; some need to be somewhere in the middle. Understanding what the prototype is trying to achieve in terms of its role, not just its functionality, helps me think about the right material to build it out of, the right level of detail to furnish it with, and when to put it down and move onto a different kind of prototype.

Weeks 389-390

29 June 2020Another couple of weeks with my head down on Easington.

It’s a challenging project to talk about, because of NDAs. But that’s somewhat the point of weeknotes: noting not only what I did, but how, and why. The job isn’t to share the details of what I was up to: it’s to share the parts I felt worth sharing as insights into process, or thought, or for me to remember in the future.

The project has moved to a new section of exploration, which is involving less prototyping of working code, and is more about explaining ideas, possibilities, and illustrating processes. Some of this fortnight’s work has been finding ways to do this that Aren’t Decks.

Slide decks aren’t inherently dreadful. They have a lot to recommend themselves as formats to encapsulate and preserve information. They combine images and words relatively well, they are often terser than long prose documents, and emerge not as a ‘primary’ artefact - “here is my research paper” - but as synthesis. That means they’ve gone through the mill a few times and are condensed, compact versions of an idea.

But. The “distribution deck” is still very different to a presented deck - or even a writer walking you through the deck they will later distribute. Decks are relentlessly linear, which makes conveying ideas that may only ‘click’ after prolonged exposure challenging.

A good narrative reinforces itself when it ‘clicks’ into place. The ‘aha’ moment for a reader shouldn’t be a single moment of discovery; it should also reverberate through each step of a narrative, revealing how they set up this particular ending. That may sound flowery and poetic, but it’s often true of research or design presentations: everything up to the reveal is preparing you for it. Of course, you are then either carried forward by the speaker without a chance to revisit it… or have to flick backwards through the deck.

This is not really how thinking works. The moment something clicks may be different for different readers, dependent on how they think, where their attention is, what ways of transferring knowledge work best for them. Less linear formats let people pace their discoveries, repeat segments in more natural manners than ‘rewinding a tape’, and make re-examining content through new lenses more natural.

In short: what are more interactive ways of delivering R&D that convey the experience of discovery, and a depth of understanding, better?

One thing that came up as we tossed this idea over was Ben Eater and Grant Sanderson’s work on explaining quaternions. Try the first video to see what I mean. It may look like a video, but it is, in fact, a choreographed interactive page. There’s a timeline, and narration, and the narrator has their own cursor… but it turns out they are playing with a webpage, and you can play with it too whilst they talk. They even pause to let you have a fiddle. And you can continue to explore when they’re finished. This is a technically complex way of delivering content (and it’s also technically complex content!), but it radically changes the learning process compared to a static video.

I am not making anything so sophisticated. But I am exploring formats more similar to Hypercard decks or hidden-object Flash games than just a PDF of a slide deck. To that end, I’ve been working with Phaser a fair bit. Ignore the ugly website: Phaser is, effectively, a Flash-like game engine for HTML5, via Canvas and WebGL. For me, it resembles Flixel a lot: an object-y, sprite-y game engine that lets you write ES6.

I mainly fell on this as the easiest way to play with the idea I had. As it turns out, I could probably do a lot of it in the DOM with modern Javascript, but there’s something about using a tool designed for games, rather than documents, that changes how I work a little, and it made building the prototype I was working on relatively swift. The prototype delivers information, but it also was a way of prototyping content delivery. Already we have something that has pacing, “beats”, and can be replayed or re-explored to meaningful results.

I am certain we will end up delivering a deck as well. I’m working on one now. But letting people explore an idea, rather than just reading about it, seems like an avenue worth pursuing further.

Weeks 386-388

15 June 2020Weeknotes are late. It didn’t seem the right time on Monday 1st June to be prattling about my work practice or the landscape technology on the internet. Make some space for more important messages and voices to be heard.

At the beginning of week 386, we presented where we were to the team - the output of some discovery work - and we began to formulate a plan for the next steps. And here, I struggled with premise rejection: looking at the brief, reacting to it based on the discovery, and realising every bone in me wants to pivot away from it. The brief is for X; my (not necessarily correct) instinct is to say “…how about (something that is emphatically not-X)?”

Rather than being the thing I should do, this is a feeling I have to sit with for a bit and own. Sometimes, it’s just down to a bump to my confidence. I am put out by something I have discovered; an unforseen challenge has emerged; whatever has emerged is something I am afraid of. And whatever it is, I just have to work through it.

At the end of the week, I worked through it a bit on my own, and then shared my thoughts with my colleague. Fortunately, we both agreed on where we were. The discovery phase had, in fact, worked: we had learned a lot, and perhaps needed to pivot a little. What the full details of that pivot were, were unclear, but we agreed that it was needed. And we also both had come to similar conclusions about what we had discovered.

There was work to be done, but there was no need to panic entirely.

What then followed over the next few weeks was continuing to work through that. We spoke to a variety of peers through the organisation, shared where we were, and took some of their feedback on for the next chunk. I continued to sketch in paper and code.

And we chose a direction. Not quite the direction that would take us through to the very end, but a direction to take us along the next part of the journey. We’d take the discovery work and continue to explore and polish with it, just as planned, delivering a first phase that was roughly in line with what we’d promised: see it through, and work up our ideas in more detail. Then, we’d veer off: rather than treating the next two phases as incremental on the first, we’d address two other areas that had emerged in a similar manner. The second phase is feeling relatively clear in terms of topic, if nothing else; the third is perhaps vaguer, but might be shaped over the weeks that preceed it.

Phase one needs to wrap up next week, so in week 388, I spent a chunk of time making browser-based prototypes, and thinking about how best to make them self-explanatory. Not in the way a good piece of software is, but in the way a good textbook or exhibit is: the code is our example, but it needs content to situate it alongside it. I’m excited to follow this thread, and explore how to present this kind of work.

And, finally, a nice surprising note. I noticed a small ping from the Slack for Bradnor towards the end of the week, and hurriedly jumped over there to work out what had gone wrong. It turns out: nothing. Rather, a long quiet device gateway had come back online, and the sensors speaking to it had started trickling data into the system automatically and unasked. Exactly how it should have worked, and how it was envisaged. But it’s nice to see something that you had planned to be robust proving its robustness. Happy client, happy me.

Weeks 384-385

24 May 2020Whoops, missed a week. I’ve been intensely head-down on the first phase of Easington.

It always takes me a while to settle into the pace of new projects. I’m eager to start, keen to make headway. It also takes me a while to settle into the pace of new clients: how do they like to work? What are their expectations? What’s the cadence of us meeting, talking, and then me orbiting off to do some work, or sitting down to collaborate.

And that’s all heightened by doing everything remotely from the get-go. (I’m used to working at a distance, or independently, but still relish collaboration, colocation, and thinking in-person when possible).

So I spent the past two weeks “cranking the handle”, so to speak.

But: they’ve been a very rewarding two weeks. I’ve had regular catchups with my Primary Colleague, and have been bouncing up and down the powers of ten that my favourite kind of R&D projects tend to require: from gathering background research, speaking to colleagues, and reading papers through to thinking-out-loud, sketching interactions and ideas, and then, at the far end, pulling one particularly meaty thought together in code as a working, end-to-end prototype.

That code project took up most of the end of week 384 and all of 385. I proposed that we Just Build A Thing with a particular technology so that we could get a visceral grasp on what it could do. We know what it can do in theory, but it’d be good to feel that for ourselves in a real-world environment. That’ll help us and our stakeholders make strategic decisions about the next steps of the project, as well as help us understand the material we’re working with. It’s also a little gift: something to share back to the team for them to use themselves.

A lot of that’s come down to the cardboard-and-tape of web technologies, but that’s an exciting space at the weird edges. I’ve poked a bit at Functions As A Service before - AWS Lamba, Cloud Functions - but in this case have been using Cloud Run to apply that idea to a whole Docker container (for

$REASONS). A whole container, spun up from cold start, in under a second, to do something on demand, die when it’s done, and we only get billed for compute time. There was still also a chunk of code to write, but a lot of the code for this project is really infrastructure: the lines between things on the block diagram. Once the pipes were set up, we were running an interesting workflow largely on on-demand hosting. None of that is the meat of what’s going on, of course, but it’s still been instructive to put to use, rather just to understand impassively.So whilst I was motoring through that, I was also wrapping my head around a new problem domain, one specific problem, a new client, a new project, and a pile of misbehaving code (all written by me, obviously). And so weeknotes slipped, albeit for good reason. Still: good to drop a note at the end of what felt like a good fortnight and say “that felt like a good fortnight”. At the end of it, I had a rewarding, curious, and thought-provoking prototype, ready to demo next week.

Next week we’ll present where we are and go from there.

Week 383

11 May 2020I wrapped up Bradnor this week. I just had a few tweaks left in the code based on client feedback, and a few more to infrastructure - notably, sending deploy notifications from our deploy pipeline through to our error reporting tool.

With that done, the main job was handover. Part of that was to hand over various services to the client’s control; I always feel better knowing that the appropriate person ‘owns’ control of something, even if we’re at free or low-usage tiers.

More importantly, it meant documentation. I tidied up the READMEs lying around the place, and then wrote a long document called What We Did which synthesized the various discussions and interim documents into one clear document that could be referred to in future. I find it easiest to write this for an imaginary future developer coming to the project.

To do that, I assume relatively little specific technical knowledge. So I explain everything we’ve done that either deviates from norms, is domain-specific to the application and product, or that is our ‘configuration’ of existing tools. Beyond that, I link out to documents for open-source tools or products, rather than explaining them myself, but assume familiarity with the core language or framework being used.

That future developer is, of course, easy to imagine because I think about myself returning to a project after a long gap. It’s also there for the client, who is themselves technical: whilst they’ve been making decisions I’ve put to them, this is a reference document for them, too, so they can see how the things we’ve spoken about join up, and have a final ‘map’ of the infrastructure and code we’ve put together.

With the final pieces in place, I shipped the documentation, and the client seemed very pleased with it - and the project as a whole. A satisfying end to this phase of work, and perhaps we’ll work together on the project again in the future.

I got some feedback from the University of Leeds about the courses I wrote for them on Futurelearn. In general, they sounded very pleased: really exciting numbers of sign-ups, and good responses from learners in the comments threads. However, one ‘step’ of a particular course was causing a little confusion. I asked learners to skip over some stages of an external tutorial without quite clarifying why; many of them wanted to do the missing steps, or hadn’t quite worked out how to skip things. They asked me if I could make a short screencast clarifying what to do, and why.

So I spent a few hours this week back in my screencasting tools, making a short film to explain not just what to do, but why I thought this was a good idea.

How do I record screencasts at the moment? I record video using the “record area of screen” function built into Quicktime Player, with the audio from my webcam microphone alongside it. At the same time, I am recording my external condenser microphone into Logic Pro, with a small voice channel set up inside the DAW. I usually have a script or notes laid out on a table in front of me. Then, I hit record in Quicktime and in Logic, and just keep going until I have decent takes of everything I need.

Once that’s done, I fiddle with the voice channel in Logic, to get all the audio up to a nice level, and to remove any background noise. Careful application of the built-in compressor, and occasional Brusfri does the job here. Then, I bounce out the audio to a

wavfile.To edit it, I open all the media up inside Hitfilm, and synchronise the bounced audio from Logic against the ‘guide’ audio from the webcam. Once those are synced, I can remove the webcam audio entirely. Then it’s just a case of walking through the script, chopping and editing, and occasionally deploying small video effects to zoom in on an area, or making small comps to manipulate areas of the screen.

My goal isn’t to get to something completely final. Leeds have an excellent video team who take this and make it sing, adding B-roll, tidying my edits or comps, and adding titles, stings, and transitions, in line with their branding. Instead, I’m trying to give them enough to work with, to make sure the script and technical video are watertight, and to make the intent of the film clear.

Once we’d approved my short script, it (as ever) worked out at around an hour’s work per minute of footage - I’m pretty swift at this now, but never seem to be able to break that rule of thumb!

Finally, I had a quick meeting with the Easington team about that work, and we arranged a kick off meeting for Monday 11th - Week 384.

Week 382

3 May 2020Week 382 was primarily a busy week of writing code on Bradnor.

With last week‘s infrastructure in place, capturing messages from physical devices, we could now spend some time processing that data. That meant abstracting out devices from the locations they represent. After all, a device may go offline, or be replaced by another, but it’d be good to be able to see the history of data from a single location, as well as from a single device.

So we filled out our data model to encompass concepts such as ‘locations’ and ‘devices’ and the relationship between them (as well as logging when they change) Then, I could build another data-processing task, that would copy a ‘device message’ into a list of messages for a specific location if those two items were linked. (This also made it easy to have a ‘disabled’ flag on devices, making it possible to ignore inaccurate messages from possibly defunct devices). In that copying process, we also do a little decoration and transformation of the data, leading to a nice big table of per-location messages, that’s quick to query.

I could then backfill our location messages with the data from last week, as well as importing historical data from CSV files straight into the ‘location messages’ table.

There was also a lot of metadata CRUD to do, to make it easy to update and record information about locations, as well as to leave comments and annotations on many of the objects in our system. Rails made this about as straightforward as it could be to hammer out.

I made sure I had time to work on some belt-and-braces, too: making sure there was appropriate test coverage (especially of key parts like data processors and the end-to-end request cycle of message JSON arriving at a URL), and setting up Rollbar to catch and collate errors.

With all that done, we had raw data coming in, meaningful information being derived from that data, and the beginnings of a fleet-management tool.

Finally, I set up a visualisation pipeline using Grafana. The client had been using Grafana in their previous stack, so it made sense to keep doing so - especially as it was both easy to deploy (thanks to its preference for being deployed as a Docker image) and easy to integrate into Postgres (thanks to the Postgres plugin being supplied as default). With that deployed, we could spelunk away, and a short while hacking away at some SQL got me to a nice dashboard showing data for a set of monitoring locations grouped over time, and navigable with Grafana’s time-series tools (which make it easy to scroll around time and to zoom in and out). As new monitoring locations were added and new historical data dropped in, more lines appeared in the graph. Satisfying to see!

All this meant I largely wrapped up Bradnor this week. There’s just some spit and polish and handover reamining.

It’s not the most sophisticated set of tools, but it is built out of well-known building blocks - application frameworks, databases, protocosls - that are maintainable, testable, and knowable. We’ve simplified the platform infrastrcture a little, but still have a good base to build upon, perhaps to add new features to or to extract to smaller services if necessary. Tests help verify that the code is doing what it should be, and by using known, mature tools, it becomes easier to recruit others to work on it should I be unavailable. I was pleased with the shape of this project - what looked like a quick software build project actually turned in an opportunity to lay some good foundations, re-examine infrastructure, invest in more than just lines of code.

On other fronts, I signed paperwork for Easington, which should kick off in week 383, and take me right through the summer. This is going to be an exciting, challenging R&D project to pour myself into, and I’m looking forward to it.

Week 381

27 April 2020I got my head down on an initial phase of Bradnor this week.

Bradnor is a small project to build out a new back-end for an existing IOT platform. I’m replacing the storage and administration end of affairs: the data gathering and transit layer isn’t changing. The week saw me investigating existing code, evaluating options for replacing parts of it, and deploying the code to newly provisioned infrastructure.

My goal for the end of the week was to get data piped from devices into a database. Once that data was safely piped and stored somewhere, we could then build upon it next week with various visualisation tools and management APIs. But first, we just had to put it somewhere.

I framed the work to the client as “research and development”. Not, perhaps, in the traditional sense of an R&D project - here, the task was known, and the problem-space well defined. But I was still going to have to research options for this greenfield project, sometimes by writing software or testing third-party services, and then present that work back so a path could be chosen. Researching what could be done, and only then developing the thing that needed doing.

That meant the first chunk of work was reading documentation, tinkering with small tests, and a lot of synthesis and writing to present back to the client.

Once we’d agreed on an approach, I started building out the skeleton of an application to get us to parity with the existing infrastructure. That first involved reproducing some data-processing that was originally written in Javascript in Ruby, and then getting live data flowing through my code, and into our datastore.

Finally, I provisioned some suitable hosting. It’d be possible to move everything to a large chain of small cloud-based services - queues, on-demand functions, datastores - but we chose, for now, to use a simple PAAS for the application code, and a managed database instance for our storage.

The data is perhaps the most valuable part of the product, so I felt it was worth not pretending we have time to be our own DBAs, and instead invest in someone else scaling it, managing it, and maintaining backups. A traditional application structure, but one that would do the job for now (especially with sensible background of tasks, thanks to Que).

There’s always a trade-off between expending effort on application code versus application infrastructure: do you spend time arranging an array of services, but ultimately writing less code, or do you invest in code and extract to services later?

I tend to prefer starting with monolithic code, and then extracting to services later. That seemed especially apt here, given the code was already a greenfield rewrite, and as such, I was still wrapping my head around the needs of the domain and the other platforms it was built out of. By keeping the infrastructure relatively simple - and knowable - I hoped the next most obvious changes to make to it would emerge in time.

So I focused on getting data from devices, through the pipeline, and into storage - and getting this deployed by the end of the week. With this solid base was in place, I could spend week 382 focusing on fleet management, data-visualisation, and external API acccess - as well as contemplating a roadmap for future upgrades, and perhaps taking better advantage of cloud services.

By the end of the week, we had code running, data flowing into it before being processed and stored, a deployment pipeline set up, and a hefty amount of documentation of both the problem space being explored, and the work that I had produced. A good week’s work, and a good foundation for week 382.

Week 380

17 April 2020Where were we?

I last wrote notes around Week 374. My

Makefiletells me this is about Week 380. I think that’s correct. If not, well, time has slightly lost some of its meaning in recent weeks, and who’s counting? Week 380 it is.In week 375, I finally finished my career review, and [wrote that up]. That was a prelude to more formally seeking out new projects and clients. Of course, what then happened was COVID-19 made it quite clear that we were not proceeding as normal for a bit. I left the studio and went to work from home, and started trying to investigate new work from there.

Which was not, at that point, particularly fast-moving, and, coupled with the strangeness of lockdown, everything slowed down a little. It was going to be a challenge to write ongoing weeknotes where I invented euphemisms for “not very busy over here”, so I went a little quiet.

When I write down what I’ve been up to since then, though, it’s a decent amount:

- I made voipcards. This was, initially, one of my one-line-gags turned into a small project. It was also a useful tool to prod at learning a bit more Svelte, to practice building PWAs, and to occupy my mind. It turned out quite popular on the internet, which led to me writing about the problems of solutionising, and why I’m still not sure it’s that good a project.

- I released the 2.0.x firmware for 16n, and wrote about that here. This had been on the shelf for a different project - Mayhill - for a while, and I realised it could easily be ported to 16n. The big feature this introduces is configuring your hardware from the browser, over USBMidi. Really pleased with how this turned out, and the community feedback has been great.

- I built up a personal electronics project over a few days, which turned out rather well after some fettling. Fun things I learned here included using naked board substrate as a transparent surface for LEDs to shine through, how to drive 256 LEDs off only 64 channels (four sixteen-LED drivers chained over I2C), and then tweaking the update rate of the LEDs so they don’t flicker on cameras as well as to the naked eye. (That involved moving the update rate to an integer division of 30 frames a second…)

- been spending some time volunteering on Makerveristy’s PPE effort - primarily, on a tangential project to the 3D printing they’ve been launching, where I’ve been lending some support on digital logistics work as well as comms and lightweight project management.

- pitching a bit and having phone-calls and chats.

- quite a lot of business-related admin.

- setting up two new projects. Let’s called them Bradnor and Easington. I signed the contracts for Bradnor, a short project around infrastructure for an IOT project, last week, and we’re nearly there with Easington.

That felt like enough to finally write about. And now I’m back in the saddle, it should be harder to break the chain next week. As ever: onwards.

Week 374

2 March 2020I’ve been freelance for over seven years. In that time, my work has slowly changed a little in its nature, along with my professional interests, and the shape of the wider market. In a quiet week, following just-about wrapping a few projects, it felt time for a more formal review of my work to date.

I loosely followed Matt’s notes on his own career review as a starting point: looking at all my past work, and analysing it. What did the successes have in common? What would I like to do more of? Are there trends? As usual, it’s easy as a lone practitioner to assume that it’s all just gig-after-gig, but that’s not true.

Some focused time with pen an paper let me look clearly at my work. It turns out that over all the wide range of projects, there really are trends, and there is definitely expertise built over time that is worth sharing. So it was definitely useful to take that time to do this properly.

I then performed an extra step after Matt’s original list of questions: I looked at the how of each project. How did those circumstances occur? How did the work happen? Who is involved? Who is committed? That helped me gain a better focus on what circumstances are most successful for me, and how I enable the work to happen.

Having done all that, there was a small piece of writing work to synthesize and explain everything. At which point, I promptly got a cold - not COVID-19, I should add - which knocked out the end of my week. So finishing up the review work would have to begin in week 375. Still, the groundwork was laid this week, and it was a good way to use some time off project work.

Week 373

23 February 2020This week I:

- spent a half day on Monday wrapping up a polish pass on Willsneck with its designer. I then gave a short talk about Willsneck to the direct client on Thursday Morning. Primarily, I talked about the deployment strategy we were using, and why it was a good fit for the project and client. It was good to validate the thinking we’d been doing as a team, and also to communicate that what looked like off-the-cuff decisions did still have a bunch of thought behind them. There were good questions and some nice feedback, so that was satisfying.

- spent Tuesday workshopping with Tim. Early investigations into something, digging into what really interested him (and me) about a particular idea, and then some due diligence and research into prior art.

- continued from my end-to-end breakthrough on Mayhill with further iteration. The firmware feels pretty complete; there’s one error at the level of hardware design that will need resolving before I can confirm that, but otherwise, I’m pleased. I also continued to iterate on the software end of things, adding features to the browser-based editor. I looked into the state of the hardware, but then got a bit downcast as I realised the effort required to take it beyond the workbench. With its own fast microcontroller in, it likely falls under FCC regulation of “unintentional radiators”, which puts another hurdle in front of selling or shipping it. Something to think about, but meant I largely parked thinking about it for a bit.

- finally, snatched a victory from the work on Mayhill by realising there’s nothing to stop it working with 16n, so started working on a version 2.0.0 firmware for that, which should be ready soon. Early feedback from the community has been highly positive, so it’ll be good to ship that to as many people as possible.

Week 372

16 February 2020Lots of work on Willsneck this week, to bring it into land. We wrapped everything up by Thursday PM, and I’ve got a half-day on this left over to finalise the production deployment. Happy with how it’s turned out, I think.

Hallin got merged into master by the client, so I’m looking forward to hearing how they get on with it in production.

I had a good chat with Christian and Miguel from Schema who were in town, having been introduced by a friend. Always nice to meet new people, and interesting to chat to them about designing for data, working with clients on that problem, and the tools used to do it. Thanks to Steve for putting us in touch!

Finally, I spent some of Friday working on Mayhill, and had a really big breakthrough. That breakthrough is what we called end-to-end at Berg: a complete workflow up and running, even if every component is a bit ‘version one’. I began by working on overhauling some of the browser-based UI: making it look a lot tidier, and refactoring a lot of the large

App.sveltefile into smaller components, including a big wrapper component for handling the MIDI context. Then, I wrote the JavaScript to translate edits in the browser to Sysex data. Finally, back in firmware-land, I wrote the firmware code to intercept that stream of data and write it to Flash RAM. There were other small firmware kinks to iron out, too.By the end of the day, I had a system where you could open an editor in your browser, connect a physical object (that I’d both designed the electronics for and coded the firmware on) and see it appear automatically, and reconfigure it in the browser app. The browser app could transmit edits back to the hardware, which would persist those changes. Hugely satisfying: what’s largely left on this is polish, now, and working on the “1.0” hardware (rather than this ‘development board’) that I built for myself.