Posts tagged as hardware

One Thing: Synthstrom Deluge Crash Reports

19 February 2024

I was really taken with this, which @scy (Tim Weber) posted on Mastodon the other day:

The Mastodon post is very clear, so to quickly summarize: the device has crashed; it’s shared its stack trace optically, using the LED button matrix on the device. To share that stack trace with the development team, the end-user only has to post a photograph of it to the development Discord, where an image-analysing bot decodes it and shares the stack trace line references as hexadecimal. Neat, end-to-end stack-tracing, bridging the gap between one hardware device and the people who develop its firmware.

This feature is particular to the “community firmware” available for the Deluge. Designed by Synthstrom Audible in New Zealand, the Deluge is a self-contained music device - a ‘groovebox’ - that was originally released in 2016. In 2023, Synthstrom released the firmware for the device as open source, and a community has emerged working on a parallel firmware to the ‘factory’ firmware, with many features and improvements. Synthstrom can concentrate on their core product, and on maintaining and improving the hardware; the more malleable part of the device, the firmware, is handed to the community to reshape.

Why Share This?

Firstly, as the comments in @scy’s original post point out: this is very cool. The crash itself goes from being an irritation to a shareable artefact - look at what this thing can do! I am pretty sure that if I owned one of these and it crashed, I would share a similar post with friends.

I particularly like it, though, for its transparency. When devices with embedded firmware fail, it can be difficult to work out why, or what has happened - and if the device has no network connection (usually completely understandably!) there’s rarely a way of sharing that with the developer. By contrast, this failure state makes it clear that something has failed and it’s OK to share this fact with the user - because the developer is also asking to be told.

The Deluge makes this reporting even more challenging in one aspect: whilst the Deluge now ships with an OLED screen, original Deluges only have the RGB matrix and a small seven-segment numerical display for output. There’s no way of displaying any text on these earlier models!

But by encoding the stack trace as four 32-bit binary numbers, it can be displayed on the LED matrix itself. Sharing the stack trace is left to the owner - and can be done with a ubiquitous phone camera. Finally, decoding the stack trace is the responsibility of the bot in the Discord channel - no need to involve the end-user in that. The part of this process that runs on the embedded firmware is the least complex part; networking is left up to the user’s phone, and the more computationally complex decoding is left to server-side code.
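
As a rough illustration of the idea, here is a minimal TypeScript sketch that turns four 32-bit addresses into rows of on/off cells and recovers them again as hexadecimal. The layout (one row of 32 cells per number, most significant bit first), the function names, and the example addresses are all assumptions made for the sketch; the community firmware's real layout may well differ.

```typescript
// Hypothetical layout: one row of 32 on/off cells per address, MSB first.
// This is NOT taken from the Deluge firmware; it only illustrates the idea.

function encodeAddress(address: number): boolean[] {
  const bits: boolean[] = [];
  for (let bit = 31; bit >= 0; bit--) {
    // >>> treats the value as an unsigned 32-bit integer while shifting
    bits.push(((address >>> bit) & 1) === 1);
  }
  return bits;
}

function decodeRow(row: boolean[]): number {
  let value = 0;
  for (const lit of row) {
    // Multiply instead of shifting so the result never goes negative
    value = value * 2 + (lit ? 1 : 0);
  }
  return value;
}

function toHex(address: number): string {
  const hex = address.toString(16).toUpperCase();
  return "0x" + ("00000000" + hex).slice(-8);
}

// Four made-up addresses standing in for a stack trace
const trace: number[] = [0x2000a1b4, 0x20012f00, 0x0000beef, 0xffffffff];
const rows: boolean[][] = trace.map(encodeAddress);
const decoded: string[] = rows.map(decodeRow).map(toHex);
```

The encoding half is the only part that would need to live on the device; the decoding half corresponds to what the bot does once it has worked out which cells are lit in the photograph.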

This feature is also an appropriate choice for the product. Sharing the stack trace to Discord might be a little too involved (or “nerdy”) for some devices, but for an enthusiast product like the Deluge, with an involved and supportive community, it feels like a great choice.

And it gives the user agency in the emotional journey of a crash. Most of us have clicked “send” on stack traces going to Apple, Microsoft, or Adobe, and then perhaps just given up on the idea that anyone ever sees our crash logs. But here the user is an active participant in the journey: if they choose to share the stack trace, they have visibility on the fact it’s been seen and decoded in the Discord channel. And now that they’ve shared it to a social space, they have created an artefact to hang future conversation about the crash off - and where they may even learn about future fixes to it.

The feature is neat, and a talking point - but it’s also interesting to see how a moment of software failure can be captured without a network connection on the device, shared, and ultimately socialised. (It also beats the IBM Power On Self-Test Beeps by a long stretch…)

One Thing is an occasional series where I write in depth about a recent link, and what I find specifically interesting about it.

Worknotes: end of 2023 wrap-up

8 January 2024

2023 was frustratingly fallow, despite all best efforts. Needless to say, not just for me - the technology market has seen lay-offs and funding cutbacks and everything has been squeezed. But after a quiet few months, the end of 2023 got very busy, and there have been a few different projects going on that I wanted to acknowledge. It looks like these will largely be drawing to a close in early 2024, so I’ll be putting feelers out around February. I reckon. In the meantime, several things going on to close out the year, all at various stages:

Lunar Design Project

After working on the LED interaction test harness for Lunar, I kicked off another slightly larger project with them in the late autumn. It’s a little more of an exploratory design project - looking at ways of representing live data - and that’s going to roll into early 2024. More to say when we have something to show - but for now it’s a real sweetspot for me of code, data, design, and sound.

Web development project “C”

More work in progress here: a contract working on an existing product to deliver some features and integrations for early 2024. Returning to the Ruby landscape for a bit, with a great little team, and a nice solid codebase to build on. Lots of nitty-gritty around integrating with other platforms’ APIs. This is likely to wrap up in early 2024.

This and the Lunar project were primary focuses for November and December 2023.

Nothing Prototyping Project

Kicking off in December, and running into January 2024: a small prototyping project with the folks at Nothing.

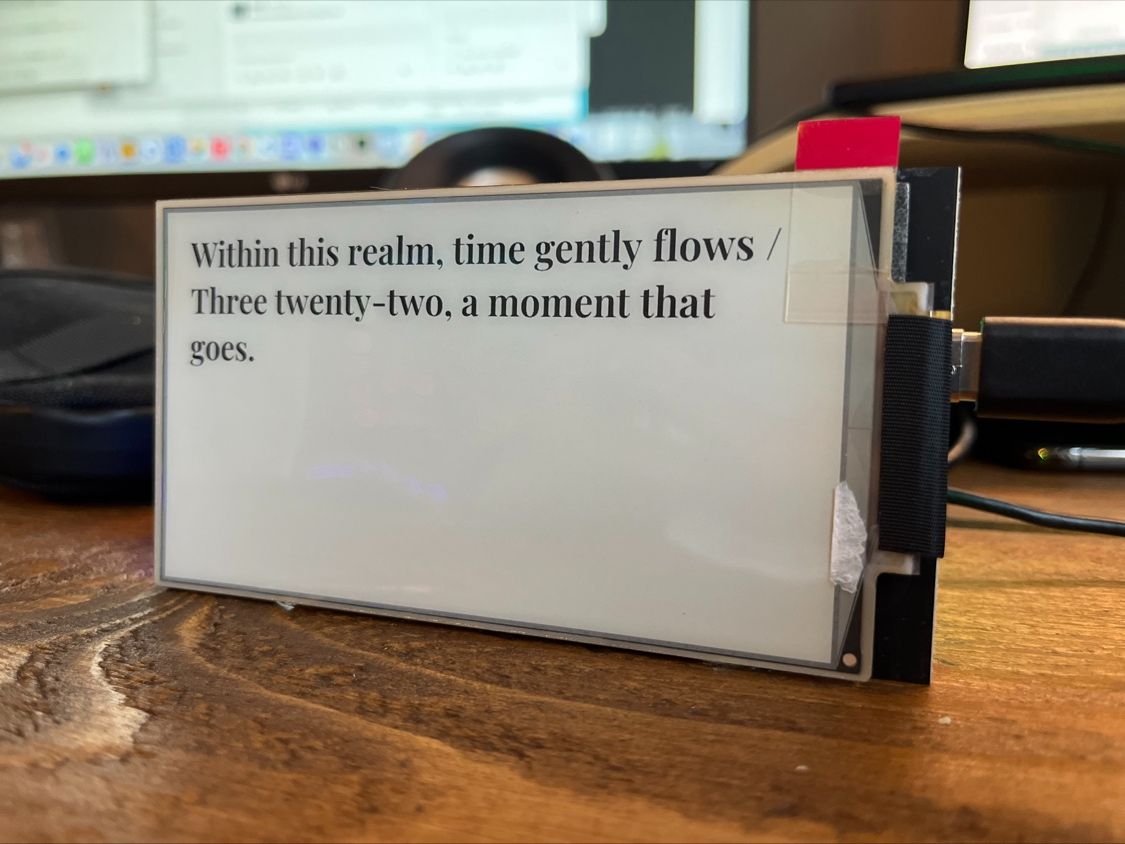

AI Clock

A short piece of work for Matt Webb to get the AI Clock firmware I worked on in the summer up and running on the production hardware platform. The nuances of individual e-ink devices and drivers made for the bulk of the work here. Matt shared the above image of the code running on his hardware at the end of the year; still after all these years of doing this sort of thing, it’s always satisfying to see somebody else pushing your code live successfully.

Four projects made the end of 2023 a real sprint to the finish; the winter break was very welcome. These projects should be coming into land in the coming weeks, which means it’s time to start looking at what 2024 really looks like come February.

New case study: Lunar Energy LED interactions

31 August 2023

I’ve shared a new case study of the work I did this summer with Lunar Energy (see previous worknotes).

As I explain at length over at the post, it’s a great example of the kind of work I relish, which necessarily straddles design and engineering. It’s a project that goes up and down the stack, modern web front-ends talking to custom hardware, and all in the service of interaction design. Lunar were a lovely team to work with; thanks to Matt Jones and his crew.

-

I recently released the 2.0.0 firmware for 16n, the open-source fader controller I maintain and support. This update, though substantial, is focused on one thing: improving the end-user experience around customisation. It allows users to customise the settings of their 16n using only a web browser. Not only that, but their settings will now persist between firmware updates.

I wanted to unpick the interaction going on here - why I built it and, in particular, how it works - because I find it highly interesting and more than a little strange: tight coupling between a computer browser and a hardware device.

To demonstrate, here’s a video where I explain the update for new users:

Background

16n is designed around a 32-bit microcontroller - Paul Stoffregen’s Teensy - which can be programmed via the popular Arduino IDE. Prior to version 2.0.0, all configuration took place inside a single file that the end-user could edit. To alter how their device behaved, they had to edit some settings inside `config.h`, and then recompile the firmware and “flash” it onto the device.

This is a complex demand to make of a user. And whilst the 16n was always envisaged as a DIY device, many people attracted to it who might not have been able to make their own have, entirely understandably, bought one from other makers. As the project took off, “compile your own firmware” was a less attractive solution - not to mention one that was harder to support.

It had long seemed to me that the configuration of the device should be quite a straightforward task for the user; certainly not something that required recompiling the firmware to change. I’d been planning such a move for a successor device to 16n, and whilst that project was a bit stalled, the editor workflow was solid and fully working. I realised that I could backport the editor experience to the current device - and thus the foundation for 2.0.0 was laid.

MIDI in the browser

The browser communicates with 16n using MIDI, a relatively ancient serial protocol designed for interconnecting electronic musical instruments (on which more later). And it does this thanks to WebMIDI, a draft specification for a browser API for sending and receiving MIDI. Currently, it’s a bit patchily supported - but there’s good support inside Chrome, as well as Edge and Opera (so it’s not just a single-browser product). And it’s also viable inside Electron, making a cross-platform, standalone editor app possible.
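
To give a flavour of the browser side, here is a minimal TypeScript sketch of locating a port by name. The `NamedPort` type and `findPortByName` helper are hypothetical names introduced for illustration, not the editor's actual code; the commented-out calls (`navigator.requestMIDIAccess`, `access.outputs`, `onstatechange`) are part of the Web MIDI API and only work in a browser that supports it.

```typescript
// Minimal structural type so the sketch type-checks outside a browser.
interface NamedPort {
  name: string | null;
}

// Return the first port whose name matches, or undefined if none is connected.
function findPortByName<T extends NamedPort>(ports: T[], wanted: string): T | undefined {
  for (const port of ports) {
    if (port.name === wanted) {
      return port;
    }
  }
  return undefined;
}

// In a browser with Web MIDI support, this might be used roughly like so:
//
//   const access = await navigator.requestMIDIAccess({ sysex: true });
//   const output = findPortByName(Array.from(access.outputs.values()), "16n");
//   access.onstatechange = () => { /* re-scan ports on connect or disconnect */ };

// Stand-in ports so the helper can be exercised without any hardware
const fakePorts: NamedPort[] = [{ name: "IAC Driver Bus 1" }, { name: "16n" }];
const found = findPortByName(fakePorts, "16n");
const missing = findPortByName(fakePorts, "Not connected");
```

Requesting access with `sysex: true` matters here, because browsers treat System Exclusive as a separate permission from ordinary note and controller messages.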

Before I can explain what’s going on, it’s worth quickly reviewing what MIDI is and what it supports.

MIDI: a crash course

MIDI - Musical Instrument Digital Interface - describes several things:

- a protocol for a serial communication format

- an electronic spec for that serial communication

- a set of connectors (5-pin DIN) and how they’re wired to support that.

It is old. The first MIDI instruments were produced around 1981-1982, and their implementation still works today. It is somewhat simple, but really robust and reliable: it is used in thousands of studios, live shows and bedrooms around the world, to make electronic instruments talk to one another. The component with the most longevity is the protocol itself, which is now often transmitted over a USB connection (as opposed to the MIDI-specific DIN-connections of yore). “MIDI” has for many younger musicians just come to describe note-data (as opposed to audio-data) in electronic music programs, or what looks like a USB connection; five-pin DIN cables are a distant memory.

The serial protocol consists of a set of messages that get sent between MIDI devices. There are relatively few messages. They fall into a few categories, the most obvious of which are:

- timing messages, to indicate the tempo or pulse of a piece of music (a bit like a metronome), and whether instruments should be started, stopped, or reset to the beginning.

- note data: when a note is ‘on’ or ‘off’, and if it’s on, what velocity it’s been played at (the spec is designed around keyboard instruments)

- other non-note controls that are also relevant - whether a sustain pedal is pushed, or a pitch wheel bent, or if one of 127 “continuous controllers” - essentially, knobs or sliders - has been set to a particular value.

16n itself transmits “continuous controllers” - CCs - from each of its sliders, for instance.

There’s also a separate category of message called System Exclusive, which describes messages that an instrument manufacturer implements in their own way at the device end. One of the most common uses for ‘SysEx’ data was transmitting and receiving the “patch data” of a synthesizer - all the settings to define a specific sound. SysEx could be used to back up sound programs, or transmit them to a device, and this meant musicians could keep many more sounds to hand than their instrument could store. SysEx was also used by early samplers as a slow way of transmitting sample data - you could send an audio file from a computer, slowly, down a MIDI cable. And it could also be used to enable computer-based “editors”, whereby a patch could be edited on a large screen, and then transmitted to the device as it was edited.

Each SysEx message begins with a few bytes for a manufacturer to identify themselves (so as not to send it to any other devices on the MIDI chain), a byte to define a message number, and then a stream of data. What that data is is up to the manufacturer - and usually described somewhere in the back pages of the manual.
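
A minimal TypeScript sketch of that framing follows. The start and end bytes (`0xF0` and `0xF7`) and the 7-bit limit on payload bytes come from the MIDI specification. Everything else is an assumption for illustration: the function names are hypothetical, the message ID and data values are made up, and the manufacturer ID used is `0x7D`, which the spec sets aside for non-commercial use, rather than whatever 16n actually sends.

```typescript
const SYSEX_START = 0xf0; // status byte that opens every SysEx message
const SYSEX_END = 0xf7;   // status byte that closes it

// Placeholder ID: 0x7D is reserved by the MIDI spec for non-commercial use.
const EXAMPLE_MANUFACTURER_ID: number[] = [0x7d];

interface ParsedSysEx {
  messageId: number;
  data: number[];
}

function buildSysEx(manufacturerId: number[], messageId: number, data: number[]): number[] {
  const body: number[] = [...manufacturerId, messageId, ...data];
  for (const byte of body) {
    // Everything between the start and end bytes must fit in 7 bits
    if ((byte & 0x7f) !== byte) {
      throw new RangeError("SysEx payload bytes must be 0-127, got " + byte);
    }
  }
  return [SYSEX_START, ...body, SYSEX_END];
}

function parseSysEx(message: number[], manufacturerId: number[]): ParsedSysEx | null {
  // Shortest valid message: start byte, manufacturer ID, message ID, end byte
  if (message.length < manufacturerId.length + 3) return null;
  if (message[0] !== SYSEX_START || message[message.length - 1] !== SYSEX_END) return null;
  for (let i = 0; i < manufacturerId.length; i++) {
    // A different manufacturer ID means the message is meant for another device
    if (message[i + 1] !== manufacturerId[i]) return null;
  }
  const [messageId, ...data] = message.slice(manufacturerId.length + 1, message.length - 1);
  if (messageId === undefined) return null;
  return { messageId, data };
}

// Made-up message: ID 0x01 carrying two data bytes
const exampleMessage: number[] = buildSysEx(EXAMPLE_MANUFACTURER_ID, 0x01, [0x10, 0x20]);
const parsedExample = parseSysEx(exampleMessage, EXAMPLE_MANUFACTURER_ID);
```

Because payload bytes are limited to 7 bits, any 8-bit values a device wants to send have to be split or re-encoded before they go inside the message.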

Like the DX7 editors of the past, the 16n editor uses MIDI SysEx to send data to and from the hardware.

How the 16n editor uses SysEx

With all the background laid out, it’s perhaps easiest just to describe the flow of data in the 16n editor’s code.

- When a user opens the editor in a web browser, the browser waits for a MIDI interface called 16n to connect. It hears about this via a callback from the WebMIDI API.

- When it finds one, it starts polling that connection with a message meaning *give me your config!*

- If 16n sees a message aimed at a 16n requesting a config, it takes its current configuration, and emits it as a stream of hex inside a SysEx message: *here is my config*.

- The editor app can then stop polling, and instantiate a `Configuration` object from that data, which in turn will spin up the reactive Svelte UI.

- Once a user has made some edits in the browser, they choose to transmit the config to the device: this again transmits over SysEx, saying to the device: *here is a new config for you*.

- The 16n receives the config, stores it in its EEPROM, and then sets itself to use that config.

- If a 16n interface disconnects, the WebMIDI API sends another callback, and the configuration interface dismantles itself.

Each message in italics is a different message ID, but that’s the limit of the SysEx vocabulary for 16n: transmitting current state, receiving a new one, and being prompted to send current state.
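
The three-message vocabulary can be sketched in TypeScript as a small simulation. This is not the 16n code: the message ID values, the `FakeDevice` class, and the `fetchConfig` helper are hypothetical, and the SysEx framing is left out so that only the request and reply flow is visible.

```typescript
// Hypothetical message IDs; the real values are not given here.
const REQUEST_CONFIG = 0x01; // editor -> device: "give me your config!"
const CONFIG_DUMP = 0x02;    // device -> editor: "here is my config"
const SET_CONFIG = 0x03;     // editor -> device: "here is a new config for you"

interface Message {
  id: number;
  data: number[];
}

// Stand-in for the firmware side: holds a config and answers messages.
class FakeDevice {
  private stored: number[];

  constructor(initial: number[]) {
    this.stored = initial.slice();
  }

  handle(message: Message): Message | null {
    if (message.id === REQUEST_CONFIG) {
      return { id: CONFIG_DUMP, data: this.stored.slice() };
    }
    if (message.id === SET_CONFIG) {
      // Real firmware would also write this to EEPROM before applying it
      this.stored = message.data.slice();
      return null;
    }
    return null; // anything else is ignored
  }
}

// Stand-in for the editor side: poll until a config dump comes back.
function fetchConfig(target: FakeDevice, maxPolls: number): number[] | null {
  for (let attempt = 0; attempt < maxPolls; attempt++) {
    const reply = target.handle({ id: REQUEST_CONFIG, data: [] });
    if (reply !== null && reply.id === CONFIG_DUMP) {
      return reply.data; // got a config, so polling can stop
    }
  }
  return null;
}

const device = new FakeDevice([1, 32, 2, 33]);
const configBefore = fetchConfig(device, 3);
device.handle({ id: SET_CONFIG, data: [5, 40, 6, 41] });
const configAfter = fetchConfig(device, 3);
```

In the real editor the replies arrive asynchronously via Web MIDI callbacks rather than as return values, but the shape of the exchange is the same.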

With all that in place, the changes to the firmware are relatively few. Firstly, it now checks if the EEPROM looks blank, at first boot, and if it is, 16n will itself store a “default” configuration into EEPROM before reading it. Then, there’s just some extra code to listen for SysEx data, process it and store it on arrival, and to transmit it when asked for.
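
The first-boot check might look something like the following, modelled here in TypeScript rather than in the firmware’s own C++. The sketch assumes that an erased EEPROM reads back as `0xFF` in every cell, which is typical but is not stated above; the default values, the config length, and the function names are likewise made up for illustration.

```typescript
const BLANK_BYTE = 0xff; // assumption: erased EEPROM cells read 0xFF
const DEFAULT_CONFIG: number[] = [1, 32, 1, 33]; // made-up default values

// True if the region where the config lives has never been written.
function looksBlank(eeprom: Uint8Array, length: number): boolean {
  for (let i = 0; i < length; i++) {
    if (eeprom[i] !== BLANK_BYTE) return false;
  }
  return true;
}

// Store defaults on a blank EEPROM, then read the config back from it.
function loadConfigAtBoot(eeprom: Uint8Array): number[] {
  if (looksBlank(eeprom, DEFAULT_CONFIG.length)) {
    eeprom.set(DEFAULT_CONFIG, 0);
  }
  const config: number[] = [];
  eeprom.subarray(0, DEFAULT_CONFIG.length).forEach((byte) => {
    config.push(byte);
  });
  return config;
}

// A never-written EEPROM and one that already holds a user's config
const freshEeprom = new Uint8Array([255, 255, 255, 255, 255, 255]);
const usedEeprom = new Uint8Array([9, 40, 9, 41, 255, 255]);
const freshConfig = loadConfigAtBoot(freshEeprom);
const usedConfig = loadConfigAtBoot(usedEeprom);
```

Reading the config back from EEPROM in both cases keeps a single code path: after the first boot, the device always behaves as though a user had stored the config.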

What this means for users

Initially, this is a “breaking change”: at first install, a 16n will go back to a ‘default’ configuration. Except it’s then very quick to re-edit the config in a browser to what it should be, and transmit it. And from that point on, any configuration will persist between firmware upgrades. Also, users can store JSON backups of their configuration(s) on their computer, making it easy to swap between configs, or as a safeguard against user error.

The new firmware also makes it much easier to distribute the firmware as a binary, which is easier to install: run the loader program, drag the hex file on, and that’s that. No compilation. The source code is still available if they want it, but there’s no need to install the Arduino IDE to modify a 16n’s settings.

As well as the settings for what MIDI channel and CC each fader transmits, the editor lets users set configuration flags such as whether the LED should blink on data transmission, or how I2C should be configured. We’ve still got some bytes free to play with there, so future configuration options that should be user-settable can also be extracted like this.

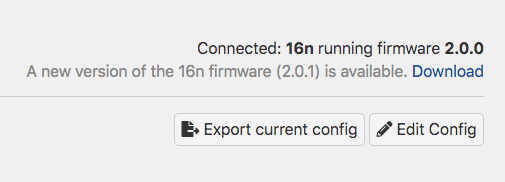

The 16n editor showing an available firmware update

Finally, because I store the firmware version inside the firmware itself, the device can communicate this to the editor, and thus the editor can alert the user when there’s a new firmware to download, which should help everybody stay up-to-date: particularly important with a device that has such diverse users and manufacturers.
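
A version check of this kind can be sketched in a few lines of TypeScript. The sketch assumes versions are dotted numbers like the `2.0.0` mentioned earlier; the function names and the older version number in the example are hypothetical.

```typescript
// Split "2.0.0" into [2, 0, 0].
function parseVersion(version: string): number[] {
  return version.split(".").map((part) => parseInt(part, 10));
}

// Negative if a is older than b, positive if newer, zero if equal.
function compareVersions(a: string, b: string): number {
  const left = parseVersion(a);
  const right = parseVersion(b);
  const length = Math.max(left.length, right.length);
  for (let i = 0; i < length; i++) {
    // Treat missing parts as zero, so "2.0" equals "2.0.0"
    const l = left[i] ?? 0;
    const r = right[i] ?? 0;
    if (l !== r) return l - r;
  }
  return 0;
}

// True when the device reports an older version than the latest known one.
function updateAvailable(deviceVersion: string, latestVersion: string): boolean {
  return compareVersions(deviceVersion, latestVersion) < 0;
}

// Made-up example: a device on an older firmware, and one already up to date
const olderNeedsUpdate = updateAvailable("1.32.0", "2.0.0");
const currentNeedsUpdate = updateAvailable("2.0.0", "2.0.0");
```

Comparing part by part as numbers, rather than comparing the strings directly, avoids the classic mistake of treating `2.10.0` as older than `2.9.0`.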

None of this is a particularly new pattern. Novation, for instance, are using this to transfer patch settings, samples, and even firmware updates to their recent synthesizers via their Components tool. It’s a very user-friendly way of approaching this task, though: it’s reliable, uses a tool that’s easy to hand, and because the browser can read configurations from the physical device, you can adjust your settings on any computer to hand, not just your own.

I also think that by making configuration an easier task, more people will be willing to play with or explore configuration options for their device.

The point of this post isn’t just to talk about the code and technology that makes this interaction possible, though; it’s also to look at what it feels like to use, the benefits for users, and interactions that might be possible. It’s an unusual interaction - perhaps jarring, or surprising - to configure an object by firing up a web browser and “speaking” directly to it. No wifi to set up, no hub application, no shared password. A cable between two objects, and then a tool - the browser - that usually talks to the wireless world, not the wired. WebUSB also enables similar weird, tangible interactions, in a similar way to the one I’ve performed here, but with a more flexible API.

I think this is an unusual, interesting and empowering interaction, and certainly something I’ll consider for any future connected devices: making configuration as simple and welcoming as possible, using tools a user already understands.