Weeks 386-388

15 June 2020

Weeknotes are late. It didn’t seem the right time on Monday 1st June to be prattling about my work practice or the landscape of technology on the internet. Make some space for more important messages and voices to be heard.

At the beginning of week 386, we presented where we were to the team - the output of some discovery work - and we began to formulate a plan for the next steps. And here, I struggled with premise rejection: looking at the brief, reacting to it based on the discovery, and realising every bone in me wants to pivot away from it. The brief is for X; my (not necessarily correct) instinct is to say “…how about (something that is emphatically not-X)?”

Rather than being the thing I should do, this is a feeling I have to sit with for a bit and own. Sometimes, it’s just down to a bump to my confidence. I am put out by something I have discovered; an unforeseen challenge has emerged; whatever has emerged is something I am afraid of. And whatever it is, I just have to work through it.

At the end of the week, I worked through it a bit on my own, and then shared my thoughts with my colleague. Fortunately, we both agreed on where we were. The discovery phase had, in fact, worked: we had learned a lot, and perhaps needed to pivot a little. The full details of that pivot were unclear, but we agreed that it was needed. And we also both had come to similar conclusions about what we had discovered.

There was work to be done, but there was no need to panic entirely.

What then followed over the next few weeks was continuing to work through that. We spoke to a variety of peers through the organisation, shared where we were, and took some of their feedback on for the next chunk. I continued to sketch in paper and code.

And we chose a direction. Not quite the direction that would take us through to the very end, but a direction to take us along the next part of the journey. We’d take the discovery work and continue to explore and polish with it, just as planned, delivering a first phase that was roughly in line with what we’d promised: see it through, and work up our ideas in more detail. Then, we’d veer off: rather than treating the next two phases as incremental on the first, we’d address two other areas that had emerged in a similar manner. The second phase is feeling relatively clear in terms of topic, if nothing else; the third is perhaps vaguer, but might be shaped over the weeks that precede it.

Phase one needs to wrap up next week, so in week 388, I spent a chunk of time making browser-based prototypes, and thinking about how best to make them self-explanatory. Not in the way a good piece of software is, but in the way a good textbook or exhibit is: the code is our example, but it needs content to situate it alongside it. I’m excited to follow this thread, and explore how to present this kind of work.

And, finally, a nice surprising note. I noticed a small ping from the Slack for Bradnor towards the end of the week, and hurriedly jumped over there to work out what had gone wrong. It turns out: nothing. Rather, a long quiet device gateway had come back online, and the sensors speaking to it had started trickling data into the system automatically and unasked. Exactly how it should have worked, and how it was envisaged. But it’s nice to see something that you had planned to be robust proving its robustness. Happy client, happy me.

Weeks 384-385

24 May 2020

Whoops, missed a week. I’ve been intensely head-down on the first phase of Easington.

It always takes me a while to settle into the pace of new projects. I’m eager to start, keen to make headway. It also takes me a while to settle into the pace of new clients: how do they like to work? What are their expectations? What’s the cadence of us meeting, talking, and then me orbiting off to do some work, or sitting down to collaborate?

And that’s all heightened by doing everything remotely from the get-go. (I’m used to working at a distance, or independently, but still relish collaboration, colocation, and thinking in-person when possible).

So I spent the past two weeks “cranking the handle”, so to speak.

But: they’ve been a very rewarding two weeks. I’ve had regular catchups with my Primary Colleague, and have been bouncing up and down the powers of ten that my favourite kind of R&D projects tend to require: from gathering background research, speaking to colleagues, and reading papers through to thinking-out-loud, sketching interactions and ideas, and then, at the far end, pulling one particularly meaty thought together in code as a working, end-to-end prototype.

That code project took up most of the end of week 384 and all of 385. I proposed that we Just Build A Thing with a particular technology so that we could get a visceral grasp on what it could do. We know what it can do in theory, but it’d be good to feel that for ourselves in a real-world environment. That’ll help us and our stakeholders make strategic decisions about the next steps of the project, as well as help us understand the material we’re working with. It’s also a little gift: something to share back to the team for them to use themselves.

A lot of that’s come down to the cardboard-and-tape of web technologies, but that’s an exciting space at the weird edges. I’ve poked a bit at Functions As A Service before - AWS Lambda, Cloud Functions - but in this case have been using Cloud Run to apply that idea to a whole Docker container (for $REASONS). A whole container, spun up from cold start, in under a second, to do something on demand, die when it’s done, and we only get billed for compute time. There was still also a chunk of code to write, but a lot of the code for this project is really infrastructure: the lines between things on the block diagram. Once the pipes were set up, we were running an interesting workflow largely on on-demand hosting. None of that is the meat of what’s going on, of course, but it’s still been instructive to put to use, rather than just to understand impassively.

So whilst I was motoring through that, I was also wrapping my head around a new problem domain, one specific problem, a new client, a new project, and a pile of misbehaving code (all written by me, obviously). And so weeknotes slipped, albeit for good reason. Still: good to drop a note at the end of what felt like a good fortnight and say “that felt like a good fortnight”. At the end of it, I had a rewarding, curious, and thought-provoking prototype, ready to demo next week.

Next week we’ll present where we are and go from there.

Week 383

11 May 2020

I wrapped up Bradnor this week. I just had a few tweaks left in the code based on client feedback, and a few more to infrastructure - notably, sending deploy notifications from our deploy pipeline through to our error reporting tool.

With that done, the main job was handover. Part of that was to hand over various services to the client’s control; I always feel better knowing that the appropriate person ‘owns’ control of something, even if we’re at free or low-usage tiers.

More importantly, it meant documentation. I tidied up the READMEs lying around the place, and then wrote a long document called What We Did which synthesized the various discussions and interim documents into one clear document that could be referred to in future. I find it easiest to write this for an imaginary future developer coming to the project.

To do that, I assume relatively little specific technical knowledge. So I explain everything we’ve done that either deviates from norms, is domain-specific to the application and product, or that is our ‘configuration’ of existing tools. Beyond that, I link out to documents for open-source tools or products, rather than explaining them myself, but assume familiarity with the core language or framework being used.

That future developer is, of course, easy to imagine because I think about myself returning to a project after a long gap. It’s also there for the client, who is themselves technical: whilst they’ve been making decisions I’ve put to them, this is a reference document for them, too, so they can see how the things we’ve spoken about join up, and have a final ‘map’ of the infrastructure and code we’ve put together.

With the final pieces in place, I shipped the documentation, and the client seemed very pleased with it - and the project as a whole. A satisfying end to this phase of work, and perhaps we’ll work together on the project again in the future.

I got some feedback from the University of Leeds about the courses I wrote for them on Futurelearn. In general, they sounded very pleased: really exciting numbers of sign-ups, and good responses from learners in the comments threads. However, one ‘step’ of a particular course was causing a little confusion. I asked learners to skip over some stages of an external tutorial without quite clarifying why; many of them wanted to do the missing steps, or hadn’t quite worked out how to skip things. They asked me if I could make a short screencast clarifying what to do, and why.

So I spent a few hours this week back in my screencasting tools, making a short film to explain not just what to do, but why I thought this was a good idea.

How do I record screencasts at the moment? I record video using the “record area of screen” function built into Quicktime Player, with the audio from my webcam microphone alongside it. At the same time, I am recording my external condenser microphone into Logic Pro, with a small voice channel set up inside the DAW. I usually have a script or notes laid out on a table in front of me. Then, I hit record in Quicktime and in Logic, and just keep going until I have decent takes of everything I need.

Once that’s done, I fiddle with the voice channel in Logic, to get all the audio up to a nice level, and to remove any background noise. Careful application of the built-in compressor, and occasional Brusfri does the job here. Then, I bounce out the audio to a wav file.

To edit it, I open all the media up inside Hitfilm, and synchronise the bounced audio from Logic against the ‘guide’ audio from the webcam. Once those are synced, I can remove the webcam audio entirely. Then it’s just a case of walking through the script, chopping and editing, and occasionally deploying small video effects to zoom in on an area, or making small comps to manipulate areas of the screen.

My goal isn’t to get to something completely final. Leeds have an excellent video team who take this and make it sing, adding B-roll, tidying my edits or comps, and adding titles, stings, and transitions, in line with their branding. Instead, I’m trying to give them enough to work with, to make sure the script and technical video are watertight, and to make the intent of the film clear.

Once we’d approved my short script, it (as ever) worked out at around an hour’s work per minute of footage - I’m pretty swift at this now, but never seem to be able to break that rule of thumb!

Finally, I had a quick meeting with the Easington team about that work, and we arranged a kick off meeting for Monday 11th - Week 384.

Week 382

3 May 2020

Week 382 was primarily a busy week of writing code on Bradnor.

With last week’s infrastructure in place, capturing messages from physical devices, we could now spend some time processing that data. That meant abstracting out devices from the locations they represent. After all, a device may go offline, or be replaced by another, but it’d be good to be able to see the history of data from a single location, as well as from a single device.

So we filled out our data model to encompass concepts such as ‘locations’ and ‘devices’ and the relationship between them (as well as logging when they change). Then, I could build another data-processing task that would copy a ‘device message’ into a list of messages for a specific location if those two items were linked. (This also made it easy to have a ‘disabled’ flag on devices, making it possible to ignore inaccurate messages from possibly defunct devices.) In that copying process, we also do a little decoration and transformation of the data, leading to a nice big table of per-location messages that’s quick to query.
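As a rough illustration of that copying step, here is a minimal sketch. The real project was built in Rails; this uses Python purely for illustration, and every name in it (`Device`, `LocationMessage`, `copy_to_location`, the `raw` payload key and the divide-by-ten transformation) is an assumption rather than anything from the actual codebase.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Device:
    device_id: str
    location_id: Optional[str] = None  # None means "not linked to a location"
    disabled: bool = False             # hypothetical name for the 'disabled' flag


@dataclass
class DeviceMessage:
    device_id: str
    received_at: datetime
    payload: dict


@dataclass
class LocationMessage:
    location_id: str
    device_id: str
    received_at: datetime
    reading: float


def copy_to_location(message, devices):
    """Return a LocationMessage for a linked, enabled device, else None."""
    device = devices.get(message.device_id)
    if device is None or device.disabled or device.location_id is None:
        return None
    # "Decoration and transformation": a made-up unit conversion as a stand-in.
    reading = message.payload["raw"] / 10.0
    return LocationMessage(
        device.location_id, device.device_id, message.received_at, reading
    )


devices = {
    "dev-1": Device("dev-1", location_id="loc-A"),
    "dev-2": Device("dev-2", location_id="loc-A", disabled=True),
    "dev-3": Device("dev-3"),  # not linked to any location
}
now = datetime(2020, 5, 1, 12, 0)
copied = copy_to_location(DeviceMessage("dev-1", now, {"raw": 215}), devices)
```

Because the link lives on the device record, swapping a device at a location only changes which device's messages get copied; the location's history stays in one table.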

I could then backfill our location messages with the data from last week, as well as importing historical data from CSV files straight into the ‘location messages’ table.

There was also a lot of metadata CRUD to do, to make it easy to update and record information about locations, as well as to leave comments and annotations on many of the objects in our system. Rails made this about as straightforward as it could be to hammer out.

I made sure I had time to work on some belt-and-braces, too: making sure there was appropriate test coverage (especially of key parts like data processors and the end-to-end request cycle of message JSON arriving at a URL), and setting up Rollbar to catch and collate errors.

With all that done, we had raw data coming in, meaningful information being derived from that data, and the beginnings of a fleet-management tool.

Finally, I set up a visualisation pipeline using Grafana. The client had been using Grafana in their previous stack, so it made sense to keep doing so - especially as it was both easy to deploy (thanks to its preference for being deployed as a Docker image) and easy to integrate into Postgres (thanks to the Postgres plugin being supplied as default). With that deployed, we could spelunk away, and a short while hacking away at some SQL got me to a nice dashboard showing data for a set of monitoring locations grouped over time, and navigable with Grafana’s time-series tools (which make it easy to scroll around time and to zoom in and out). As new monitoring locations were added and new historical data dropped in, more lines appeared in the graph. Satisfying to see!

All this meant I largely wrapped up Bradnor this week. There’s just some spit and polish and handover remaining.

It’s not the most sophisticated set of tools, but it is built out of well-known building blocks - application frameworks, databases, protocols - that are maintainable, testable, and knowable. We’ve simplified the platform infrastructure a little, but still have a good base to build upon, perhaps to add new features to or to extract to smaller services if necessary. Tests help verify that the code is doing what it should be, and by using known, mature tools, it becomes easier to recruit others to work on it should I be unavailable. I was pleased with the shape of this project - what looked like a quick software build project actually turned into an opportunity to lay some good foundations, re-examine infrastructure, and invest in more than just lines of code.

On other fronts, I signed paperwork for Easington, which should kick off in week 383, and take me right through the summer. This is going to be an exciting, challenging R&D project to pour myself into, and I’m looking forward to it.

Week 381

27 April 2020

I got my head down on an initial phase of Bradnor this week.

Bradnor is a small project to build out a new back-end for an existing IOT platform. I’m replacing the storage and administration end of affairs: the data gathering and transit layer isn’t changing. The week saw me investigating existing code, evaluating options for replacing parts of it, and deploying the code to newly provisioned infrastructure.

My goal for the end of the week was to get data piped from devices into a database. Once that data was safely piped and stored somewhere, we could then build upon it next week with various visualisation tools and management APIs. But first, we just had to put it somewhere.

I framed the work to the client as “research and development”. Not, perhaps, in the traditional sense of an R&D project - here, the task was known, and the problem-space well defined. But I was still going to have to research options for this greenfield project, sometimes by writing software or testing third-party services, and then present that work back so a path could be chosen. Researching what could be done, and only then developing the thing that needed doing.

That meant the first chunk of work was reading documentation, tinkering with small tests, and a lot of synthesis and writing to present back to the client.

Once we’d agreed on an approach, I started building out the skeleton of an application to get us to parity with the existing infrastructure. That first involved reproducing, in Ruby, some data-processing that was originally written in Javascript, and then getting live data flowing through my code, and into our datastore.

Finally, I provisioned some suitable hosting. It’d be possible to move everything to a large chain of small cloud-based services - queues, on-demand functions, datastores - but we chose, for now, to use a simple PAAS for the application code, and a managed database instance for our storage.

The data is perhaps the most valuable part of the product, so I felt it was worth not pretending we have time to be our own DBAs, and instead invest in someone else scaling it, managing it, and maintaining backups. A traditional application structure, but one that would do the job for now (especially with sensible backgrounding of tasks, thanks to Que).

There’s always a trade-off between expending effort on application code versus application infrastructure: do you spend time arranging an array of services, but ultimately writing less code, or do you invest in code and extract to services later?

I tend to prefer starting with monolithic code, and then extracting to services later. That seemed especially apt here, given the code was already a greenfield rewrite, and as such, I was still wrapping my head around the needs of the domain and the other platforms it was built out of. By keeping the infrastructure relatively simple - and knowable - I hoped the next most obvious changes to make to it would emerge in time.

So I focused on getting data from devices, through the pipeline, and into storage - and getting this deployed by the end of the week. With this solid base in place, I could spend week 382 focusing on fleet management, data-visualisation, and external API access - as well as contemplating a roadmap for future upgrades, and perhaps taking better advantage of cloud services.

By the end of the week, we had code running, data flowing into it before being processed and stored, a deployment pipeline set up, and a hefty amount of documentation of both the problem space being explored, and the work that I had produced. A good week’s work, and a good foundation for week 382.

Week 380

17 April 2020

Where were we?

I last wrote notes around Week 374. My Makefile tells me this is about Week 380. I think that’s correct. If not, well, time has slightly lost some of its meaning in recent weeks, and who’s counting? Week 380 it is.

In week 375, I finally finished my career review, and wrote that up. That was a prelude to more formally seeking out new projects and clients. Of course, what then happened was COVID-19 made it quite clear that we were not proceeding as normal for a bit. I left the studio and went to work from home, and started trying to investigate new work from there.

Which was not, at that point, particularly fast-moving, and, coupled with the strangeness of lockdown, everything slowed down a little. It was going to be a challenge to write ongoing weeknotes where I invented euphemisms for “not very busy over here”, so I went a little quiet.

When I write down what I’ve been up to since then, though, it’s a decent amount:

- I made voipcards. This was, initially, one of my one-line-gags turned into a small project. It was also a useful tool to prod at learning a bit more Svelte, to practice building PWAs, and to occupy my mind. It turned out quite popular on the internet, which led to me writing about the problems of solutionising, and why I’m still not sure it’s that good a project.

- I released the 2.0.x firmware for 16n, and wrote about that here. This had been on the shelf for a different project - Mayhill - for a while, and I realised it could easily be ported to 16n. The big feature this introduces is configuring your hardware from the browser, over USBMidi. Really pleased with how this turned out, and the community feedback has been great.

- I built up a personal electronics project over a few days, which turned out rather well after some fettling. Fun things I learned here included using naked board substrate as a transparent surface for LEDs to shine through, how to drive 256 LEDs off only 64 channels (four sixteen-LED drivers chained over I2C), and then tweaking the update rate of the LEDs so they don’t flicker on cameras as well as to the naked eye. (That involved moving the update rate to an integer division of 30 frames a second…)

- been spending some time volunteering on Makerversity’s PPE effort - primarily, on a tangential project to the 3D printing they’ve been launching, where I’ve been lending some support on digital logistics work as well as comms and lightweight project management.

- pitching a bit and having phone-calls and chats.

- quite a lot of business-related admin.

- setting up two new projects. Let’s call them Bradnor and Easington. I signed the contracts for Bradnor, a short project around infrastructure for an IOT project, last week, and we’re nearly there with Easington.

That felt like enough to finally write about. And now I’m back in the saddle, it should be harder to break the chain next week. As ever: onwards.

-

I recently released the 2.0.0 firmware for 16n, the open-source fader controller I maintain and support. This update, though substantial, is focused on one thing: improving the end-user experience around customisation. It allows users to customise the settings of their 16n using only a web browser. Not only that, but their settings will now persist between firmware updates.

I wanted to unpick the interaction going on here - why I built it and, in particular, how it works - because I find it highly interesting and more than a little strange: tight coupling between a computer browser and a hardware device.

To demonstrate, here’s a video where I explain the update for new users:

Background

16n is designed around a 32-bit microcontroller - Paul Stoffregen’s Teensy - which can be programmed via the popular Arduino IDE. Prior to version 2.0.0, all configuration took place inside a single file that the end-user could edit. To alter how their device behaved, they had to edit some settings inside config.h, and then recompile the firmware and “flash” it onto the device.

This is a complex demand to make of a user. And whilst the 16n was always envisaged as a DIY device, many people attracted to it who might not have been able to make their own have, entirely understandably, bought their own from other makers. As the project took off, “compile your own firmware” was a less attractive solution - not to mention one that was harder to support.

It had long seemed to me that the configuration of the device should be quite a straightforward task for the user; certainly not something that required recompiling the firmware to change. I’d been planning such a move for a successor device to 16n, and whilst that project was a bit stalled, the editor workflow was solid and fully working. I realised that I could backport the editor experience to the current device - and thus the foundation for 2.0.0 was laid.

MIDI in the browser

The browser communicates with 16n using MIDI, a relatively ancient serial protocol designed for interconnecting electronic musical instruments (on which more later). And it does this thanks to WebMIDI, a draft specification for a browser API for sending and receiving MIDI. Currently, it’s a bit patchily supported - but there’s good support inside Chrome, as well as Edge and Opera (so it’s not just a single-browser product). And it’s also viable inside Electron, making a cross-platform, standalone editor app possible.

Before I can explain what’s going on, it’s worth quickly reviewing what MIDI is and what it supports.

MIDI: a crash course

MIDI - Musical Instrument Digital Interface - describes several things:

- a protocol for a serial communication format

- an electronic spec for that serial communication

- a set of connectors (5-pin DIN) and how they’re wired to support that.

It is old. The first MIDI instruments were produced around 1981-1982, and their implementation still works today. It is somewhat simple, but really robust and reliable: it is used in thousands of studios, live shows and bedrooms around the world, to make electronic instruments talk to one another. The component with the most longevity is the protocol itself, which is now often transmitted over a USB connection (as opposed to the MIDI-specific DIN-connections of yore). “MIDI” has for many younger musicians just come to describe note-data (as opposed to audio-data) in electronic music programs, or what looks like a USB connection; five-pin DIN cables are a distant memory.

The serial protocol consists of a set of messages that get sent between MIDI devices. There are relatively few messages. They fall into a few categories, the most obvious of which are:

- timing messages, to indicate the tempo or pulse of a piece of music (a bit like a metronome), and whether instruments should be started, stopped, or reset to the beginning.

- note data: when a note is ‘on’ or ‘off’, and if it’s on, what velocity it’s been played at (the spec is designed around keyboard instruments)

- other non-note controls that are also relevant - whether a sustain pedal is pushed, or a pitch wheel bent, or if one of 127 “continuous controllers” - essentially, knobs or sliders - has been set to a particular value.

16n itself transmits “continuous controllers” - CCs - from each of its sliders, for instance.
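To make that concrete, here is a minimal sketch of what a single CC message looks like on the wire: a status byte carrying the channel, then the controller number, then the value. The function names and the 10-bit fader resolution are assumptions for illustration, not taken from the 16n firmware.

```python
def control_change(channel: int, controller: int, value: int) -> bytes:
    """Build a 3-byte MIDI Control Change message.

    channel is 1-16, as musicians count it; controller and value are 0-127.
    """
    if not 1 <= channel <= 16:
        raise ValueError("channel must be 1-16")
    if not 0 <= controller <= 127 or not 0 <= value <= 127:
        raise ValueError("controller and value must be 0-127")
    status = 0xB0 | (channel - 1)  # 0xB0-0xBF are the Control Change status bytes
    return bytes([status, controller, value])


def fader_to_cc_value(raw: int, adc_bits: int = 10) -> int:
    """Scale a raw fader reading down to MIDI's 7-bit range.

    adc_bits is an assumed converter resolution, not a 16n specification.
    """
    return raw >> (adc_bits - 7)


# A fader at full travel, sent as controller 32 on channel 1.
message = control_change(1, 32, fader_to_cc_value(1023))
```

Every data byte in MIDI keeps its top bit clear, which is why values top out at 127.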

There’s also a separate category of message called System Exclusive, which describes messages that an instrument manufacturer has got their own implementation for at the device end. One of the most common uses for ‘SysEx’ data was transmitting and receiving the “patch data” of a synthesizer - all the settings to define a specific sound. SysEx could be used to backup sound programs, or transmit them to a device, and this meant musicians could keep many more sounds to hand than their instrument could store. SysEx was also used by early samplers as a slow way of transmitting sample data - you could send an audio file from a computer, slowly, down a MIDI cable. And it could be also used to enable computer-based “editors”, whereby a patch could be edited on a large screen, and then transmitted to the device as it was edited.

Each SysEx message begins with a few bytes for a manufacturer to identify themselves (so as not to send it to any other devices on the MIDI chain), a byte to define a message number, and then a stream of data. What that data is is up to the manufacturer - and usually described somewhere in the back pages of the manual.
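A minimal sketch of that framing, for illustration only. On the wire, a SysEx message is wrapped in a start byte (0xF0) and an end byte (0xF7), and everything in between must be 7-bit. The single-byte manufacturer ID used here (0x7D, which the MIDI spec sets aside for non-commercial use) and the function names are assumptions, not a description of 16n's actual message format; real manufacturer IDs can also be three bytes long.

```python
SYSEX_START = 0xF0
SYSEX_END = 0xF7
MANUFACTURER_ID = 0x7D  # assumption: the non-commercial ID, used here as a placeholder


def build_sysex(message_id: int, data: bytes = b"") -> bytes:
    """Wrap a message number and payload in SysEx framing."""
    body = bytes([MANUFACTURER_ID, message_id]) + data
    if any(b > 0x7F for b in body):
        raise ValueError("SysEx payload bytes must be 7-bit (0-127)")
    return bytes([SYSEX_START]) + body + bytes([SYSEX_END])


def parse_sysex(raw: bytes):
    """Return (message_id, data), or None if the message is not addressed to us."""
    if len(raw) < 4 or raw[0] != SYSEX_START or raw[-1] != SYSEX_END:
        return None
    if raw[1] != MANUFACTURER_ID:
        return None  # another manufacturer's message: ignore it
    return raw[2], raw[3:-1]


framed = build_sysex(0x01, bytes([1, 2, 3]))
```

The manufacturer check is what lets several devices share one MIDI chain: each simply ignores SysEx that does not carry its own ID.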

Like the DX7 editors of the past, the 16n editor uses MIDI SysEx to send data to and from the hardware.

How the 16n editor uses SysEx

With all the background laid out, it’s perhaps easiest just to describe the flow of data in the 16n editor’s code.

- When a user opens the editor in a web browser, the browser waits for a MIDI interface called 16n to connect. It hears about this via a callback from the WebMIDI API.

- When it finds one, it starts polling that connection with a message meaning *give me your config!*

- If 16n sees a message aimed at a 16n requesting a config, it takes its current configuration, and emits it as a stream of hex inside a SysEx message: *here is my config*.

- The editor app can then stop polling, and instantiate a Configuration object from that data, which in turn will spin up the reactive Svelte UI.

- Once a user has made some edits in the browser, they choose to transmit the config to the device: this again transmits over SysEx, saying to the device: *here is a new config for you*.

- The 16n receives the config, stores it in its EEPROM, and then sets itself to use that config.

- If a 16n interface disconnects, the WebMIDI API sends another callback, and the configuration interface dismantles itself.

Each message in italics is a different message ID, but that’s the limit of the SysEx vocabulary for 16n: transmitting current state, receiving a new one, and being prompted to send current state.

With all that in place, the changes to the firmware are relatively few. Firstly, it now checks if the EEPROM looks blank, at first boot, and if it is, 16n will itself store a “default” configuration into EEPROM before reading it. Then, there’s just some extra code to listen for SysEx data, process it and store it on arrival, and to transmit it when asked for.
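The device side of that conversation can be sketched as a small simulation. This is not the 16n firmware (which is Arduino code for the Teensy): the message ID values, the 16-byte config layout, the default contents and the `FakeDevice` class are all hypothetical, chosen only to show the three-message vocabulary and the blank-EEPROM check.

```python
REQUEST_CONFIG = 0x01  # "give me your config!"         (hypothetical ID)
CONFIG_DUMP = 0x02     # "here is my config"            (hypothetical ID)
NEW_CONFIG = 0x03      # "here is a new config for you" (hypothetical ID)

BLANK = 0xFF                      # erased EEPROM cells typically read back as 0xFF
DEFAULT_CONFIG = bytes([1] * 16)  # placeholder default, not 16n's real layout


class FakeDevice:
    def __init__(self, eeprom: bytearray):
        self.eeprom = eeprom
        # First boot: a blank EEPROM gets the default config written before it is read.
        if all(cell == BLANK for cell in self.eeprom):
            self.eeprom[:] = DEFAULT_CONFIG
        self.config = bytes(self.eeprom)

    def handle(self, message_id: int, data: bytes = b""):
        """Handle one incoming message; return a (message_id, data) reply or None."""
        if message_id == REQUEST_CONFIG:
            return CONFIG_DUMP, self.config
        if message_id == NEW_CONFIG:
            self.eeprom[:] = data      # persist first...
            self.config = bytes(data)  # ...then start using it
        return None


device = FakeDevice(bytearray([BLANK] * 16))
first_reply = device.handle(REQUEST_CONFIG)

edited = bytes(range(16))
device.handle(NEW_CONFIG, edited)
rebooted = FakeDevice(device.eeprom)  # same EEPROM, fresh "firmware"
```

Because the config lives in EEPROM rather than in the compiled firmware, the `rebooted` device comes up with the edited settings, which is the persistence the update is about.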

What this means for users

Initially, this is a “breaking change”: at first install, a 16n will go back to a ‘default’ configuration. Except it’s then very quick to re-edit the config in a browser to what it should be, and transmit it. And from that point on, any configuration will persist between firmware upgrades. Also, users can store JSON backups of their configuration(s) on their computer, making it easy to swap between configs, or as a safeguard against user error.

The new firmware also makes it much easier to distribute the firmware as a binary, which is easier to install: run the loader program, drag the hex file on, and that’s that. No compilation. The source code is still available if they want it, but there’s no need to install the Arduino IDE to modify a 16n’s settings.

As well as the settings for what MIDI channel and CC each fader transmits, the editor lets users set configuration flags such as whether the LED should blink on data transmission, or how I2C should be configured. We’ve still got some bytes free to play with there, so future configuration options that should be user-settable can also be extracted like this.

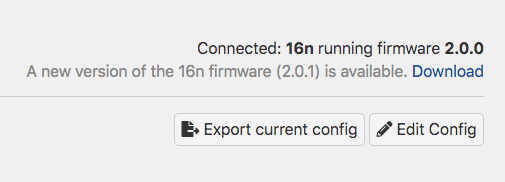

The 16n editor showing an available firmware update

Finally, because I store the firmware version inside the firmware itself, the device can communicate this to the editor, and thus the editor can alert the user when there’s a new firmware to download, which should help everybody stay up-to-date: particularly important with a device that has such diverse users and manufacturers.
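The check itself can be very small. Here is one possible sketch, assuming the device reports its version as a dotted string such as 2.0.0; the function names are illustrative, and the real editor may compare versions differently.

```python
def parse_version(text: str) -> tuple:
    """Turn '2.0.1' into (2, 0, 1) so versions compare numerically, not as text."""
    return tuple(int(part) for part in text.split("."))


def update_available(device_version: str, latest_version: str) -> bool:
    """True when the latest known firmware is newer than what the device reports."""
    return parse_version(latest_version) > parse_version(device_version)


needs_update = update_available("2.0.0", "2.0.1")
```

Comparing tuples of integers avoids the trap of string comparison, where "2.0.10" would sort before "2.0.9".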

None of this is a particularly new pattern. Novation, for instance, are using this to transfer patch settings, samples, and even firmware updates to their recent synthesizers via their Components tool. It’s a very user-friendly way of approaching this task, though: it’s reliable, uses a tool that’s easy to hand, and because the browser can read configurations from the physical device, you can adjust your settings on any computer to hand, not just your own.

I also think that by making configuration an easier task, more people will be willing to play with or explore configuration options for their device.

The point of this post isn’t just to talk about the code and technology that makes this interaction possible, though; it’s also to look at what it feels like to use, the benefits for users, and interactions that might be possible. It’s an unusual interaction - perhaps jarring, or surprising - to configure an object by firing up a web browser and “speaking” directly to it. No wifi to set up, no hub application, no shared password. A cable between two objects, and then a tool - the browser - that usually talks to the wireless world, not the wired. WebUSB also enables similar weird, tangible interactions, in a similar way to the one I’ve performed here, but with a more flexible API.

I think this is an unusual, interesting and empowering interaction, and certainly something I’ll consider for any future connected devices: making configuration as simple and welcoming as possible, using tools a user already understands.

VOIPcards, or, On Solutionising

25 March 2020

A bit over a week ago, I made a small tool - or toy, depending on your perspective, or the time of day - called VOIPcards. I demonstrated it on my public Twitter account:

I made some flashcards for you to hold up on videochat:https://t.co/tSPIEWqGWu

— Tom Armitage (@tom_armitage) March 17, 2020

You can install to your phone's homescreen, and it should work offline.

Ideal for when you want to comment, but stay quiet - or perhaps tell someone else to pipe down for a bit. pic.twitter.com/6525w9wbNY

It was made after my friend Alice showed pictures of her backwards post-it notes she’d hold up to her videoconference. I thought about making a tool for having on-demand backwards flashcards for video calls. A small toy to make, and thus, some time to practice some modern development practices, make a PWA and put myself to making something during Interesting Times.

Since then, a lot of people have liked it, or shared it, or been generally enthusiastic. Several have submitted patches and, most notably, translations, to it. And I’ve added some new features: white on black text, choice of skin-tone for emojis, and settings that persist between sessions.

I’m not sure it’s any good, though.

I don’t think it’s bad, though, and if it’s making a difference to your remote practice, that’s great. But I don’t think it’s the right tool for what it sets out to do.

And here’s the thing: it wasn’t meant to be. In some ways, the point of VOIPcards is as much a provocation as it is a thing for you to use. It says: here are things people sometimes need to say. Here are things people sometimes need to do, to support colleagues on a call. Here are things people need to do because it’s fun.

This is why I think of it as between a tool and a toy: it’s fun to use for a bit, it’s a provocation as to the kind of things we need alongside streaming video, and if you put it down when you’re bored (and your behaviour may have changed) that is fine.

The single most important card in the deck is a tie between “You have been talking a long time” and “Someone else would like to speak”. These are useful and important statements to make in face-to-face meetings, but they’re doubly important when there’s twelve of you on a Zoom call. Sometimes, the person with better video quality noticing that someone wants to speak, and amplifying that demand, is good.

If what you come away from VOIPcards with is not a tool to use, but a better way of thinking about your communication processes, that’s probably more important than using a fun app.

But: equally, if you do find it useful, this isn’t a slight. That’s great! I’m glad it works for you.

I think the reason it’s popular is that people respond to the idea of it. The idea of the product has immediate appeal - perhaps more so than the reality of it. And that appeal is so immediate, so instant, that it makes me distrust it. Good ideas don’t just land instantly: they stand up to scrutiny. I’m really not sure VOIPcards does. At the same time, there’s value in the idea because of what it makes people think, how it makes them subsequently behave. And I think some of that value really does come down to it being real. A product you can try, fiddle with, demonstrate, lands stronger than a back-of-a-napkin idea - even if it turns out to be not much more than the idea.

Another obvious smell for me is that I don’t use the product. I enjoyed making it, and I was definitely thinking about other people’s needs - however imaginary - when making it. But it’s not for me, which makes it hard to make sensible decisions about it.

(What do I do instead? Largely, hand gestures and big facial expressions: putting a hand up to speak, holding a palm up to apologise for speaking over someone, lots of thumbs-ups. It puts me in mind of the way Daniel Abraham and Ty Franck describe the way the “Belters” - first-generation space dwellers - communicate in their Expanse novels. Belters talk with lots of broad hand-and-body gestures, rather than facial ones, because the culture developed communication techniques that worked whilst wearing a spacesuit. No-one can see an eyeroll through a visor, but everyone can see theatrical shrugs, sweeping hand gestures. I liked that. It feels like we’re all Belters on voice chat. Subtlety goes out the window and instead, a big hand giving a thumbs-up into a camera is a nice way to indicate assent without cutting into somebody’s audio.)

When I’m being most negative about VOIPcards, it is because they feel like solutionising - inventing a solution for a hypothetical problem. In this case, though, the problem is definitely something everybody has felt at some point. But this solution is perhaps too immediate, came too much from the “implementation” end of the brain to be the robust, appropriate answer to said problem.

There’s a lot of solutionising around right now, and I’m largely wary of it all. The right skills at the moment are not always leaping to solutions, working out what you can offer others, guessing at what might happen, what you might expect, and how you can respond to that. I think that the right skills to have - and the right tone to strike right now - are to be responsive, and resilient. Dealing with the unexpected, the unknown unknowns. Not solving the problems you can easily imagine, but getting ready to solve the ones you can’t.

Still: there is also value in making things to make other people think, rather than do. The win isn’t necessarily the product, but the behaviour it inspires. If what people take away from the cards is some time spent thinking more carefully about their communications, rather than yet another tool to use: that’s a win for me.

(You can try VOIPcards here. It works best on a mobile phone, and you can install it to your homescreen as an app.)

What I do

9 March 2020

I am a technologist and designer.

I make things with technology, and I understand and think about technology by making things with it.

A lot of my work starts with uncertainty: an unknown field, or a new challenge, and the question: what is possible? What is desirable? From there, the work to build a product leads towards certainty: functioning code, a product to be used. I have worked on a lot of shipped, production code, but my best work is not only manufacture and delivery; it also encompasses research, exploration, technical discovery, and explanation.

Research could involve investigating prior art, or competitors, or using technical documentation to understand what the edges of tools or APIs are - beginning to map out the possible.

Exploration involves sketching and prototyping in low- and high-fidelity methods to narrow down the possible. That might also include specific technical discovery - understanding what is really possible by making things, compared to just reading the documentation; a form of thinking through making.

Delivery involves producing production code on both client and server-side (to use web-like terminology), as well as co-ordinating or leading a development team to do so, through estimation and organisation.

And finally, explanation is synthesis and sense-making: not only doing the work, but explaining the work. That could be ongoing documentation or reporting, contextualising the work in a wider landscape, or demonstrating and documenting the project when complete.

How does this work happen?

I work best - and frequently - within small teams of practice. That doesn’t necessarily mean small organisations, though - I’ve worked for quite large organisations too. What’s important is that the functional unit is small, self-contained, and multidisciplinary. I have built or led small teams of practice to achieve an outcome.

I’ve delivered work with a variety of processes. My favourite processes often resemble design practice more than, say, formal engineering: they are lightly-held, built as much around trust as around formal rules. Such processes are constraints, and they serve to help a team work together, but they are also flexible and adaptable.

A colleague recently described some of the team’s work on a recent project as “a lot of high-quality decisions made quickly”, and I take pride in that description; I hope to bring that sensibility to all my work.

You can find out more about past projects. If the above sounds of value to you, do get in touch with me; I am available for hire.

Week 374

2 March 2020

I’ve been freelance for over seven years. In that time, my work has slowly changed a little in its nature, along with my professional interests, and the shape of the wider market. In a quiet week, following just-about wrapping a few projects, it felt time for a more formal review of my work to date.

I loosely followed Matt’s notes on his own career review as a starting point: looking at all my past work, and analysing it. What did the successes have in common? What would I like to do more of? Are there trends? As usual, it’s easy as a lone practitioner to assume that it’s all just gig-after-gig, but that’s not true.

Some focused time with pen and paper let me look clearly at my work. It turns out that over all the wide range of projects, there really are trends, and there is definitely expertise built over time that is worth sharing. So it was definitely useful to take that time to do this properly.

I then performed an extra step after Matt’s original list of questions: I looked at the how of each project. How did those circumstances occur? How did the work happen? Who is involved? Who is committed? That helped me gain a better focus on what circumstances are most successful for me, and how I enable the work to happen.

Having done all that, there was a small piece of writing work to synthesize and explain everything. At which point, I promptly got a cold - not COVID-19, I should add - which knocked out the end of my week. So finishing up the review work would have to begin in week 375. Still, the groundwork was laid this week, and it was a good way to use some time off project work.

Week 373

23 February 2020

This week I:

- spent a half day on Monday wrapping up a polish pass on Willsneck with its designer. I then gave a short talk about Willsneck to the direct client on Thursday morning. Primarily, I talked about the deployment strategy we were using, and why it was a good fit for the project and client. It was good to validate the thinking we’d been doing as a team, and also to communicate that what looked like off-the-cuff decisions did still have a bunch of thought behind them. There were good questions and some nice feedback, so that was satisfying.

- spent Tuesday workshopping with Tim. Early investigations into something, digging into what really interested him (and me) about a particular idea, and then some due diligence and research into prior art.

- continued from my end-to-end breakthrough on Mayhill with further iteration. The firmware feels pretty complete; there’s one error at the level of hardware design that will need resolving before I can confirm that, but otherwise, I’m pleased. I also continued to iterate on the software end of things, adding features to the browser-based editor. I looked into the state of the hardware, but then got a bit downcast as I realised the effort required to take it beyond the workbench. With its own fast microcontroller in, it likely falls under FCC regulation of “unintentional radiators”, which puts another hurdle in front of selling or shipping it. Something to think about, but meant I largely parked thinking about it for a bit.

- finally, snatched a victory from the work on Mayhill by realising there’s nothing to stop it working with 16n, so started working on a version 2.0.0 firmware for that, which should be ready soon. Early feedback from the community has been highly positive, so it’ll be good to ship that to as many people as possible.

Week 372

16 February 2020

Lots of work on Willsneck this week, to bring it in to land. We wrapped everything up by Thursday PM, and I’ve got a half-day on this left over to finalise the production deployment. Happy with how it’s turned out, I think.

Hallin got merged into master by the client, so I’m looking forward to hearing how they get on with it in production.

I had a good chat with Christian and Miguel from Schema who were in town, having been introduced by a friend. Always nice to meet new people, and interesting to chat to them about designing for data, working with clients on that problem, and the tools used to do it. Thanks to Steve for putting us in touch!

Finally, I spent some of Friday working on Mayhill, and had a really big breakthrough. That breakthrough is what we called end-to-end at Berg: a complete workflow up and running, even if every component is a bit ‘version one’. I began by working on overhauling some of the browser-based UI: making it look a lot tidier, and refactoring a lot of the large App.svelte file into smaller components, including a big wrapper component for handling the MIDI context. Then, I wrote the JavaScript to translate edits in the browser to Sysex data. Finally, back in firmware-land, I wrote the firmware code to intercept that stream of data and write it to Flash RAM. There were other small firmware kinks to iron out, too.

By the end of the day, I had a system where you could open an editor in your browser, connect a physical object (that I’d both designed the electronics for and coded the firmware on) and see it appear automatically, and reconfigure it in the browser app. The browser app could transmit edits back to the hardware, which would persist those changes. Hugely satisfying: what’s largely left on this is polish, now, and working on the “1.0” hardware (rather than this ‘development board’) that I built for myself.
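To give a flavour of the browser-to-Sysex step, here is a minimal sketch of wrapping a set of configuration bytes in a System Exclusive message. The command byte and the payload layout are hypothetical, and the use of 0x7D (the manufacturer ID set aside for non-commercial use) is an assumption for the example rather than a description of Mayhill's actual protocol.

```typescript
const SYSEX_START = 0xf0; // status byte that opens every Sysex message
const SYSEX_END = 0xf7; // status byte that closes it
const MANUFACTURER_ID = 0x7d; // non-commercial/educational ID; assumed here
const CMD_WRITE_CONFIG = 0x01; // hypothetical command: "store this configuration"

// Wrap configuration values in a Sysex frame.
// Bytes between start and end must be 7-bit (0-127), because the top bit
// is reserved for MIDI status bytes, so out-of-range values are rejected.
function buildConfigMessage(config: number[]): Uint8Array {
  for (const value of config) {
    if (!Number.isInteger(value) || value < 0 || value > 0x7f) {
      throw new RangeError(`Sysex data bytes must be 0-127, got ${value}`);
    }
  }
  return Uint8Array.from([
    SYSEX_START,
    MANUFACTURER_ID,
    CMD_WRITE_CONFIG,
    ...config,
    SYSEX_END,
  ]);
}
```

In a browser, a message like this could be passed to the `send()` method of a Web MIDI output, provided access was requested with `navigator.requestMIDIAccess({ sysex: true })`. The firmware side would then watch for the same header bytes before writing the payload to storage.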

Still not quite sure how I feel about Svelte. I like the compiled-up-front approach, especially for something that couldn’t really be done many other ways; I’m not quite sure about its idiosyncratic syntax, and whilst I quite like its two-way responsiveness, that leads to a propensity to get yourself in a tangle. Still, it’s enabled all the things I’ve needed to do so far in a reasonably straightforward manner, and I’m a big fan of its Vue-style single-file components, so it’ll do for now. One thing in its favour is that it was easy enough to pick up after months away: most of it, most of the time, is just browser-based technologies.

A good week, then: mainly code for clients, some more esoteric code for myself with a serious breakthrough, and some good conversations to round it out.

Week 371

11 February 2020

I’m writing these notes awfully late, so let’s rattle through them:

- shipped all the final changes to the client on Hallin. They seem pleased, so hoping to wrap this next week.

- kicked off a second phase of work on Willsneck. This was primarily focused on front-end design changes: new markup and CSS, and content updates. There was still some more unusual code to implement, though. Some of the content on the site is extracted from JSON files using Hugo’s ability to use JSON data in templates. These JSON files are derived from online sources, and needed to be regularly updated. How to do that with a static site? It turns out it’s now quite straightforward. We’re already deploying the site using Github Actions on every push to master. Actions also supports cronlike functionality, with scheduled actions. I wrote some scripts to download and process the relevant JSON files, and then wrote some Actions workflows that, once a day, would run the script, commit the results back to master, and deploy the site. Really happy with this: I’m sure you can also do similar with CI, but Github Actions are really lightweight and straightforward out of the box. Might use this pattern again in future.

- met up with Gabi from Hyper Island, who have moved their London office into Makerversity - just around the corner from me. We debriefed on the module I’d worked on, and caught up more generally.

- did a bit more writing on Ninebarrow. Slowly moving forward; still painful.

- finally, booked all my accommodation for Loop this year. Really looking forward to this again: a neat combination of being personally interesting and enjoyable, and a good source of inspiration and thinking-time for my work-brain. Can’t wait.
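One detail of the daily data refresh on Willsneck, described in the list above, is worth sketching: a scheduled job that commits to master every day is tidier if it only produces a commit when the data has really changed. The snippet below is an assumption about how the processing step could do that, by always writing JSON with sorted keys; the function names are hypothetical and it is not the project's actual script.

```typescript
type Json = null | boolean | number | string | Json[] | { [key: string]: Json };

// Recursively sort object keys so the same data always serialises identically,
// whatever order the upstream source happened to return it in.
function sortKeys(value: Json): Json {
  if (Array.isArray(value)) return value.map(sortKeys);
  if (value !== null && typeof value === "object") {
    const sorted: { [key: string]: Json } = {};
    for (const key of Object.keys(value).sort()) {
      sorted[key] = sortKeys(value[key]);
    }
    return sorted;
  }
  return value;
}

// Render the text that would be written to the site's data file.
function renderDataFile(data: Json): string {
  return JSON.stringify(sortKeys(data), null, 2) + "\n";
}

// Compare against the file already in the repository: no difference, no commit.
function needsCommit(existingFile: string, downloaded: Json): boolean {
  return renderDataFile(downloaded) !== existingFile;
}
```

With output that is stable in this way, a day with no upstream changes leaves the working tree clean, so the workflow can skip the commit and the redeploy.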

Week 370

2 February 2020

Back to code, mainly, in week 370.

I fixed up all the major issues the client had requested fixing on Hallin - two fairly chunky bugs I needed to take apart a little to fix, and two minor tweaks. With those resolved, the client’s tech lead gave my branch a thorough code review. They were very happy with the way I’d dived into their codebase, and most of the feedback came down to notes on minor formatting issues, and on code that was perhaps not so legible at first look. A few quick commits took care of some inconsistent formatting. More important was a second pass on the code that wasn’t so clear. That meant simplifying conditionals, reducing fragility of a few parts, and tidying things that hadn’t seemed overcomplex when I was writing them. I also extracted some highly specific code into something more general - but not too general - that would set a good precedent for any future refactoring of related tasks. As ever, the integration/feature tests acted as an excellent safety net, and I wrapped up the code review in an afternoon.

There was some brief discussion around a pacey second phase of Willsneck that will kick off in week 371. This time around, we have a firmer deadline, but also are much firmer in what needs delivering in that timeframe, so I sat down with the designer to go over what changes needed to be done, and wrote up a thorough document to cover my estimates and highlight anything I thought was a risk. I shall dive into that code on Monday.

I spent some time on Wednesday continuing to work on the writing project that is Ninebarrow. I am making progress - not hugely quickly, but progress nonetheless. It is already proving more challenging than I expected, partly because I cannot quite write as fast as my brain can go, and so I begin to start doubting or questioning what I’m doing whilst in the process of doing it. Shutting down that critical voice long enough to work is going to be something I’ll have to practice!

I launched the Futurelearn courses that were previously known as Longridge, and wrote them up here. I’m pleased that they’re now out in the world. Next week, I’ll check in on how the learners are getting on in their discussion and comments threads.

And finally, I paid my tax bill. Thank god that’s done.

New Work: An Introduction to Coding and Design

29 January 2020

In the second half of 2019, I worked on a project I called Longridge. This project was to write three online courses in a series called An Introduction to Coding And Design, for a programme of courses from the Institute of Coding launching in 2020. I worked with both Futurelearn - the MOOC they’re hosted upon - and the University of Leeds to write and deliver the courses.

Those courses are now live at Futurelearn, as of the 27th January 2020!

The courses are designed as two-week introductions to topics around programming and design for beginners interested in getting into technology, perhaps as a career.

I’ve written up the project in much more detail here; you can read my summary of the work here. I cover some of the reasoning behind the syllabus, the choice of topics, and the delivery. And, most importantly, I thank the collaborators who worked with me throughout the process, and collaborated on the courses.

Week 369

27 January 2020

Another largely admin-focused week, for now.

I completed everything to do with taxation at the end of the tax year. My bookkeeping was largely up-to-date, so that just involved going over it all, a quick check-in with my accountant, a few final reports, and then getting everything sent to HMRC.

I did some final tweaks to material for the Longridge courses, which launch on Futurelearn on Monday 27th. They’ll get their own project page and announcement on this site in Week 370.

I finally wrote my yearnotes for 2019. Useful to reflect on everything I got up to, and perhaps how I might want the shape of work to change this year.

I got some feedback from the client on Hallin, so triaged those issues ready to return to writing code next week.

I started writing on Ninebarrow. Not for long enough, but enough to break the ground, and leave a few dangling threads that I’d like to return to - usually the easiest way to get me to want to Keep Writing.

I ripped out Adobe Fonts from this site. I’d been using Typekit since way back when. Adobe absorbed it and, for a while, provided it cheaply. However: when my free year of “Adobe XD” (which includes their fonts) expires, the pricing will go up to £120 a year, which is just too much for the odd typeface around the internet. I was reminded of this by a friend getting a ‘surprise’ credit card charge. My renewal turned out to be due in April, so I used the time to remove this dependency.

So I ripped out all reference to Typekit from my live sites, and, where necessary, found alternatives on Google Fonts (where the open-source faces are decent enough for my needs). On this site, that meant moving to a more traditional sans as a face for headlines and display, and adjusting alignment a little across the site. I am happy with the slight refresh. But: if you noticed the design change, this is why.

End-of-year admin out of the way, next week should see a return to more head-down productivity, and perhaps more writing.

Finally, worth noting a little about how I use weeknotes here. Weeknotes on this site are, for me, a diary of work and things I’m doing in a professional capacity. I have a personal blog as well, and I continue to write and link there; subscribing to its RSS feed will keep you up-to-date. I like to (try to) separate work from non-work, although it’s not always 100% straightforward. When I write here, though, it’s equally to log what I was up to for myself, and share the way I work - and how I think about work - publicly. So if they seem dry, that’s a little deliberate - but they’re certainly not the sum total of myself.

Yearnotes, 2019

24 January 2020

Another year - the seventh full one of working for myself. Just enough distance from the 31st of December makes for a good time to review what I got up to in 2019, and match up some codenames to projects.

Clients

Client work is, as ever, the major focus of my work.

I wrapped up my engagement with CaptionHub at the beginning of the year. CaptionHub had been a highly successful project for me. I took the technology aspect of the project from a prototype to a fully-fledged product. The small team grew; the client built a technology capacity; I learned a great deal in the process. I finally had a chance to write this work up at length, and I’m glad I’ve done so.

I spent much of 2019 working at Bulb as Lead Technologist inside their Labs department. I’d summarise Labs’ role as “product invention and business development”. That is to say: we did R&D around future products and the business units they might spawn. We then worked out what would be necessary to bring those to fruition, from both a business and customer perspective. My role was to understand, explain, and prototype technology, leading technology inside Labs, working with the designers in the unit, as well as other developers, and colleagues throughout the business.

I learned a great deal about the nature of the power and energy industry, a little about the specifics of high-voltage electricity, and a fair chunk about electric cars along the way! I greatly enjoyed everyone I worked with inside Labs - Alex, Claire, James, Jenna, Lachie, and Daphne - as well as colleagues throughout the organisation, many of whom went above and beyond to assist us with our projects and research. We also got to partner with some great people outside the business, and in particular, I enjoyed working on prototypes with Pam and Ling from Intellicharge; Bulb’s trial with Intellicharge kicked off in November, a little while after I left.

My engagement at Bulb wrapped up around Week 322 of last year. My time there was codenamed Highrigg.

Since then, client work has included work with After The Flood, IF, and the New Left Review.

Teaching

There’s been more teaching this year. With Hyper Island, I delivered the Digital Technologies module on their Digital Management MA for two cohorts: at the beginning of the year, in January, for the part-time cohort (who’d meet in London every month). This year, I also performed this role for the full-time students, in Manchester, in March.

I also worked on three courses for the Institute of Coding that will launch on Futurelearn in January 2020. Targeting beginners, they are two-week introductions to programming, web development in HTML/CSS, and UX design. I’ll write about them more very shortly. These courses were codenamed Longridge.

Products

In the background, I continued to explore a few avenues around physical products.

I continued to ship kits under the Foxfield label, although I’ve not introduced any more projects. Being honest, I find product support much harder than product development, and adding new products just adds new things to support. So I’m thinking hard about what to do there: how to simplify.

The big product I worked on was 16n. This had been rolling in the background for a while in 2018; in January 2019, I decided to stop dawdling and release it to the world. 16n is a hardware-and-firmware product, sure - but it’s also an open-source product. I don’t actually make any. (Well, that’s not quite true: I have hand-built a few. But in general, I don’t make them). Instead, other people - hobbyists, small businesses - around the world have built their own - and, because of licensing, sold them to others.

I’m happy with that trade-off. The thing is in the world; other people are enjoying it and making music with it. Every time I see a picture of one in somebody’s setup, I’m happy. Also, Richie Hawtin has one.

I continued to support and provide firmware patches for 16n through the year. And now I’m thinking about what successors to it might be in 2020: I have a few ideas about how to improve the core experience of the product. How I get those to market remains to be seen.

Still: without shipping very much beyond data, I shipped a thing.

There were also perhaps a few too many prototypes behind the scenes, which fitted around work during downtime. Some of these were no-goes; a few stalled at around 90%, as I baulked at what it might take to push them over the top. Next year, a lesson has to be only working on things with a more defined goal, and a commitment to make them real. If it looks like it’s fun, but might not go anywhere… probably something to stop sooner. A lesson learned.

And, of course, physical/electronic products are a small part of my practice. It’s often easy to chat about them in weeknotes when other, larger work is harder to talk about - and that doesn’t always present an accurate picture of my work’s balance. Again, something to think about next year.

And that was 2019. 2020 begins by wrapping up a few pieces of client work. And then it’s time to look for new projects!

As ever, do get in touch if you’re looking for someone to work with on the shape of projects - technology (particularly on the web), invention, R&D, prototyping and strategy, playful interaction, the boundary between digital and physical - that you read about here.

Onwards!

Week 368

20 January 2020

A quiet week, as I was working until Sunday night last week teaching.

It’s an admin-oriented time of the year. I finished up all my book-keeping for the previous year to ship to my accountant. This was largely up-to-date thanks to regular FreeAgenting, but there were still a few bits and bobs to catch up on. I also sent a few invoices, and paid a few bills related to the studio fit-out in the autumn.

I spent a day or so presenting a demo of the state of Hallin to the direct client. We worked out what would be necessary to do before we shipped a beta to staging. I finished up that code, wrote some appropriate end-user documentation, and shipped that off to them for testing.

Over on my personal site, I wrote up the Spark AR filter I made one afternoon last week. It’s not so much a technical write-up as it is an exploration of environments for making software toys:

It’s a while since I’ve worked on a platform that’s wanted to be fun. I’ve made my own fun with software, making tools to make art or sounds, for instance. But in 2020, so much of the software I use wants me to not have fun.

[…]

Twitter is now very hard to make jokes on. The word ‘bot’ has come to stand for not ‘fun software toy’ but ‘bad actor from a foreign state’. The API is increasingly more restricted as a result. I’m required to regularly log in to prove an account is real. My accounts aren’t real: they’re toys, automatons, playing on the internet.

I get why these restrictions are in place. I don’t like bad actors spreading misinformation, lies, and propaganda. But I’m still allowed to be sad that the cost of that is making toys and art on the platform.

You can read more (and see the filter in action) in Fun With Software, over at infovore.org.

Finally, a longer-term personal project that I’m calling Ninebarrow began to take shape - or, at least, take a little more solid form. No huge developments here just yet; there’s little more than thinking on it, so far, but I’m writing it down for the sake of tracking the progress of that project, if it happens, over time. Here is where it began.

Week 367

13 January 2020

And we’re back in 2020, with the first full week of the year being Week 367.

I did a small amount of work on Hallin, getting things shipshape for the client demo that was moved to the beginning of Week 368. Most things were in place, though I spent a few hours making one slight improvement to better reflect the existing domain model in the work I was doing.

Some of my time was taken up with typical beginning-of-year admin.

I spent a pleasant afternoon building a toy for myself in SparkAR. Spark turns out to be a highly pleasant development environment, and simple results can be worked up surprisingly quickly. Node-based programming environments aren’t always my favourite, but they make a lot of sense of things involving realtime video or pipelines, and I soon settled into Spark’s mental model.

By the end of the week, I’d sent the toy off for review. Of course, I immediately found a serious number of UX improvements to make the moment I’d hit submit. So I imagine a 1.1 release will be submitted fairly soon after, and that’ll be the one I release for people to play with.

Really, though, the big work this week was preparing for the second weekend of teaching on the MA course with Hyper Island, and then delivering those classes on Friday, Saturday and Sunday. A few talks, including one that’s a crash course in cryptography, that goes on to use that knowledge to better evaluate blockchain (and cryptocurrency, with a brief digression into What Money Is). This is always a hard one: really, it’s about critically evaluating technology by refusing to be told that something is too complicated to describe clearly. Lots of good questions and analysis from the students, and it led nicely into a wonderful session (as ever) from Wesley Goatley on critical thinking around AI and related technologies. That seemed to go really well too.

Mainly, though, the weekend focused on the students finishing up their pitches to deliver to the client on Saturday night, and they all delivered excellent, interesting, and varied outcomes. As ever, I greatly enjoyed myself: I don’t just get the chance to think about the ideas and content I’m delivering, but also I get to learn from my students: seeing how they engage, watching what examples they bring to the table, as well as how they merge their learning with their own professional practice and workplaces. They’re always a diverse, international crew, and so my perspectives are always widened. And I’m always learning about how to convey and express ideas: what sorts of coaching and information people best respond to, how to find ways to help them come to solutions for themselves. Hugely satisfying and rewarding, as ever.

The card that says ‘yearnotes’ is still in my TODO column. I hope I can get those out the door soon.

Week 365-366

31 December 2019

No weeknotes over the Christmas break.