When in Rome RMS

A tool for managing game audio.

When in Rome is a board game by Sensible Object. It’s a travel quiz for two teams that requires an Amazon Echo to play. As teams travel the world, they answer questions to win points and souvenirs. “Alexa” keeps track of the score, explains the rules, and hosts the game.

But Alexa doesn’t ask the questions; that honour goes to locals from the 20 cities featured in the game. Real natives of each city provide the voices that greet players, offer assistance, and ask questions.

Of course, with 20 natives, each with many hundreds of lines of dialogue, the audio data was going to pile up quickly. The project needed a tool to manage all this audio. Which is where I came in!

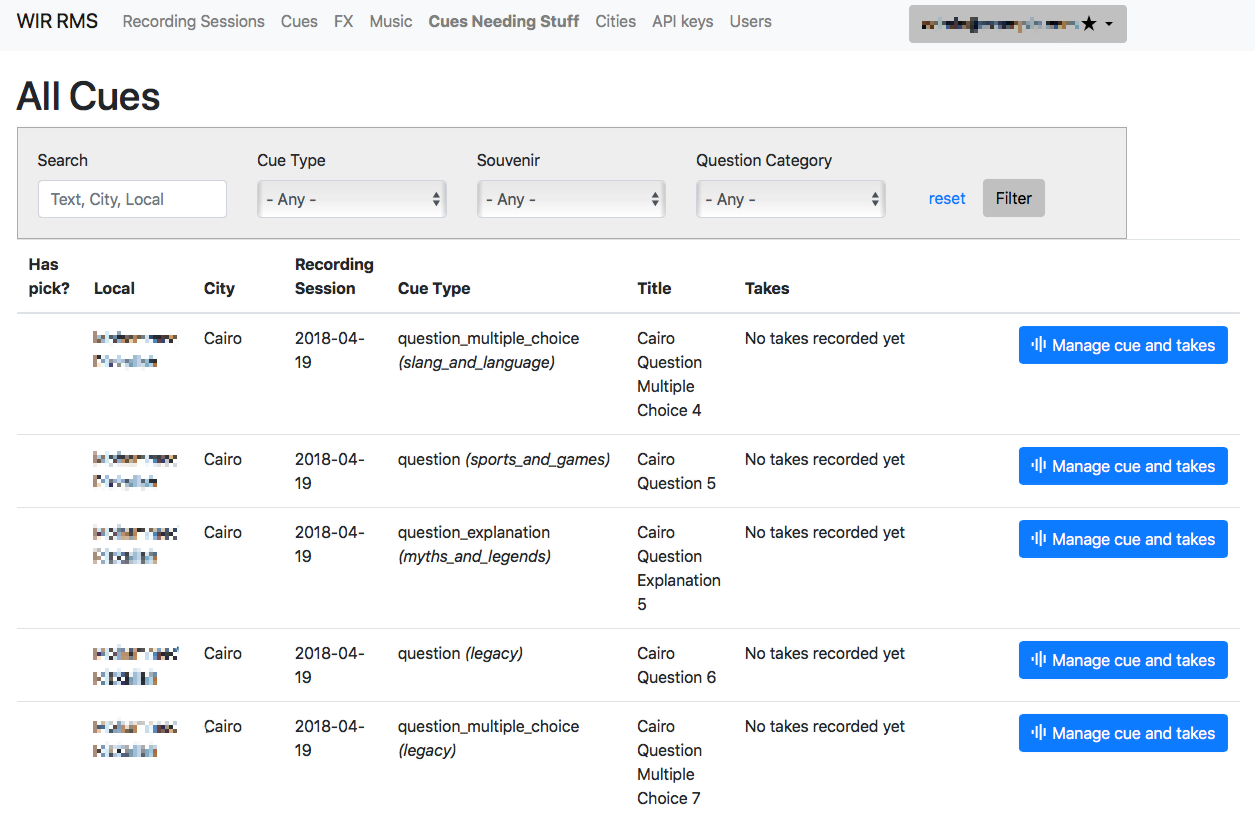

I worked on producing what I called an “RMS” - Recording Management System - for the game. It took processes that had relied on spreadsheets and files stored in the cloud, and structured them a little more rigidly. Producers could populate the system with recording sessions, and store which cues were to be recorded by each voice in those sessions; the sound team could upload multiple takes of each cue, allowing the production team to select their favourites; and the RMS would offer a map of all its content as JSON for the development team to consume during their build process.

A data view in the RMS
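To make the idea of a JSON content map concrete, here is a minimal sketch in Python. It is an illustration only, not the RMS's actual schema or code: the `Cue` and `Voice` classes, their field names, and the shape of the output are all assumptions. It models voices that each have cues, cues that each have several uploaded takes, and a manifest that lists only the take the production team selected.

```python
import json
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Cue:
    """One line of dialogue (hypothetical model, not the real RMS schema)."""
    cue_id: str
    takes: List[str] = field(default_factory=list)  # every uploaded take
    selected_take: Optional[str] = None              # the producers' favourite


@dataclass
class Voice:
    """One local voice, keyed here by city (an assumed identifier)."""
    city: str
    cues: List[Cue] = field(default_factory=list)


def build_manifest(voices: List[Voice]) -> Dict[str, Dict[str, str]]:
    """Map each city to its cues, keeping only cues with a selected take."""
    manifest: Dict[str, Dict[str, str]] = {}
    for voice in voices:
        manifest[voice.city] = {
            cue.cue_id: cue.selected_take
            for cue in voice.cues
            if cue.selected_take is not None
        }
    return manifest


def manifest_json(voices: List[Voice]) -> str:
    """Serialise the manifest with sorted keys so builds get stable output."""
    return json.dumps(build_manifest(voices), indent=2, sort_keys=True)
```

A build script could fetch a document like this and bundle the referenced files. Cues without a selected take are left out, so the build only ever sees audio that has been approved.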

Building the RMS required me to quickly understand a few separate domains. Firstly, the game: what content needed to be stored? What was a sensible way of structuring it? How much process should be enforced or left implicit? (In the end, there was very little ‘workflow’ in the app, as the small team had high levels of trust and worked together in person.) Secondly, what would be the best scenario for the development team? Ideally, something machine-parsable, that could be bundled into applications at build time, and that was reliable as a source of truth. And finally, it was important to make it as simple as possible for the audio team to use - they were focused on recording all the content! To that end, I implemented simple drag-and-drop upload direct to the appropriate screens. I also handled media transcoding inside the application: all the audio team had to upload were original WAV files, and I set them up to be automatically processed with ffmpeg to produce files at the bitrate the Amazon systems required.
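The transcoding step can be sketched roughly as follows. This is a hedged illustration rather than the code the RMS used: the function names are hypothetical, and the MP3 output, 48 kbps bitrate, and 24 kHz sample rate are assumed targets chosen for the example, not figures taken from the project. The `ffmpeg` flags themselves (`-i`, `-codec:a`, `-b:a`, `-ar`, `-y`) are standard.

```python
import subprocess
from pathlib import Path
from typing import List

# Assumed output settings for illustration; the project's real values
# are not stated in this write-up.
TARGET_BITRATE = "48k"
TARGET_SAMPLE_RATE = 24000


def build_ffmpeg_command(
    source: Path,
    dest: Path,
    bitrate: str = TARGET_BITRATE,
    sample_rate: int = TARGET_SAMPLE_RATE,
) -> List[str]:
    """Build the ffmpeg argument list for turning one WAV into an MP3."""
    return [
        "ffmpeg",
        "-y",                      # overwrite an existing output file
        "-i", str(source),         # the original WAV upload
        "-codec:a", "libmp3lame",  # encode the audio stream as MP3
        "-b:a", bitrate,           # target audio bitrate
        "-ar", str(sample_rate),   # target sample rate in Hz
        str(dest),
    ]


def transcode_upload(source: Path, output_dir: Path) -> Path:
    """Transcode an uploaded WAV; raises CalledProcessError if ffmpeg fails."""
    output_dir.mkdir(parents=True, exist_ok=True)
    dest = output_dir / (source.stem + ".mp3")
    subprocess.run(
        build_ffmpeg_command(source, dest),
        check=True,
        capture_output=True,
    )
    return dest
```

Keeping command construction separate from execution means the settings can be checked without ffmpeg installed, and an upload handler only needs to call `transcode_upload` once the original file has been saved.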

The project was a pacy piece of work, with lots of iteration. As the recording process went on, I continued to add a number of tweaks to meet the needs of either the developers or the content teams; lots of quality-of-life improvements occurred through the lifespan of the project.

The whole project came together over a handful of weeks. Whilst it was not a hugely complex project, it was a fast-moving one, and drew on a lot of prior experience, be it quickly flinging up simple CMSes, or processing media using system tools. And, of course, it drew on my experience of being a developer myself, and working out what would be useful for someone else to consume. It was only a small contribution to the game as a whole, but I’m pleased with the marked improvements it offered.