Lunar Energy: Data Sonification

Music as a design material: describing energy data with sound

In early 2024 I worked with Lunar Energy on representing data with sound: a short exploration of what might be possible in the domain.

Matt Jones has already written a bit about the project here, introducing it and the brief. I’d like to dive into a bit more technical detail - both in terms of software and code, and in terms of thinking about music as a design material.

Coming up:

- The Project

- On Data Sonification

- Sketching Data-driven Music

- Sketching in Ableton

- Sharing Work in Progress

- Breaking Out The Big Horns

- Programming Data Sonification

- Final Words

The Project

The top-level brief looked at using sound and music to describe Gridshare energy data: “What might Gridshare sound like?” Lunar Design had already conducted data visualisation explorations. I would look at those same datasets for my sonic explorations.

The final goal was something that could be automated if taken to production - rendering audio offline on a cloud server, in response to data sent to it. So after a first sketching phase, producing early sonic explorations, we described the next step as building an engine: working on tools to make the process repeatable and data-driven.

But to begin with, we had to sketch - which for music, means composing. I wasn’t just going to be writing code or ‘solving problems’ here; I had to invent new musical ideas from scratch. That’s usually something I do for myself; I’ve never had to compose for a client before! But I bit the bullet and started writing music.

As Matt describes, I chose to begin by “playing along” with the videos of our visualisations - finding sounds, scales, patterns that might fit with what we had going on. Simultaneously, I was thinking about what could be controlled and automated, and how.

On Data Sonification

I tend to think of “turning data into sound” as a sliding scale. At one end is a very pure kind of Data Sonification - mapping data quite specifically to individual sonic elements, and seeing what emerges. This is a great way of trying to expose patterns and insight in datasets you might otherwise not notice; sonification as a tool for discovery.

At the other end of that sliding scale is Dynamic Scoring: a musical score that responds to changes in data. This happens not in a direct mapping between each field and a musical control, but in higher-level, broader abstractions. Videogame soundtracks, powered by tools like FMOD or Wwise, are a great example of this: facets of game data are fed into the music engine, and it leads to changes in the sound - whether it’s “playing a whole new section of music” or “adjusting effects levels on the current track”.

For reasons I’ll go into shortly, I leant strongly towards this second approach: it may be less ‘pure’ as sonification goes, but I think it makes for output that’s more pleasing to listen to, and crucially, more legible. (Audible?)

Sketching Data-driven Music

Music is often made out of a number of different parts playing simultaneously - instruments, voices, sound. I personally believe that, on first listen, most people can only pick out a limited number of simultaneous things going on in a piece of music. And, more importantly, they can only pick out differences going on in different domains.

For instance, in a piece of orchestral music, it might be easy to differentiate between what the different sections are doing, because they all sound different: high strings, low strings, high wind, low wind, horns, percussion. But picking out what individual parts are doing - what’s the difference between first and second violin - requires closer listening, and it’s just not immediate.

Given that, I wanted the music to work in broad brushstrokes; pick a limited number of domains to manipulate, and make those manipulations really identifiable. That’s not a problem - it’s exactly what a lot of functional music (music written for a purpose, like a soundtrack or opera score) already does. But it was going to limit the number of data-sources we could apply simultaneously.

What can we change about music? Some of the domains we can consider in generative sound include:

- tempo - is the music fast or slow as a whole?

- speed - are individual parts playing notes quickly or slowly within the beat?

- pitch - what are those notes? are they in a key that sounds “jolly” or “mournful”? How are they being picked?

- range - are the notes “high” or “low”? Anything more complex is hard to pick out.

- timbre - the quality of the sound itself. In an orchestra, that comes down to what instrument is playing it; in electronic sound, it’s the parameters of an electronic instrument that determine this. For instance, “is the sound ‘dark’ or ‘bright’”?

In generative music, the next question is what ‘levers’ to connect up to these parameters. I was interested in making sonification that would be ‘glanceable’ (or an auditory equivalent of that!): clearly understandable in its first playback. So that meant wiring up a few variables, clearly identifiable in data, to clearly distinguishable sonic elements.

This meant answering the questions one often has to answer with functional music. What is going on? What’s the story we want to tell? Who’s the ‘hero’? How could we illustrate that in sound? And what in the data supports it? We’re just doing it with data (and a visualisation of it) rather than a written narrative.

I picked two stories to tell with the score: “Gridshare rises to the occasion” and “solar energy makes things possible”. I picked a few aspects of the data that could tell this: the ‘general level of activity’ (how much charging/discharging is going on at a point in time, regardless of direction); ‘balance of charging/discharging’ indicating whether energy was flowing in from the sun, or being sent out to the grid.

In my first sketch, the balance of charge/discharge affected timbre - ‘bright’ sounds represented discharge (think: ‘excited electrons’); ‘deep’ sounds represented charging (think: ‘slowly warming up’). I used the ‘general level of activity’ to control the rhythmic complexity of patterns.
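To make those two levers concrete, here is a minimal Python sketch of how ‘activity’ and ‘balance’ might be derived from a single reading. It is an illustration, not the project’s code: the field names (`charge_kw`, `discharge_kw`), the normalising maximum, and the 200 Hz to 8 kHz cutoff range are all assumptions.

```python
def activity_and_balance(charge_kw, discharge_kw, max_total_kw):
    """Reduce one reading to two controls.

    activity: 0..1, how much is going on regardless of direction.
    balance: -1..1, where -1 is all charging and +1 is all discharging.
    All parameter names and units here are hypothetical.
    """
    total = charge_kw + discharge_kw
    activity = min(total / max_total_kw, 1.0) if max_total_kw > 0 else 0.0
    balance = (discharge_kw - charge_kw) / total if total > 0 else 0.0
    return activity, balance


def brightness_cutoff_hz(balance, low_hz=200.0, high_hz=8000.0):
    """Map balance to a filter cutoff: discharging sounds brighter.

    The interpolation is exponential, so equal steps in balance are
    equal musical intervals. The frequency range is an assumed one.
    """
    position = (balance + 1.0) / 2.0  # rescale -1..1 to 0..1
    return low_hz * (high_hz / low_hz) ** position
```

A reading that is mostly charging gives a negative balance and therefore a lower, ‘deeper’ cutoff; the same activity value could separately drive rhythmic density.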

Sketching in Ableton

I sketched in Ableton Live, a Digital Audio Workstation, with one ear on writing music that could be written in code later. That meant, to begin with, entirely synthetic sounds, and generative processes that could be described numerically.

Rhythmic complexity is a fun thing to play with: we don’t just have to choose between “fast notes” or “slow notes”. Euclidean Rhythms - as described in this paper, and popularised in many electronic music plugins and synth modules - allow a couple of numbers to describe all manner of rhythm, and lead to different outcomes with simple changes. These were an ideal fit for my needs - as the energy gets busier, so does the rhythm, and in a fairly organic way.
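As an illustration of how little it takes to describe one of these rhythms, here is a short Python sketch. It uses a simple modular-arithmetic shortcut rather than Bjorklund’s algorithm, so the patterns it produces are evenly spread but may be rotations of the ones given in the paper. The `rhythm_for_activity` helper and its 0..1 `activity` input are assumptions made for this example.

```python
def euclidean_rhythm(pulses, steps):
    """Spread `pulses` onsets as evenly as possible over `steps` slots.

    Returns a list of 1s (hits) and 0s (rests).
    """
    if steps <= 0:
        raise ValueError("steps must be positive")
    pulses = max(0, min(pulses, steps))
    return [1 if (i * pulses) % steps < pulses else 0 for i in range(steps)]


def rhythm_for_activity(activity, steps=16):
    """Hypothetical mapping: busier data means more onsets per bar."""
    activity = max(0.0, min(activity, 1.0))
    return euclidean_rhythm(round(activity * steps), steps)


print(euclidean_rhythm(3, 8))  # [1, 0, 0, 1, 0, 0, 1, 0]
```

Two integers are the whole description, which is what makes the idea easy to drive from a single data value.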

Something similar was possible with melody: picking a scale, and thus a ‘bucket of notes’ to represent an idea, meant that I could control how wide a range of notes was picked from a bucket - the range of an arpeggiator, quantised to a scale. Number goes up, and the part becomes more expansive.
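The ‘bucket of notes’ idea can be sketched in a few lines of Python. This is an illustrative stand-in for the Max For Live arpeggiator, not a description of how it works internally; the major scale, the root of middle C (MIDI note 60), the two-octave ceiling, and the three-note minimum are all assumed values.

```python
MAJOR_SCALE = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets within one octave


def scale_notes(root_midi, intervals, octaves):
    """Every scale note from the root up to the root `octaves` above."""
    notes = [root_midi + 12 * octave + step
             for octave in range(octaves)
             for step in intervals]
    notes.append(root_midi + 12 * octaves)  # include the top root
    return notes


def arpeggio_bucket(control, root_midi=60, intervals=MAJOR_SCALE,
                    max_octaves=2, min_notes=3):
    """A control of 0..1 widens the range of notes the arpeggio draws on."""
    control = max(0.0, min(control, 1.0))
    full = scale_notes(root_midi, intervals, max_octaves)
    count = min_notes + round(control * (len(full) - min_notes))
    return full[:count]


def arpeggiate_up(bucket, steps):
    """Cycle upwards through the bucket for `steps` notes."""
    return [bucket[i % len(bucket)] for i in range(steps)]
```

With the control at 0 the part circles around three notes; at 1 it climbs through two full octaves, always staying inside the scale.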

Both of these ideas were implemented with off-the-shelf Max For Live tools. Ableton’s “Studio As Instrument” philosophy is ideal for this kind of work, piecing together various components and then mapping physical controls - my faders - to one or more parameters. Each sketch was essentially a custom instrument, designed to accompany a single visualisation.

I “played” the data into these sketches. I connected all the parameters I wanted to control in the sketch to physical faders, using an 8mu - a small set of faders - on my desk to control them. I hit “play” in Ableton, and watched the visualisation, sliding the faders - one for ‘charging/discharging’, one for ‘activity’ - as I parsed the activity. What did this do for the music?

In the sketching process, I iterated both on the sounds and notes being played, and on the mapping of parameters to controls - often mapping one slider (“data source”) to multiple controls across multiple tracks.

Sharing Work in Progress

Throughout the project, I kept a project diary I shared with the Lunar team - a simple Eleventy-powered blog that I regularly updated, posting links to it in Slack. Here’s what I wrote on it about the above sketch at the time:

I have two controls:

- balance describes the see-saw between “charging” and “discharging”

- intensity describes the magnitude of the current scene - so you could have “intense charging” or “intense discharging” or “not very intense but still mainly discharging”, as it were.

I’ve also given discharging and charging their own voices, designed to be heard alongside each other.

- discharging (blue) is a slower, brassier synth, which even when it’s more “hectic” still moves much more slowly than charging.

- charging (yellow) is a more frenetic, sharp lead. As it gets more “hectic”, it gets quicker in its rhythms, and covers a wider range of notes.

- There’s a kick-drum pulse, which goes from every other beat at “low intensity” to every beat at “high intensity”. A ghost note at the end of the bar makes it feel more melodic. I like reflecting intensity both in rhythm and range of pitch.

Breaking Out The Big Horns

At this point, Matt gave me a piece of creative direction. I wish I’d thought of this myself, but that’s the point of external direction. “What would you do if you didn’t have to do this in code?” Rather than constraining myself to what I thought feasible, what else might be possible if I stopped restraining myself?

So I broke out some orchestral libraries and started playing with what a Hollywood soundtrack to these visualisations might sound like. What would Lorne Balfe or Cliff Martinez do?

all that our “data” (my hands on faders) is doing here is controlling volume of some parts of pre-composed music

- hang drum and legato strings are a kind of ostinato, present throughout

- bassier elements - low brass, low spiccato strings - are “discharging” at night

- our high strings and winds are our “charging” hero theme - they emerge in sunlight

And then after aiming for Cliff Martinez and missing, I took similarly wonky aim at Reznor/Ross:

the ‘rules’ -

- mallets as ostinato

- strings are “general amount of stuff going on” (roughly)

- bass and piano are our discharge, but they don’t go to zero, they just drop back

- major-seventh horns are the “charging” fanfare

(It was only after I listened to this back I realised that, unconsciously, the “charging fanfare” was a total lift from Interstellar.)

These sketches don’t really work as things I could make in code. But they did help us expand the idea, showing it had legs beyond my early bleeps and boops; these versions were also easily explainable to other stakeholders.

The answers to those Functional Music Questions I mentioned earlier - here’s some stuff going on; who should I root for? What’s the story? - are incredibly legible in these more cinematic approaches. We’re pre-disposed - primarily through our exposure to cultural norms - to interpret certain sounds, certain keys, certain moods of music in certain ways. The grid lights up with solar energy, those heroic mid-range horns come in, and you know that’s probably who I’m rooting for, the protagonist of this story about an energy grid.

Every time I watched these orchestral sketches, I was reminded how powerful leitmotif really is.

This sketching process was a few days of work up front. It was successful enough that we moved onto the next, longer phase: turning these ideas into code.

Programming Data Sonification

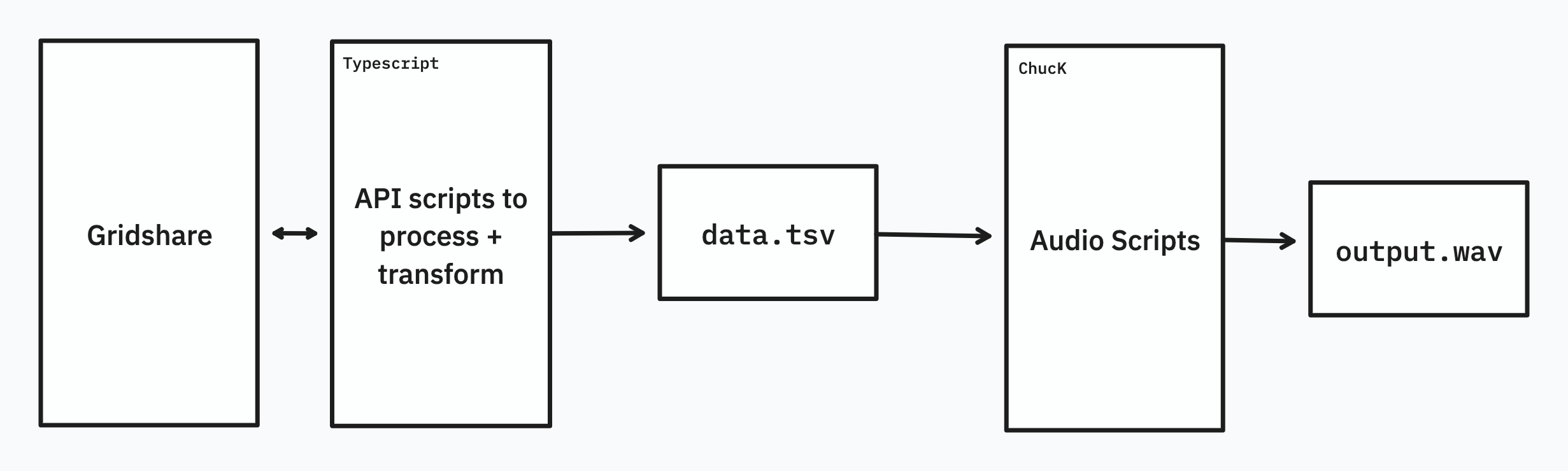

As part of the sketching work, I explored a few options for generating sound programmatically. My main requirements were something that could run offline - in a container or on a server, fed with data, outputting a WAV file; something that was expressive enough to offer the control we’d been afforded by music tools; and something that wouldn’t take too long.

I settled on ChucK, a programming language designed for writing music in. The peers I spoke to about this project - including those who know ChucK - were surprised by this choice, but it worked well for me: the manual was good, it sounded great, and it was straightforward to get running at a command-line.

ChucK is a nicely expressive language for describing music. Its key feature is that it is “strongly timed”; it is designed to produce audio where things that are meant to happen at the same time really do (to accuracy suitable for DSP, measured in microseconds). It can handle multiple processes/functions - “shreds” - running simultaneously - being “sporked” - and keeping them all in sync.

I evaluated the language with my “hello world” for music programming: a version of Steve Reich’s Piano Phase. (If you’re interested in seeing what ChucK looks like, here’s my code for Piano Phase.) I then used this as a foundation to explore processing data, piping that to controls (such as a filter), and confirming it’d render WAV files as I hoped.

With an environment chosen, and some sketches to implement, the rest of the project came down to coding. I wrote scripts to parse CSV and turn it into data files that ChucK could parse more easily; I rebuilt parts of the Ableton setup in ChucK code, notably Euclidean rhythm generators and arpeggiators; and I recreated the simple sonic aesthetics of my early sketches with drum samples and ChucK’s in-built synthesis tools.
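The CSV step might look something like the Python sketch below. The column names and the output format (one line of space-separated numbers per row) are assumptions for illustration; the idea is simply that a file of bare numbers is easier for a music language to read than quoted, headed CSV.

```python
import csv
import io


def csv_to_control_lines(csv_file, columns=("charge_kw", "discharge_kw")):
    """Turn headed CSV rows into plain lines of space-separated floats.

    `csv_file` is any open text file; `columns` names the fields to keep
    (hypothetical names, chosen for this example).
    """
    lines = []
    for row in csv.DictReader(csv_file):
        values = [float(row[name]) for name in columns]
        lines.append(" ".join(f"{value:.4f}" for value in values))
    return lines


# Usage with an in-memory file standing in for a real export.
sample = io.StringIO(
    "timestamp,charge_kw,discharge_kw\n"
    "2024-01-01T00:00,1.5,0\n"
    "2024-01-01T00:05,0.25,3\n"
)
for line in csv_to_control_lines(sample):
    print(line)
```

Dropping the timestamp assumes the rows are evenly spaced in time, so the position of a line in the file is enough to place it in the music.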

Refreshingly, it only took a little tweaking to get my entirely programmatic output to sound reasonably close to our original sketches. I passed my data files to ChucK in a single command-line call, and a few minutes later - ChucK renders in roughly real-time - I had a WAV file.

I produced my final demos by aligning the audio file with the start of the earlier video sketches, and rendering out a composite video.

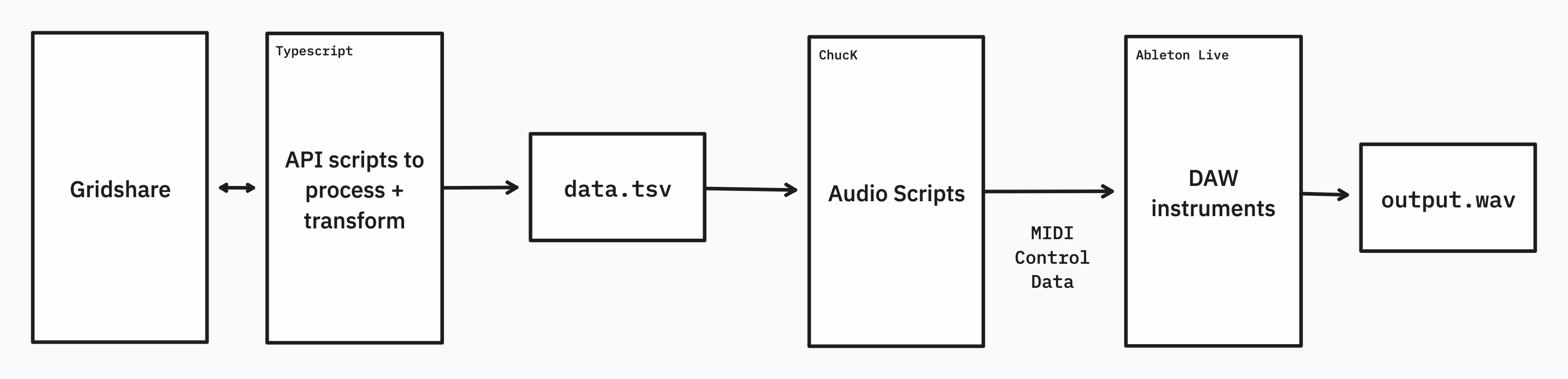

There was one final exploration: what if we relaxed the need for offline rendering? I realised that we could still use ChucK to generate control data, but instead of then passing it to ChucK’s internal DSP and producing notes/rhythms inside ChucK, that control data could be sent as MIDI over to our Ableton sketches. Effectively, we were going back to the first sketches, replacing me-as-Mechanical-Turk with ChucK.
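For a sense of what that control data looks like on the wire, here is a small Python sketch that builds MIDI Control Change messages by hand. In the project this job was done by ChucK; the Python version, the controller number (20), and the `balance` range of -1..1 are assumptions for illustration. The message layout itself is standard MIDI: a status byte of `0xB0` plus the channel, then a controller number and a value, each 0 to 127.

```python
def control_change(channel, controller, value):
    """Build the three bytes of a MIDI Control Change message."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0..15")
    if not 0 <= controller <= 127:
        raise ValueError("controller must be 0..127")
    if not 0 <= value <= 127:
        raise ValueError("value must be 0..127")
    return bytes([0xB0 | channel, controller, value])


def scale_to_midi(amount):
    """Map a 0..1 control onto MIDI's 0..127 range, clamping out-of-range input."""
    amount = max(0.0, min(amount, 1.0))
    return round(amount * 127)


def balance_to_midi(balance):
    """Map a -1..1 balance (charging to discharging) onto 0..127."""
    return scale_to_midi((balance + 1.0) / 2.0)


# A fully 'discharging' reading on channel 1, hypothetical controller 20.
message = control_change(0, 20, balance_to_midi(1.0))
print(message.hex())  # b0147f
```

On the receiving side, each controller number would be mapped to one or more parameters, in the same way a physical fader is.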

This led to really great results: it combined the logic of the Max For Live sequencers with data-driven control, and could use the high-quality internal synthesis of Ableton’s instruments. I’m pretty pleased with how it turned out.

Final Words

This whole project ran for just under three weeks of highly non-consecutive time. We spent a handful of days on early sketching, both to get a feel for what things might sound like, and what we could implement them in, before diving deep into code for a couple of weeks to produce end-to-end demos.

This project threaded a needle through a wide set of skills - composition, data manipulation, programming, orchestrating command-line tools - and emerged as a technical design project with music as its core material. It was fascinating and exciting to work on, and it’s a domain I’d love to return to some time.